filmov

tv

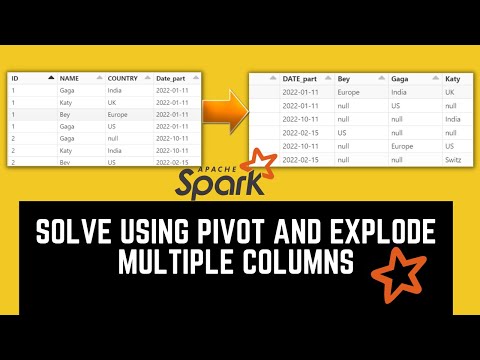

How to Explode an Array into 2 Columns in PySpark

Показать описание

Learn how to efficiently track package delivery routes in PySpark by exploding an array of routes into two columns using posexplode and lead functions.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Exploding an array into 2 columns

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

How to Explode an Array into 2 Columns in PySpark

When working with data, particularly in the logistics or transportation industry, it's essential to track packages efficiently. Tracking the hops made by a package — from the warehouse to the customer — can present challenges, especially when data is organized in a single column.

In this guide, we will explore a common scenario: you have a table containing routes for packages represented in a space-separated string format, and you need to transform this into a more useful two-column format. Let’s dive into the problem and its solution!

The Problem

Imagine you have the following data in a table where the column "Route" holds the various stops a package makes, beginning from the warehouse and ending at the customer:

[[See Video to Reveal this Text or Code Snippet]]

The challenge here is to explode this data such that we can have an output where each hop from one point to the next is displayed in a separate row. The expected output should look similar to this:

[[See Video to Reveal this Text or Code Snippet]]

The Solution

To achieve this, we will use PySpark, a powerful data processing library for big data. Specifically, we can utilize two functions: posexplode and lead. The combination of these functions allows us to index the array values and dynamically fetch the next value in the sequence.

Step-by-Step Guide

1. Setting Up Your Environment

Ensure you have PySpark installed and properly configured in your working environment. You can install PySpark using pip:

[[See Video to Reveal this Text or Code Snippet]]

2. Import Required Libraries

Start by importing necessary PySpark libraries:

[[See Video to Reveal this Text or Code Snippet]]

3. Create DataFrame

Assuming df is your existing DataFrame containing the route data:

[[See Video to Reveal this Text or Code Snippet]]

4. Explode the Route Column

Use posexplode to split the Route string and create two new columns, start and end:

[[See Video to Reveal this Text or Code Snippet]]

Here’s what’s happening in this code:

posexplode: This function allows us to split the Route string into individual hops while also providing an index.

lead: This function helps us retrieve the subsequent hop (the next stop) to form the "end" column.

window function: This organizes the data by ID and the index order.

5. View the Result

Finally, display the transformed DataFrame:

[[See Video to Reveal this Text or Code Snippet]]

This will output the exploded columns as desired, with each hop clearly detailed from start to end.

Final Thoughts

Implementing this transformation is simple and efficient in PySpark. By using posexplode and lead, we can effectively track the lifecycle of package deliveries, providing clear insights into logistics processes. This method not only organizes data neatly but also enables further analyses and optimizations.

Feel free to adapt this approach to your specific scenarios, ensuring your data insights are always at your fingertips!

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Exploding an array into 2 columns

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

How to Explode an Array into 2 Columns in PySpark

When working with data, particularly in the logistics or transportation industry, it's essential to track packages efficiently. Tracking the hops made by a package — from the warehouse to the customer — can present challenges, especially when data is organized in a single column.

In this guide, we will explore a common scenario: you have a table containing routes for packages represented in a space-separated string format, and you need to transform this into a more useful two-column format. Let’s dive into the problem and its solution!

The Problem

Imagine you have the following data in a table where the column "Route" holds the various stops a package makes, beginning from the warehouse and ending at the customer:

[[See Video to Reveal this Text or Code Snippet]]

The challenge here is to explode this data such that we can have an output where each hop from one point to the next is displayed in a separate row. The expected output should look similar to this:

[[See Video to Reveal this Text or Code Snippet]]

The Solution

To achieve this, we will use PySpark, a powerful data processing library for big data. Specifically, we can utilize two functions: posexplode and lead. The combination of these functions allows us to index the array values and dynamically fetch the next value in the sequence.

Step-by-Step Guide

1. Setting Up Your Environment

Ensure you have PySpark installed and properly configured in your working environment. You can install PySpark using pip:

[[See Video to Reveal this Text or Code Snippet]]

2. Import Required Libraries

Start by importing necessary PySpark libraries:

[[See Video to Reveal this Text or Code Snippet]]

3. Create DataFrame

Assuming df is your existing DataFrame containing the route data:

[[See Video to Reveal this Text or Code Snippet]]

4. Explode the Route Column

Use posexplode to split the Route string and create two new columns, start and end:

[[See Video to Reveal this Text or Code Snippet]]

Here’s what’s happening in this code:

posexplode: This function allows us to split the Route string into individual hops while also providing an index.

lead: This function helps us retrieve the subsequent hop (the next stop) to form the "end" column.

window function: This organizes the data by ID and the index order.

5. View the Result

Finally, display the transformed DataFrame:

[[See Video to Reveal this Text or Code Snippet]]

This will output the exploded columns as desired, with each hop clearly detailed from start to end.

Final Thoughts

Implementing this transformation is simple and efficient in PySpark. By using posexplode and lead, we can effectively track the lifecycle of package deliveries, providing clear insights into logistics processes. This method not only organizes data neatly but also enables further analyses and optimizations.

Feel free to adapt this approach to your specific scenarios, ensuring your data insights are always at your fingertips!

0:02:58

0:02:58

0:23:00

0:23:00

0:01:28

0:01:28

0:18:03

0:18:03

0:03:12

0:03:12

0:08:35

0:08:35

0:01:32

0:01:32

0:03:56

0:03:56

0:01:57

0:01:57

0:01:34

0:01:34

0:01:07

0:01:07

0:01:44

0:01:44

0:06:57

0:06:57

0:02:16

0:02:16

0:00:51

0:00:51

0:01:56

0:01:56

0:22:01

0:22:01

0:04:15

0:04:15

0:01:24

0:01:24

0:11:44

0:11:44

0:14:50

0:14:50

0:10:09

0:10:09

0:00:59

0:00:59

0:00:57

0:00:57