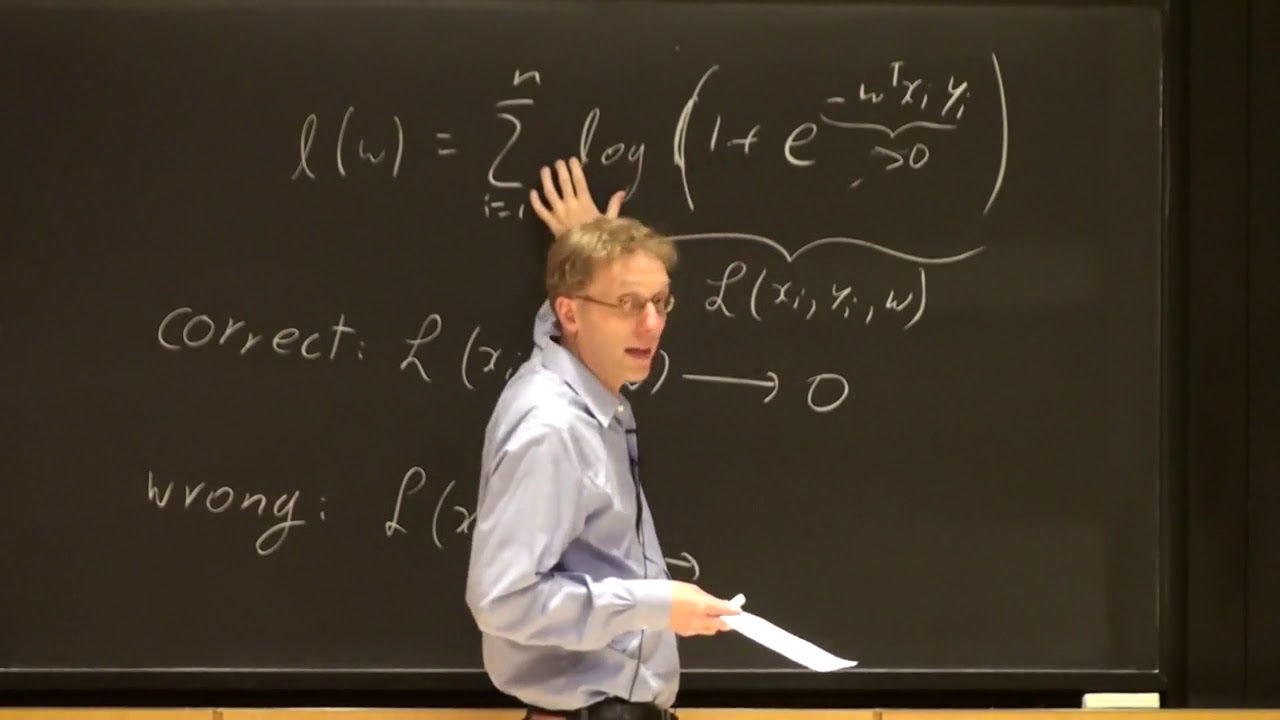

Machine Learning Lecture 13 'Linear / Ridge Regression' -Cornell CS4780 SP17

Lecture Notes:

Machine Learning Lecture 13 'Linear / Ridge Regression' -Cornell CS4780 SP17

ML Lecture 13: Unsupervised Learning - Linear Methods

Lecture 13 Linear Machine

Lecture 13 : Linear Machine

MLAI Lecture 13: Linear Regression (no sound)

Introduction to Machine Learning, Lecture-13( Probabilistic Interpretation of Linear Regression)

Cornell CS 5787: Applied Machine Learning. Lecture 13. Part 1: Boosting and Ensembling

Machine Learning course- Shai Ben-David: Lecture 13

Types Of Machine Learning | 61/100 Days of Python Algo Trading

Machine Learning Lecture 14 '(Linear) Support Vector Machines' -Cornell CS4780 SP17

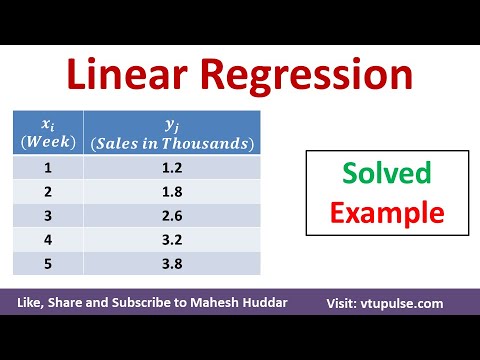

Linear Regression Algorithm – Solved Numerical Example in Machine Learning by Mahesh Huddar

Stanford CS229: Machine Learning | Summer 2019 | Lecture 13-Statistical Learning Uniform Convergence

Stanford CS229: Machine Learning | Summer 2019 | Lecture 4 - Linear Regression

MIT: Machine Learning 6.036, Lecture 13: Clustering (Fall 2020)

CS480/680 Lecture 13: Support vector machines

Lecture 13: Non Parametric Linear System Identification

Lecture 13: Randomized Matrix Multiplication

Applied Machine Learning 2019 - Lecture 13 - Parameter Selection and Automatic Machine Learning

Lecture 13 | Generative Models

Lec-4: Linear Regression📈 with Real life examples & Calculations | Easiest Explanation

Lecture 13 Multiple Linear Regression

Probabilistic ML - Lecture 13 - Computation and Inference

Lecture 13: Convolutional Neural Networks

Cornell CS 5787: Applied Machine Learning. Lecture 13. Part 2: Additive Models

Комментарии

0:39:50

0:39:50

1:40:21

1:40:21

0:29:18

0:29:18

0:29:18

0:29:18

0:48:07

0:48:07

0:27:57

0:27:57

0:21:48

0:21:48

1:20:09

1:20:09

0:09:25

0:09:25

0:49:59

0:49:59

0:05:30

0:05:30

1:55:15

1:55:15

1:48:00

1:48:00

1:15:28

1:15:28

1:17:43

1:17:43

1:29:21

1:29:21

0:52:24

0:52:24

1:22:07

1:22:07

1:17:41

1:17:41

0:11:01

0:11:01

0:45:14

0:45:14

1:35:57

1:35:57

1:22:12

1:22:12

0:26:05

0:26:05