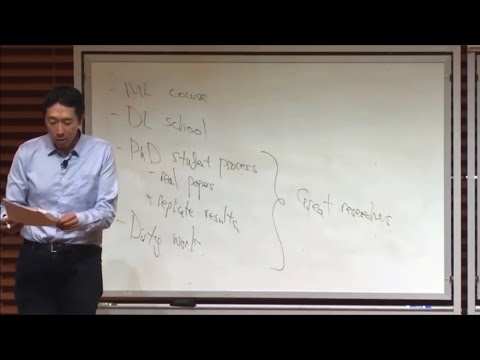

Stanford CS229: Machine Learning | Summer 2019 | Lecture 4 - Linear Regression

Anand Avati

Computer Science, PhD

To follow along with the course schedule and syllabus, visit:

What advice do you have for getting started in AI & Machine Learning? - Fei-Fei Li & Andrew ...

Andrew Ng's Secret to Mastering Machine Learning - Part 1 #shorts

How I’d learn ML in 2024 (if I could start over)

Is the Machine Learning Specialization ACTUALLY Worth It? (Andrew Ng)

Advice for machine learning beginners | Andrej Karpathy and Lex Fridman

Is Andrew Ng’s machine learning course too mathematical?

Stanford Machine Learning Specialization - Review 2024 - Andrew Ng Machine Learning Specialization

stanford online has full courses on machine learning

Harvard and Stanford's FREE A.I. courses!!!!

Andrew Ng: Opportunities in AI - 2023

3 Best FREE Machine Learning Courses 2024🔥 | #lmtshorts #shorts

What is our ethical responsibility with AI & Machine Learning? - Fei-Fei Li & Andrew Ng

How I would learn Machine Learning (if I could start over)

First impressions of Andrew Ng’s machine learning course

StanFord CS229: Machine Learning (Autumn 2018)| Lecture 1: Welcome! | Re-up (1080p)

Andrew Ng's Secret to Mastering Machine Learning - Part 2 #shorts

Stanford's FREE data science book and course are the best yet

Andrew Ng, Founder of DeepLearning.AI and Co-Founder of Coursera, pictures a better world

Why and how to start a Machine Learning career | Andrew Ng

Andrew Ng Machine Learning Career Advice

Stanford CS229: Machine Learning | Summer 2019 | Lecture 1 - Español

Stanford Professor's Secret: Late Night Filming Unlocks AI Learning Potential

CS229 Machine Learning, 2017. Final project: Convex Optimization for Machine Learning

Stanford CS25: V4 I Jason Wei & Hyung Won Chung of OpenAI
