filmov

tv

Lecture 13: Randomized Matrix Multiplication

Показать описание

MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018

Instructor: Gilbert Strang

This lecture focuses on randomized linear algebra, specifically on randomized matrix multiplication. This process is useful when working with very large matrices. Professor Strang introduces and describes the basic steps of randomized computations.

License: Creative Commons BY-NC-SA

Instructor: Gilbert Strang

This lecture focuses on randomized linear algebra, specifically on randomized matrix multiplication. This process is useful when working with very large matrices. Professor Strang introduces and describes the basic steps of randomized computations.

License: Creative Commons BY-NC-SA

Lecture 13: Randomized Matrix Multiplication

Sec 2.1 rec matrix multiplication

Lecture 13 - Matrix operations (IV) & Midterm review (W7D2)

Lecture 13: Fundamental Matrix

Introduction to Probability - Verifying Matrix Multipilication

Lecture 13: Linear Algebra ii

Implementing Randomized Matrix Algorithms in Parallel and Distributed Environments, Michael Mahoney

Lecture 13 - Matrix operations IV & Midterm review - W7D2

Math 350 - Lecture 13 - More advanced matrix manipulation

Lecture 13, Tutorial 5: Matrix Factorisation, Movie Recommendation

Matrices - Lecture 13 - Levy-Desplanques theorem, equivalence of matrix norms, errors in inverses

Scientific Computing Lecture 13: Linear Algebra with BLAS and LAPACK

MAT 140 Lesson 7: Matrix Multiplication

ADSP - 13 Matched Filters - 03 Maximizing SNR as Matrix Multiplication

Introduction to Probability - Verifying Matrix Multipilication

ARTIFICIAL INTELLIGENCE LECTURE 13

Randomized Algorithms in Linear Algebra

Matrix Multiplication | Array In C Programming Part 10

Lecture 13 - Fundamental Matrix - 2014

AI4OPT Tutorial Lectures: Randomized Matrix Computations (Part II)

An Introduction to Randomized Algorithms for Matrix Computations Part 1

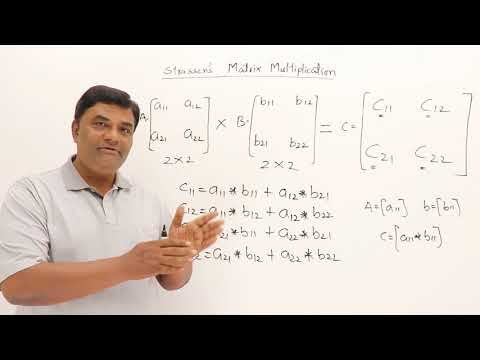

2.9 Strassens Matrix Multiplication

ARM Lecture 13 Programming LCD with Matrix Keypad Interface

EfficientML.ai Lecture 13 - Transformer and LLM (Part II) (MIT 6.5940, Fall 2023, Zoom)

Комментарии

0:52:24

0:52:24

0:18:46

0:18:46

1:21:14

1:21:14

0:59:10

0:59:10

0:26:28

0:26:28

1:15:06

1:15:06

0:30:05

0:30:05

1:21:14

1:21:14

0:31:52

0:31:52

1:13:24

1:13:24

0:59:17

0:59:17

0:59:52

0:59:52

0:21:46

0:21:46

0:01:01

0:01:01

0:20:25

0:20:25

1:00:27

1:00:27

0:31:33

0:31:33

0:07:58

0:07:58

1:31:30

1:31:30

0:57:30

0:57:30

0:33:26

0:33:26

0:23:40

0:23:40

0:23:52

0:23:52

1:17:03

1:17:03