Machine Learning Lecture 15 '(Linear) Support Vector Machines continued' -Cornell CS4780 SP17

Lecture Notes:

Machine Learning Lecture 14 '(Linear) Support Vector Machines' -Cornell CS4780 SP17

Lecture 15 : Linear Regression

Linear models and simulations | MIT Computational Thinking Spring 2021 | Lecture 15

Pattern Recognition [PR] Episode 15 - Linear Discriminant Analysis - Examples

15 Linear Regression

Eigen values and Eigen vectors (PCA): Dimensionality reduction Lecture 15@ Applied AI Course

Machine Learning Foundations Course – Regression Analysis

Electrical Machines - Transformers Exam Insights Series by Chetan Sir | Next Engineer #sscje #rrbje

15. Linear Programming: LP, reductions, Simplex

ML Lecture 15: Unsupervised Learning - Neighbor Embedding

Machine Learning Lecture 26 'Gaussian Processes' -Cornell CS4780 SP17

Deep Learning Lecture 15: Deep Reinforcement Learning - Policy search

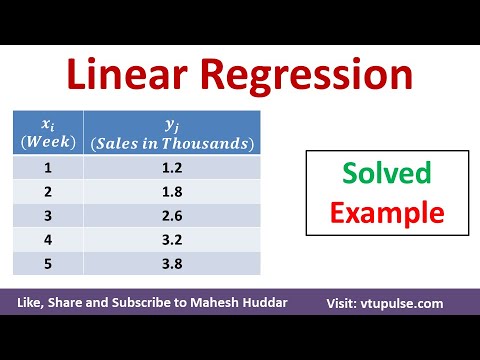

Linear Regression Algorithm – Solved Numerical Example in Machine Learning by Mahesh Huddar

Lecture 15 | Efficient Methods and Hardware for Deep Learning

Machine Learning Course for Beginners

CS231n Winter 2016: Lecture 15: Invited Talk by Jeff Dean

Cornell CS 5787: Applied Machine Learning. Lecture 5b. Part 1: Probabilistic Linear Regression

undergraduate machine learning 17: Linear prediction

SP15 Lecture 21 Part 2 Linear Classifier

Lecture 15 Time Series Modeling

Linear Regression Solved Numerical Part-1 Explained in Hindi l Machine Learning Course

Linear Regression Explained in Hindi ll Machine Learning Course

Lecture 15 | Applied Linear Algebra | Vector Properties | Prof AK Jagannatham

0:50:04

0:49:59

0:16:05

0:53:28

0:11:35

0:32:52

0:23:00

9:33:29

1:01:37

1:22:27

0:30:59

0:52:41

0:54:40

0:05:30

1:16:52

9:52:19

1:14:50

0:17:24

0:51:16

0:08:28

0:42:13

0:06:56

0:14:20

0:33:11