
Eigen values and Eigen vectors (PCA): Dimensionality reduction Lecture 15 @ Applied AI Course


For more information, please visit

#ArtificialIntelligence,#MachineLearning,#DeepLearning,#DataScience,#NLP,#AI,#ML

#ArtificialIntelligence,#MachineLearning,#DeepLearning,#DataScience,#NLP,#AI,#ML
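The lecture title ties eigenvalues and eigenvectors to PCA for dimensionality reduction. A minimal sketch of that link, assuming NumPy and a hypothetical helper `pca_via_eigh` (not taken from the course): center the data, eigen-decompose its covariance matrix, and project onto the eigenvectors with the largest eigenvalues.

```python
import numpy as np

def pca_via_eigh(X: np.ndarray, k: int):
    """Hypothetical helper: project rows of X (n_samples x n_features)
    onto the top-k principal components via the covariance eigen-decomposition."""
    # Center each feature so the covariance is taken about the mean.
    Xc = X - X.mean(axis=0)
    # Sample covariance matrix (n_features x n_features); it is symmetric.
    cov = np.cov(Xc, rowvar=False)
    # eigh is meant for symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Reorder so the largest-variance direction comes first.
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Keep the k eigenvectors (columns) with the largest eigenvalues.
    components = eigvecs[:, :k]
    # Project the centered data onto the retained directions.
    return Xc @ components, eigvals

# Toy 2-D data stretched mostly along the direction (1, 1).
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, t + 0.1 * rng.normal(size=200)])

Z, eigvals = pca_via_eigh(X, k=1)
print(Z.shape)                      # (200, 1)
print(eigvals[0] / eigvals.sum())   # share of total variance kept by the first component
```

`eigh` is used rather than `eig` because a covariance matrix is symmetric, which guarantees real eigenvalues and orthogonal eigenvectors. Each eigenvalue equals the variance of the data along its eigenvector, which is why the eigenvectors with the largest eigenvalues are the ones kept.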

Eigenvectors and eigenvalues | Chapter 14, Essence of linear algebra

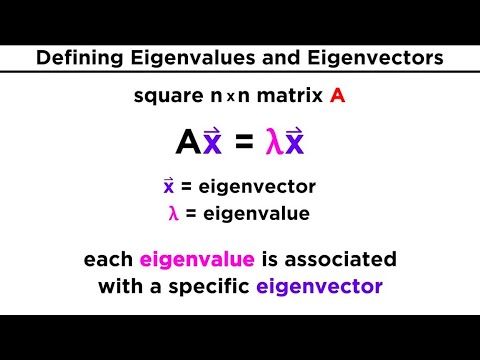

Finding Eigenvalues and Eigenvectors

Eigen values and Eigen vectors in 3 mins | Explained with an interesting analogy

Introduction to eigenvalues and eigenvectors | Linear Algebra | Khan Academy

Eigenvalues and Eigenvectors

Eigenvalues & Eigenvectors : Data Science Basics

3 x 3 eigenvalues and eigenvectors

Eigenvalues and Eigenvectors

❖ Finding Eigenvalues and Eigenvectors : 2 x 2 Matrix Example ❖

What eigenvalues and eigenvectors mean geometrically

Linear Algebra 5.1.1 Eigenvectors and Eigenvalues

🔷15 - Eigenvalues and Eigenvectors of a 3x3 Matrix

Oxford Linear Algebra: Eigenvalues and Eigenvectors Explained

4. Eigenvalues and Eigenvectors

Eigenvectors and eigenvalues - simply explained

15 - What are Eigenvalues and Eigenvectors? Learn how to find Eigenvalues.

Eigenvalues and Eigenvectors, Imaginary and Real

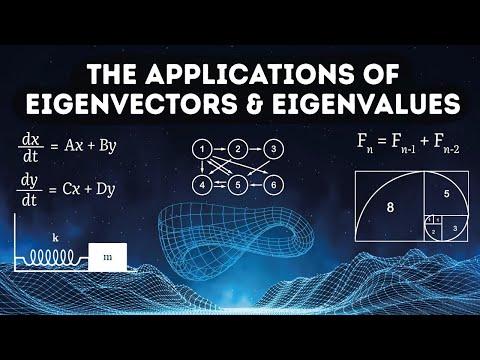

The applications of eigenvectors and eigenvalues | That thing you heard in Endgame has other uses

Real life example of Eigen values and Eigen vectors

How to find the eigenvector of a 3x3 matrix | Math with Janine

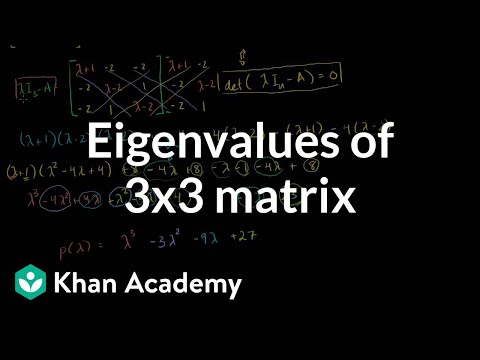

Eigenvalues of a 3x3 matrix | Alternate coordinate systems (bases) | Linear Algebra | Khan Academy

Eigenvalues and Eigenvectors

Find Eigenvalues and Eigenvectors of a 2x2 Matrix

Eigenvalues and Eigenvectors | Properties and Important Result | Matrices
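Several of the titles above work through 2 x 2 examples by hand, including the case where the eigenvalues come out imaginary. A minimal pure-Python sketch of that hand calculation, using hypothetical helpers `eig2x2`, `eigvec2x2` and `residual` (illustrative names, not from any of the videos): solve the characteristic polynomial λ² − tr(A)·λ + det(A) = 0, then pick a vector in the null space of A − λI.

```python
import cmath

def eig2x2(a, b, c, d):
    """Hypothetical helper: eigenvalues of [[a, b], [c, d]] from
    lambda^2 - (a + d) * lambda + (a*d - b*c) = 0."""
    tr = a + d
    det = a * d - b * c
    # cmath.sqrt accepts a negative discriminant, giving complex eigenvalues.
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2

def eigvec2x2(a, b, c, d, lam):
    """Hypothetical helper: one nonzero v with (A - lam*I) v = 0."""
    if b != 0:
        # Row 1 of A - lam*I is [a - lam, b]; v = (b, lam - a) zeroes it.
        return (b, lam - a)
    if c != 0:
        # Row 2 of A - lam*I is [c, d - lam]; v = (lam - d, c) zeroes it.
        return (lam - d, c)
    # A is diagonal: pick the standard basis vector whose diagonal entry is nearest lam.
    return (1, 0) if abs(lam - a) <= abs(lam - d) else (0, 1)

def residual(a, b, c, d, lam, v):
    """Size of A v - lam v; close to 0 for a true eigenpair."""
    x, y = v
    return abs(a * x + b * y - lam * x) + abs(c * x + d * y - lam * y)

# Symmetric example: eigenvalues of [[2, 1], [1, 2]] are 3 and 1.
# Rotation by 90 degrees [[0, -1], [1, 0]] has imaginary eigenvalues +i and -i.
for A in [(2, 1, 1, 2), (0, -1, 1, 0)]:
    for lam in eig2x2(*A):
        v = eigvec2x2(*A, lam)
        print(A, lam, v, residual(*A, lam, v))
```

The rotation matrix shows why some 2 x 2 matrices have no real eigenvectors: no real direction is left unchanged by a 90-degree rotation, so the eigenvalues and eigenvectors are complex.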
