26 - Prior and posterior predictive distributions - an introduction

This video provides an introduction to the

26 - Prior and posterior predictive distributions - an introduction

Prior And Posterior - Intro to Statistics

Prior and Posterior Probabilities in Bayesian Networks

Assisted Lithology Interpretation – Prior & Posterior Probability

Prior And Posterior Solution - Intro to Statistics

Bayesian Statistics: An Introduction

Introduction to Bayesian statistics, part 1: The basic concepts

Bayesian statistics - Summarizing a posterior distribution

Bayesian posterior sampling

An example of how an improper prior leads to an improper posterior

What is a posterior predictive check and why is it useful?

Bayesian posterior probabilities for one-sided alternative hypotheses

Prior and Posterior Distributions

Estimating the posterior predictive distribution by sampling

Bayesian posterior sampling

Posterior & MAP for Normal distribution with unknown precision

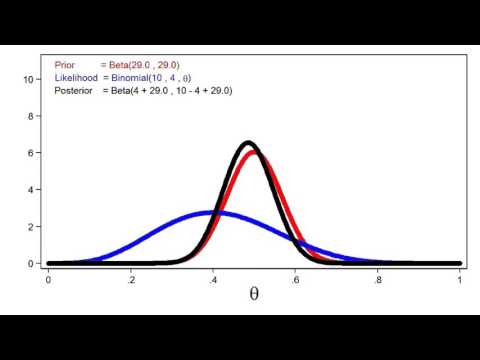

Bayesian fundamentals (Likelihood, Prior, Posterior) in R

Lecture 10a - Prior and Posterior Probability

3. Bayesian Posterior Distributions & Conjugate Priors

[Bayesian inference for a mean] Prior and posterior for mean and standard deviation part 1

[Bayesian inference for a mean] Prior and posterior for mean and standard deviation part 3

Decision Analysis 5: Posterior (Revised) Probability Calculations

Posterior Density - Introduction

Prior and Posterior Distribution (Poisson & Exponential Distribution)

Комментарии

0:05:44

0:05:44

0:02:20

0:02:20

0:11:51

0:11:51

0:01:01

0:01:01

0:00:27

0:00:27

0:38:19

0:38:19

0:09:12

0:09:12

0:06:41

0:06:41

0:07:23

0:07:23

0:09:41

0:09:41

0:11:16

0:11:16

0:03:28

0:03:28

0:28:36

0:28:36

0:12:26

0:12:26

0:07:23

0:07:23

0:30:31

0:30:31

0:44:48

0:44:48

0:08:37

0:08:37

0:13:14

0:13:14

0:09:51

0:09:51

0:29:42

0:29:42

0:04:00

0:04:00

0:02:15

0:02:15

0:04:52

0:04:52