filmov.tv

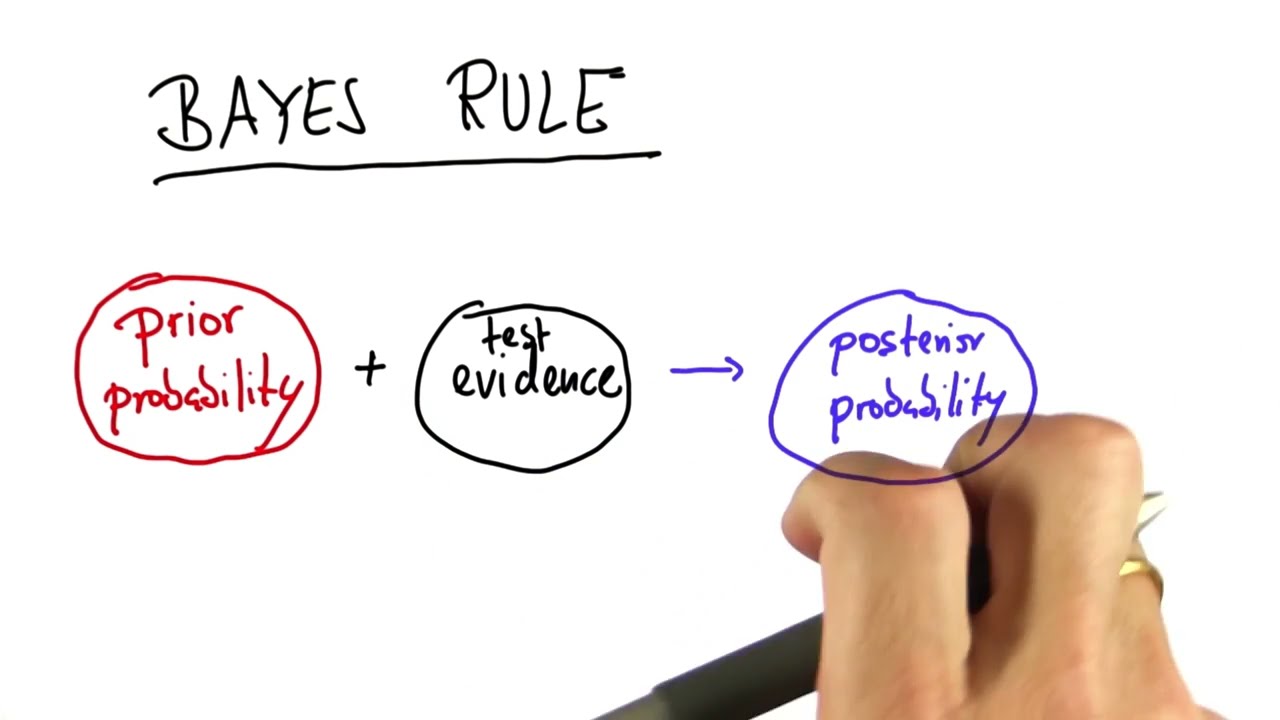

Prior And Posterior - Intro to Statistics

In this video from Udacity's Intro to Statistics course, Udacity founder and AI/ML (artificial intelligence and machine learning) pioneer Sebastian Thrun introduces prior and posterior probabilities.

---

Connect with us on social! 🌐

Prior And Posterior - Intro to Statistics

26 - Prior and posterior predictive distributions - an introduction

Prior And Posterior Solution - Intro to Statistics

Introduction to Bayesian statistics, part 1: The basic concepts

Prior and Posterior Probabilities in Bayesian Networks

Bayes theorem, the geometry of changing beliefs

Lecture 10a - Prior and Posterior Probability

Posterior Probabilities - Intro to Machine Learning

Posterior Probabilities - Intro to Machine Learning

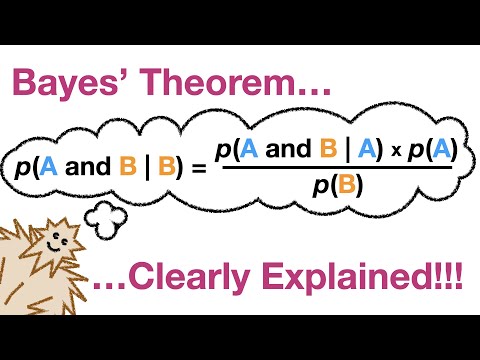

Bayes' Theorem, Clearly Explained!!!!

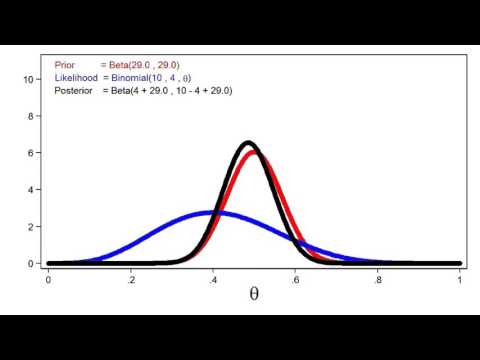

Prior and Posterior Distributions

Assisted Lithology Interpretation – Prior & Posterior Probability

Bayesian posterior sampling

Posterior Density - Introduction

Bayes' Theorem - The Simplest Case

Bayesian statistics - Summarizing a posterior distribution

General Expression for The Posterior Density

An example of how an improper prior leads to an improper posterior

3. Bayesian Posterior Distributions & Conjugate Priors

Bayesian posterior probabilities for one-sided alternative hypotheses

Prior and Posterior Distribution (Poisson & Exponential Distribution)

You Know I'm All About that Bayes: Crash Course Statistics #24

Posterior Density with Uni-Modal Prior

What is a posterior predictive check and why is it useful?

Комментарии

0:02:20

0:02:20

0:05:44

0:05:44

0:00:27

0:00:27

0:09:12

0:09:12

0:11:51

0:11:51

0:15:11

0:15:11

0:08:37

0:08:37

0:00:21

0:00:21

0:00:34

0:00:34

0:14:00

0:14:00

0:28:36

0:28:36

0:01:01

0:01:01

0:07:23

0:07:23

0:02:15

0:02:15

0:05:31

0:05:31

0:06:41

0:06:41

0:03:59

0:03:59

0:09:41

0:09:41

0:13:14

0:13:14

0:03:28

0:03:28

0:04:52

0:04:52

0:12:05

0:12:05

0:13:15

0:13:15

0:11:16

0:11:16