Managing Spark Partitions | Spark Tutorial | Spark Interview Question

#Apache #BigData #Spark #Partitions #Shuffle #Stage #Internals #Performance #optimisation #DeepDive #Join

Please join my channel as a member to get additional benefits such as Big Data and Data Science materials, live streaming for members, and much more.

About us:

We are a technology consulting and training provider specializing in areas such as Machine Learning, AI, Spark, Big Data, NoSQL, graph databases, Cassandra, and the Hadoop ecosystem.

Visit us:

Twitter:

Thanks for watching

Please subscribe! Like, share, and comment!

Spark Basics | Partitions

Managing Spark Partitions | Spark Tutorial | Spark Interview Question

Apache Spark : Managing Spark Partitions with Coalesce and Repartition

Spark Executor Core & Memory Explained

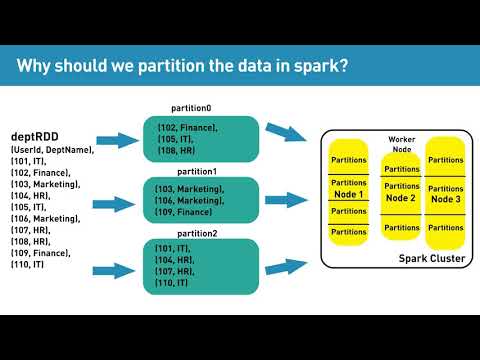

Why should we partition the data in spark?

Trending Big Data Interview Question - Number of Partitions in your Spark Dataframe

Apache Spark Core—Deep Dive—Proper Optimization Daniel Tomes Databricks

How Spark Creates Partitions || Spark Parallel Processing || Spark Interview Questions and Answers

Modernising data analytics with Databricks

Spark Join and shuffle | Understanding the Internals of Spark Join | How Spark Shuffle works

Basics of Apache Spark | Shuffle Partition [200] | learntospark

Shuffle Partition Spark Optimization: 10x Faster!

All about partitions in spark

Spark [Executor & Driver] Memory Calculation

Demo: Spark 3 Dynamic Partition Pruning

Partition vs bucketing | Spark and Hive Interview Question

Lessons From the Field: Applying Best Practices to Your Apache Spark Applications - Silvio Fiorito

Apache Spark Partitions Introduction

How Does Spark Partition the Data | Hadoop Interview Questions and Answers | Spark Partitioning

Determining the number of partitions

Spark Out of Memory Issue | Spark Memory Tuning | Spark Memory Management | Part 1

Spark performance optimization Part1 | How to do performance optimization in spark

Partitions and CPU Core Allocation in Apache Spark

Spark Application | Partition By in Spark | Chapter - 2 | LearntoSpark
