
Spark Application | Partition By in Spark | Chapter - 2 | LearntoSpark


In this video, we will learn about the partitionBy method of the Spark DataFrameWriter. We will walk through a demo of saving data partitioned on a date column using PySpark.
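In PySpark, a partitioned write like the one in the demo looks roughly like `df.write.partitionBy("date").csv(path)`. The sketch below shows the directory layout that call produces on disk without needing a Spark installation. It uses only the Python standard library. The helper `write_partitioned`, the sample `sales` rows and the `part-00000.csv` file name are hypothetical. Real Spark writes one or more `part-*` files per directory with generated names, plus a `_SUCCESS` marker.

```python
import csv
import os
import tempfile
from collections import defaultdict


def write_partitioned(rows, partition_col, base_dir):
    """Hypothetical helper: group rows by partition_col and write each group
    as a CSV file under a Hive-style `partition_col=value` subdirectory,
    mimicking the layout produced by Spark's DataFrameWriter.partitionBy."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[partition_col]].append(row)

    for value, group in groups.items():
        # One directory per distinct partition value, e.g. date=2021-01-01
        part_dir = os.path.join(base_dir, f"{partition_col}={value}")
        os.makedirs(part_dir, exist_ok=True)
        # As in Spark, the partition column is encoded in the path and
        # is not repeated inside the data file.
        fields = [k for k in group[0] if k != partition_col]
        # Simplified file name; Spark generates names like part-00000-<uuid>.csv
        with open(os.path.join(part_dir, "part-00000.csv"), "w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=fields)
            writer.writeheader()
            for row in group:
                writer.writerow({k: row[k] for k in fields})
    return sorted(os.listdir(base_dir))


# Hypothetical sample data with a date column to partition on
sales = [
    {"date": "2021-01-01", "item": "apple", "qty": "3"},
    {"date": "2021-01-01", "item": "pear", "qty": "5"},
    {"date": "2021-01-02", "item": "apple", "qty": "2"},
]

out_dir = tempfile.mkdtemp()
print(write_partitioned(sales, "date", out_dir))
# ['date=2021-01-01', 'date=2021-01-02']
```

Because the date value lives in the directory name, a later read that filters on date can skip non-matching directories entirely instead of scanning every file.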

Blog link to learn more on Spark:

Linkedin profile:

FB page:

Github:


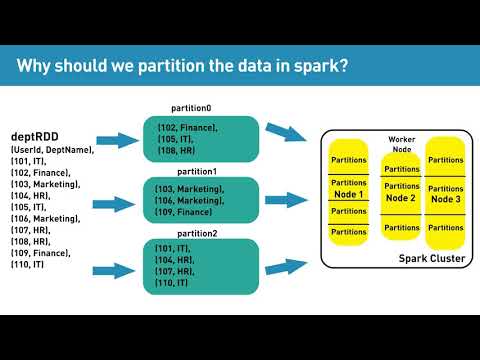

