filmov

tv

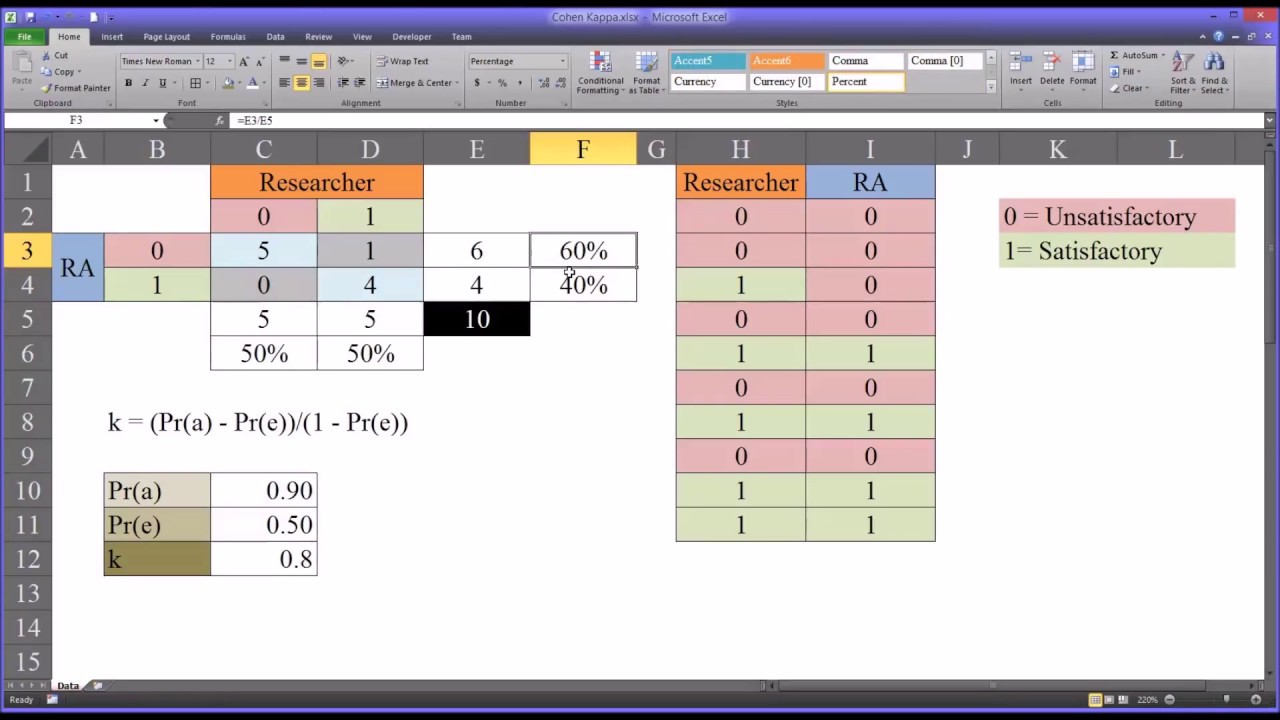

Calculating and Interpreting Cohen's Kappa in Excel


This video demonstrates how to estimate inter-rater reliability with Cohen's Kappa in Microsoft Excel. It also reviews how to calculate sensitivity and specificity.
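The following is a minimal Python sketch of the two calculations the video covers. It assumes two raters who each assign one category to the same set of items, coded here as 1 = positive and 0 = negative. Cohen's kappa compares the observed agreement p_o with the agreement expected by chance p_e: κ = (p_o - p_e) / (1 - p_e). For sensitivity and specificity, the sketch assumes one rater's codes (or a reference standard) are treated as the truth. The function names, the 0/1 coding and the example data are illustrative assumptions, not taken from the video.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters coding the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters chose the same category.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each category, multiply the two raters' marginal
    # proportions, then sum over all categories either rater used.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    categories = count_a.keys() | count_b.keys()
    p_e = sum(count_a[c] * count_b[c] for c in categories) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


def sensitivity_specificity(test, reference, positive=1):
    """Sensitivity and specificity of `test`, treating `reference` as the truth."""
    pairs = list(zip(test, reference))
    tp = sum(t == positive and r == positive for t, r in pairs)  # true positives
    fn = sum(t != positive and r == positive for t, r in pairs)  # false negatives
    tn = sum(t != positive and r != positive for t, r in pairs)  # true negatives
    fp = sum(t == positive and r != positive for t, r in pairs)  # false positives
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("reference must contain both positive and negative items")
    return tp / (tp + fn), tn / (tn + fp)


if __name__ == "__main__":
    # Hypothetical data: 50 items coded 0/1 by two raters.
    rater_a = [1] * 20 + [1] * 5 + [0] * 10 + [0] * 15
    rater_b = [1] * 20 + [0] * 5 + [1] * 10 + [0] * 15
    print(round(cohens_kappa(rater_a, rater_b), 3))  # 0.4
    sens, spec = sensitivity_specificity(rater_a, rater_b)
    print(round(sens, 3), round(spec, 3))  # 0.667 0.75
```

In the example, both raters code 20 items positive and 15 negative, and they disagree on 15 items, so p_o = 0.70. Chance agreement is p_e = (25 × 30 + 25 × 20) / 50² = 0.50, which gives κ = 0.4. In a spreadsheet, the same four cell counts could be tallied with COUNTIFS over the two rating columns before applying the formula.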

Kappa Value Calculation | Reliability


Cohen's Kappa (Inter-Rater-Reliability)

How to calculate Kappa Statistic? Where to use Kappa? How to interpret and calculate in SPSS?

Kappa Coefficient

Cohen's Kappa: Guidelines for Interpretation

Calculate and interpret Cohen's kappa coefficient in SPSS

Weighted Cohen's Kappa (Inter-Rater-Reliability)

What Is And How To Calculate Cohen's d?

Cohen's Kappa

Kappa Measure of Agreement in SPSS

StatHand - Interpreting Cohen's kappa in SPSS

How to calculate Cohen’s Kappa in SPSS

Estimating Inter-Rater Reliability with Cohen's Kappa in SPSS

SPSS Tutorial: Cohen's Kappa

StatHand - Calculating and interpreting a weighted kappa in SPSS

Cohens Kappa

What is Cohen's Kappa?

Cohen's kappa

Cohen's Kappa Coefficient|Statistical Measure| Classification performance evaluation

Kappa - SPSS (part 1)

Compute Cohen Kappa Score | Kappa Statistic | Kappa Score Binary & Multiclass in ML by Mahesh Hu...

StatHand - Calculating Cohen's kappa in SPSS

Calculating Kappa Reliability (SportsCode & Excel)
