How to use read and write streams in node.js for BIG CSV files


An overview of how to use read and write streams in Node.js to read a very large CSV file and process it quickly.
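The description does not include the code from the video, so the following is only a minimal sketch of the general idea, not the author's implementation. It reads a CSV file line by line through a read stream and writes each transformed row to a write stream, so the whole file is never held in memory. The names `processCsv` and `transformRow` are hypothetical, and the naive `split(',')` assumes no quoted fields containing commas or newlines; a real CSV parser would be needed for those.

```javascript
const fs = require('node:fs');
const readline = require('node:readline');
const { once } = require('node:events');

// Hypothetical helper (not from the video): streams inputPath to outputPath,
// applying transformRow(fields, rowIndex) to every non-empty line.
// Assumption: fields never contain quoted commas or embedded newlines.
async function processCsv(inputPath, outputPath, transformRow) {
  const input = fs.createReadStream(inputPath, { encoding: 'utf8' });
  const output = fs.createWriteStream(outputPath, { encoding: 'utf8' });

  // readline splits the incoming chunks into lines; crlfDelay: Infinity
  // treats "\r\n" as a single line break.
  const lines = readline.createInterface({ input, crlfDelay: Infinity });

  let rowCount = 0;
  for await (const line of lines) {
    if (line === '') continue; // skip blank lines

    const fields = line.split(',');
    const outLine = transformRow(fields, rowCount).join(',') + '\n';
    rowCount += 1;

    // write() returns false when the internal buffer is full.
    // Waiting for 'drain' keeps memory use bounded on very large files.
    if (!output.write(outLine)) {
      await once(output, 'drain');
    }
  }

  // Flush remaining data and wait until everything has been written.
  output.end();
  await once(output, 'finish');
  return rowCount;
}

// Example usage (file names are placeholders):
// processCsv('big.csv', 'out.csv', (fields, i) =>
//   i === 0 ? fields : fields.map((f) => f.trim())
// ).then((n) => console.log(`processed ${n} rows`));
```

Pausing on the `'drain'` event is the design choice that matters for big files: without it, a fast reader can queue rows in memory faster than the disk can accept them.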


How to remember what you read (and actually use it to improve your life)

Open PDFs in Edge to use Read Aloud

How To Use Google Read And Write!

How to Read and Use an Engineer's Scale for Beginners

How to use Read & Write Google Chrome Web Extension - Tutorial for Teachers (2020)

How to use Windows 10's Narrator to read your screen?

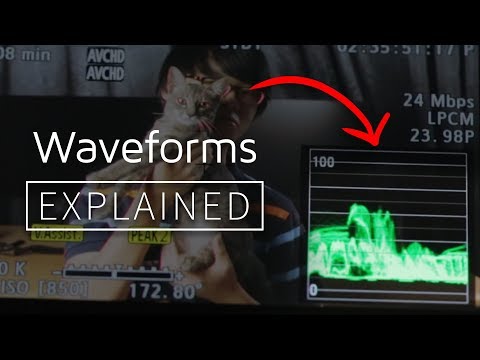

Explained! - How to Read and Use Waveforms

Bionic Reading: Read x2 Faster | How to Use it on Your Phone and Browser

(English) How to use Read and Write to Hear Text Read Aloud

How to Read a Digital Multimeter - How to Use a Digital Multimeter

How To Read and Use A Stopwatch

How to use read and write streams in node.js for BIG CSV files

How to Install and Use Read Aloud Text-to-Speech Free Google Chrome Extension - Read Aloud Tutorial

How to use SNAP & READ

Super Tips and Practice Questions for Read & Select! Duolingo English Test

How to Use and Read a Digital Caliper

How to Use the Read & Write App with Google Docs

HOW TO READ AND USE THE SCALE RULER.

How to Read a Multimeter - How to Use an Analog Multimeter

How to Read and Use an Architect's Scale for Beginners

Trimmer Trouble? Let me help! How to read and use a paper trimmer, start to finish!

Lenormand 5-Card Spread for Beginners: How to Read and Use It

How to Read Micrometer| How to use Micrometer| Micrometer 😲😲😲💥💥💥

✅ How To Use Read Aloud In Microsoft Word 🔴
