Efficiently Tracking Memory Consumption in Python Scripts During Execution

Discover effective strategies and techniques for monitoring memory usage in Python so you can process large amounts of data without running out of memory.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Tracking memory consumption in python script during execution

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

Handling large datasets in Python can be daunting, especially when the data exceeds the available memory. If you need to process more than 500 GB of data, you need a way to monitor and manage memory consumption while the script runs. This guide shows how to track memory usage in Python and keep it under control so your scripts do not hit memory limits.

The Challenge of Memory Management

When working with large datasets, the challenge lies in loading and processing the data in small chunks so that memory never fills up. Even if you clear lists and arrays after each chunk, memory consumption may still keep rising. This often points to a memory leak: memory that stays allocated because some part of the program still holds a reference to it, so it is never released.

Here are some common issues you'll face:

Increased memory usage after processing each chunk.

Performance degradation as your script continues running.

Memory leaks that are hard to pinpoint.

Introducing tracemalloc for Memory Tracking

Fortunately, Python provides a powerful tool for tracking memory usage: the tracemalloc module. Available since Python 3.4, this standard-library module traces the memory blocks allocated by Python, so you can see how much memory your script is using and which lines of code allocated it.

Key Features of tracemalloc

Snapshot Creation: Capture memory usage at specific points in your script.

Traceback Information: Get insights on where memory allocations occur, helping you identify potential leaks.

Memory Growth Analysis: Compare two snapshots to see which lines of code account for growth in memory consumption over time.
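As an illustration of the traceback feature, here is a minimal sketch. The function `build_cache` and its sizes are hypothetical and exist only to give the tracer something to find; the tracemalloc calls are from the standard library.

```python
import tracemalloc

# Keep up to 10 stack frames per allocation (the default is 1).
tracemalloc.start(10)


def build_cache():
    # Hypothetical function that allocates a noticeable amount of memory.
    return [bytearray(1024) for _ in range(2_000)]


cache = build_cache()

snapshot = tracemalloc.take_snapshot()

# Group allocations by full traceback; results are sorted largest first.
largest = snapshot.statistics("traceback")[0]

print(f"{largest.count} blocks, {largest.size / 1024:.1f} KiB")
for line in largest.traceback.format():
    print(line)
```

Storing more frames per allocation gives more context but also increases the overhead of tracing, so a small frame count is usually a reasonable starting point.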

How to Use tracemalloc

To integrate tracemalloc into your script, follow these simple steps:

Import the Module

[[See Video to Reveal this Text or Code Snippet]]

Start Tracking Memory Usage

At the beginning of your script, call:

[[See Video to Reveal this Text or Code Snippet]]
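The original snippets for these first two steps are not reproduced here. A minimal sketch of importing the module and starting the tracer might look like the following; the `data` workload is a hypothetical stand-in for your own code.

```python
import tracemalloc

# Start tracing as early as possible: allocations made before this call
# are not recorded.
tracemalloc.start()

# Hypothetical workload standing in for your own processing code.
data = [bytes(1000) for _ in range(1000)]

# get_traced_memory() returns (current, peak) sizes in bytes.
current, peak = tracemalloc.get_traced_memory()
print(f"current={current / 1024:.1f} KiB, peak={peak / 1024:.1f} KiB")
```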

Take Snapshots

To create a snapshot, use:

[[See Video to Reveal this Text or Code Snippet]]
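A snapshot call could be sketched as below. This is an assumption about what the step looks like, not the original snippet, and the `chunk` list is a hypothetical stand-in for one processed chunk.

```python
import tracemalloc

tracemalloc.start()

# Hypothetical stand-in for one processed chunk of data.
chunk = [str(i) for i in range(10_000)]

# Capture the traces of all memory blocks currently allocated by Python.
snapshot = tracemalloc.take_snapshot()

# Sum the traced size over all source files as a quick sanity check.
total_bytes = sum(stat.size for stat in snapshot.statistics("filename"))
print(f"traced total: {total_bytes / 1024:.1f} KiB")
```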

Analyze Memory Usage

After processing your data chunks, you can analyze the snapshot to see where memory is being allocated:

[[See Video to Reveal this Text or Code Snippet]]
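One way to analyze snapshots is sketched below, assuming you take one snapshot before the chunk loop and one after it. The `retained` list is a hypothetical accumulator that simulates a leak by keeping part of every chunk alive.

```python
import tracemalloc

tracemalloc.start()
before = tracemalloc.take_snapshot()

retained = []  # hypothetical accumulator that keeps growing
for chunk_id in range(5):
    chunk = [float(i) for i in range(20_000)]
    # Simulated leak: a slice of every chunk stays referenced.
    retained.append(chunk[:1_000])

after = tracemalloc.take_snapshot()

# Largest allocation sites in the latest snapshot, grouped by source line.
top_stats = after.statistics("lineno")
for stat in top_stats[:3]:
    print(stat)

# Growth between the two snapshots, largest change first.
top_diffs = after.compare_to(before, "lineno")
for diff in top_diffs[:3]:
    print(diff)
```

Comparing snapshots taken at the same point in successive iterations tends to be more informative than a single snapshot, because lines whose size keeps increasing stand out.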

Stop Tracking (Optional)

If you want to stop tracking memory at any point in your script, you can call:

[[See Video to Reveal this Text or Code Snippet]]
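Stopping the tracer could be sketched as follows. Note that `tracemalloc.stop()` also clears the traces collected so far, so any snapshot you want to keep has to be taken first. The `payload` list is a hypothetical workload.

```python
import tracemalloc

tracemalloc.start()

# Hypothetical workload.
payload = [bytes(512) for _ in range(1_000)]

# Take the snapshot before stopping: stop() discards collected traces.
snapshot = tracemalloc.take_snapshot()

tracemalloc.stop()
still_tracing = tracemalloc.is_tracing()

# A snapshot taken earlier can still be analyzed after tracing has stopped.
stats_after_stop = snapshot.statistics("lineno")
print(still_tracing, len(stats_after_stop))
```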

Best Practices for Memory Management

While tracemalloc is a great tool to track memory usage, optimizing memory consumption requires a combination of strategies:

Process Data in Chunks: Load and process chunks rather than the entire dataset.

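Clear Unused Variables: Use the del statement to remove references to objects you no longer need. Python can release an object's memory only once nothing references it anymore, so make sure no other list, dict, or cache still holds it.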

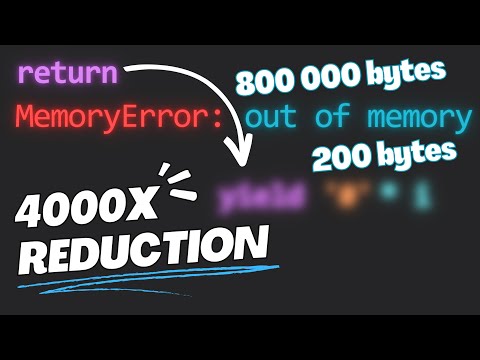

Use Generators: Instead of loading all data into memory, consider using generators which yield data on-the-fly.

Profile Your Code: Use profiling tools to find bottlenecks in your code.
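The practices above can be combined in a short sketch. Everything here is illustrative: `read_chunks` is a hypothetical generator over an in-memory range, standing in for whatever reads your real data, and the sizes are arbitrary.

```python
import tracemalloc


def read_chunks(total_rows, chunk_size):
    """Yield one list of rows at a time (hypothetical in-memory data source)."""
    for start in range(0, total_rows, chunk_size):
        stop = min(start + chunk_size, total_rows)
        yield list(range(start, stop))


tracemalloc.start()

running_total = 0
usage_per_chunk = []

for chunk in read_chunks(total_rows=100_000, chunk_size=10_000):
    running_total += sum(chunk)
    # Drop the reference so the list can be reclaimed before the
    # generator builds the next chunk.
    del chunk
    current, _peak = tracemalloc.get_traced_memory()
    usage_per_chunk.append(current)

tracemalloc.stop()

print(running_total)
print([f"{used / 1024:.0f} KiB" for used in usage_per_chunk])
```

If the values in `usage_per_chunk` stay roughly flat, each chunk is being released; a steady climb suggests that something is still holding references between iterations.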

Conclusion

Tracking and optimizing memory usage in Python when working with large datasets doesn't have to be overwhelming. With tracemalloc, you can narrow down where memory leaks originate and see which parts of your program allocate the most memory. Combine this with chunk processing and the other best practices, and you'll be well on your way to handling vast amounts of data without your script running out of memory.

By implementing these techniques, you can significantly enhance the performance and reliability of your Python application. Don’t let memory management be an obstacle; use these tools and tips to smoothly sail through your data processing tasks.
