filmov.tv

Inserting 1 BILLION rows to a Timescale Database 💀 #ad #programming #software #technology #code

How To Load One BILLION Rows into an SQL Database

Querying 100 Billion Rows using SQL, 7 TB in a single table

how to load one billion rows into an sql database

Processing 1 Billion Rows Per Second

Best Practices Working with Billion-row Tables in Databases

Hive Processes One Billion Rows

Display numbers in Millions in Excel #shorts

The Billion Rows Excel Challenge

🚨 GET RICH 'FAST' IN GROW A GARDEN ROBLOX #growagarden #roblox #robloxfyp

Databases: Database design for handling 1 billion rows and counting

Firebolt presents: 42 Billion Rows of Data in 1.3 Seconds ⚡

How to get robux 🤑 #Roblox #Shorts

Taras Kloba - Analyzing 100 billion rows in 30 seconds with Google BigQuery - SQL Saturday Kharkiv

SQL indexing best practices | How to make your database FASTER!

The LONGEST Comment On YouTube!

Stay AWAY From Fire Ants 😨 #viral

How to make billions in Grow A Garden 🤑 #roblox #growagarden

Did I Just Get The “MOST EXPENSIVE” FRUIT in GROW A GARDEN on ROBLOX?

Comma Style (Use of Thousand Separator in Excel 🔥| #excel #exceltutorial #exceltips #microsoftexcel...

Databases: PostgreSQL - 1 billion rows table cannot get vacuumed despite no errors

Databases: Can PostgreSQL manage a table with 1.5 billion rows?

6 MUTATIONS in 1 Fruit! How much will it sell for? 🤔

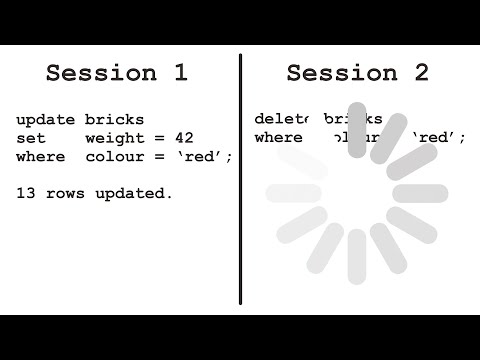

How to Make Inserts, Updates, and Deletes Faster: Databases for Developers: Performance #8
