filmov

tv

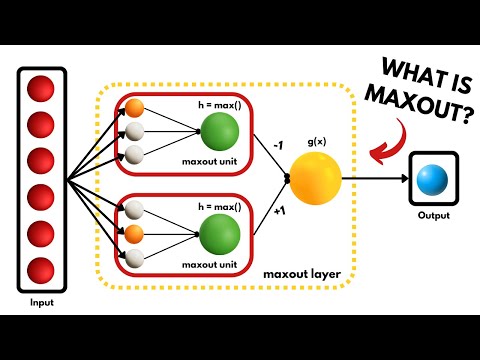

What is MaxOut in Deep Learning?


MaxOut is a technique introduced by Ian Goodfellow and colleagues in 2013 in which each unit can learn its own activation function.

In this tutorial, we'll review what a MaxOut network is and how it's constructed, and check its relevance to more modern architectures.
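As a rough illustration of how a unit can "learn" an activation function, here is a minimal pure-Python sketch of a single maxout unit, which outputs the maximum over several linear pieces. The function name `maxout_unit` and the hand-picked weights are assumptions made for this example. In a real network the weights would be learned during training rather than set by hand.

```python
def maxout_unit(x, weights, biases):
    """Return max_j (w_j . x + b_j) for one maxout unit with k linear pieces."""
    return max(
        sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
        for w, b in zip(weights, biases)
    )

# Two hand-picked pieces, x and 0, reproduce ReLU: max(x, 0).
relu_weights, relu_biases = [[1.0], [0.0]], [0.0, 0.0]
# Two pieces, x and -x, reproduce the absolute value: max(x, -x).
abs_weights, abs_biases = [[1.0], [-1.0]], [0.0, 0.0]

inputs = [-2.0, -0.5, 0.0, 1.5]
relu_outputs = [maxout_unit([v], relu_weights, relu_biases) for v in inputs]
abs_outputs = [maxout_unit([v], abs_weights, abs_biases) for v in inputs]
```

With more pieces, the same unit can approximate any convex piecewise-linear function of its input, which is why it is described as learning its activation.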

# Table of Contents

- What is Dropout: 0:00

- Dropout vs Stochastic Gradient Descent: 0:52

- What is MaxOut?: 1:27

- MaxOut can learn Activation Functions: 2:45

- MaxOut is a Universal Approximator: 4:02

- MaxOut Performance across Benchmarks: 4:41

- MaxOut vs Rectifiers: 5:50

- Conclusion: 8:08

To learn more about MaxOut, here are a few interesting links:

Abstract:

"We consider the problem of designing models to leverage a recently introduced approximate model averaging technique called dropout.

We define a simple new model called maxout (so named because its output is the max of a set of inputs, and because it is a natural companion to dropout) designed to both facilitate optimization by dropout and improve the accuracy of dropout's fast approximate model averaging technique.

We empirically verify that the model successfully accomplishes both of these tasks. We use maxout and dropout to demonstrate state of the art classification performance on four benchmark datasets: MNIST, CIFAR-10, CIFAR-100, and SVHN."
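For reference, the "max of a set of inputs" mentioned in the abstract can be written out as follows. This is a sketch of the maxout hidden layer in the notation commonly used for it, where $x \in \mathbb{R}^d$ is the input, $k$ is the number of linear pieces per unit, and $W \in \mathbb{R}^{d \times m \times k}$ and $b \in \mathbb{R}^{m \times k}$ are learned parameters for a layer of $m$ units:

$$
h_i(x) = \max_{j \in [1, k]} z_{ij}, \qquad z_{ij} = x^\top W_{\cdot ij} + b_{ij}
$$

Each unit $h_i$ takes the largest of its $k$ affine functions of the input, so there is no fixed nonlinearity applied afterwards.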

----


Have a great week! 👋
