Optimizing Gradient Descent: How to Choose the Best Learning Rate (Step-by-Step Guide) 🚀


Welcome to our channel! 🚀 In this video, we look at how to choose a good learning rate for gradient descent in your specific application. Before getting into the details, let's quickly recap what the learning rate, or alpha, is. Take a moment to recall it before we proceed.

The learning rate (alpha) sets the size of the steps taken by the gradient descent algorithm. A larger alpha means larger steps, which can lead to faster convergence. Be careful, though: if alpha is too large, the updates can overshoot the minimum, so the cost oscillates or grows and the algorithm fails to converge.
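To make the effect of alpha concrete, here is a minimal sketch in Python. It is not taken from the video: the cost function f(w) = w², the starting point, the step count, and the three alpha values are all assumptions chosen for illustration, and `gradient_descent` is a hypothetical helper name.

```python
def gradient_descent(alpha, w0=1.0, steps=20):
    """Minimize the toy cost f(w) = w**2 with a fixed learning rate alpha.

    The cost function, starting point w0, and step count are illustrative
    assumptions, not values from the video.
    """
    w = w0
    costs = [w ** 2]           # cost before any update
    for _ in range(steps):
        grad = 2.0 * w         # derivative of w**2
        w = w - alpha * grad   # gradient descent update
        costs.append(w ** 2)   # cost after this update
    return w, costs


if __name__ == "__main__":
    # A small, a moderate, and a too-large learning rate (assumed values).
    for alpha in (0.01, 0.1, 1.1):
        _, costs = gradient_descent(alpha)
        print(f"alpha={alpha}: start cost {costs[0]:.3e}, final cost {costs[-1]:.3e}")
```

On this toy problem the small alpha lowers the cost slowly, the moderate alpha lowers it much faster, and the too-large alpha makes the cost grow at every step. Printing or plotting the cost per iteration for a few candidate values is one simple way to compare learning rates.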

With this process, you can choose a suitable learning rate with confidence. Stay tuned for our next video, where we'll cover a useful trick for improving gradient descent: scaling our features. If you found this video helpful, consider subscribing for more insightful content! 📈🧠 #MachineLearning #GradientDescent #LearningRate #DataScience


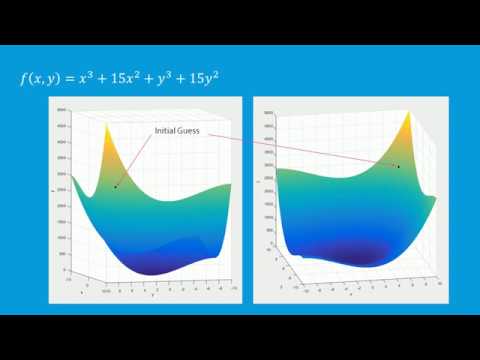

