filmov

tv

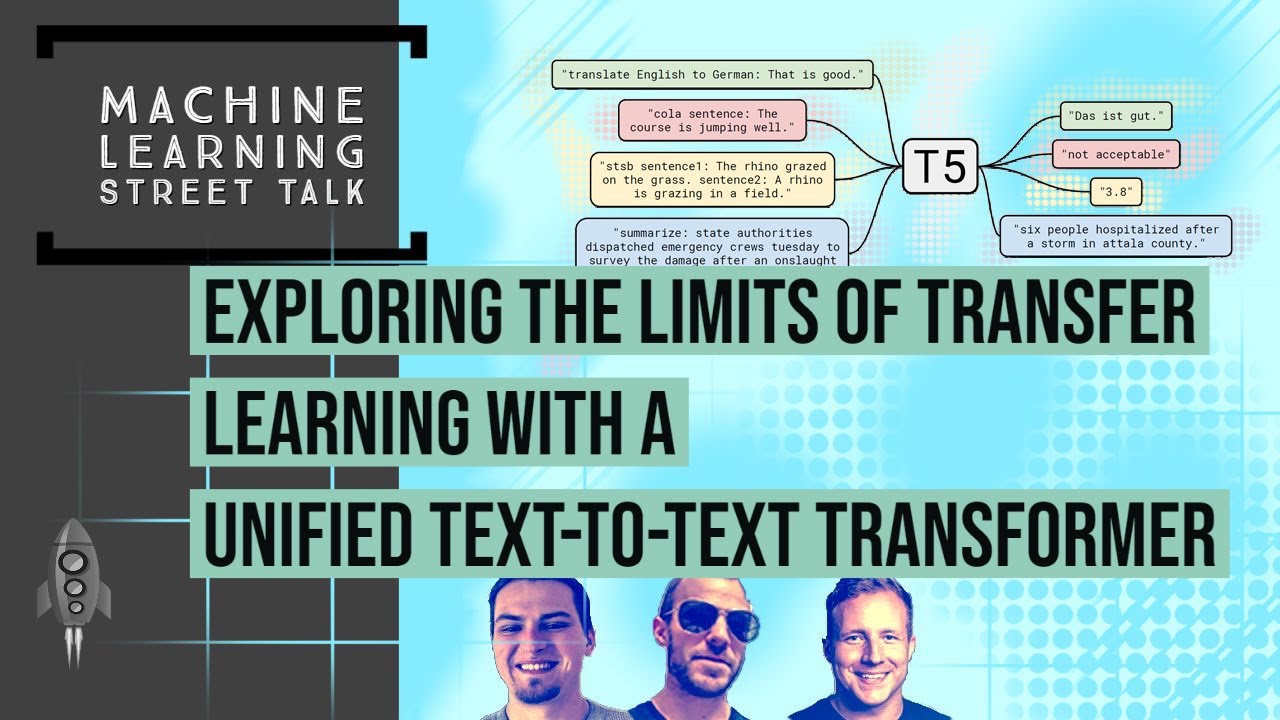

Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer

In this episode of Machine Learning Street Talk, we chat about large-scale transfer learning in natural language processing. The Text-to-Text Transfer Transformer (T5) paper from Google AI presents an exhaustive survey of what matters for transfer learning in NLP and what doesn't. In this conversation, we go through the paper's key takeaways: the text-to-text input/output format, architecture choice, dataset size and composition, fine-tuning strategy, and how best to use more computation.

Starting from these topics, we branch out into exciting ideas such as embodied cognition, meta-learning, and the measure of intelligence. We are still early in our podcast journey and really appreciate any feedback from our listeners. Is the chat too technical? Do you prefer group discussions, interviews with experts, or chats between the three of us? Thanks for watching, and if you haven't already, please subscribe!

Links to papers discussed in the chat:
