Advanced RAG with Llama 3 in Langchain | Chat with PDF using Free Embeddings, Reranker & LlamaParse

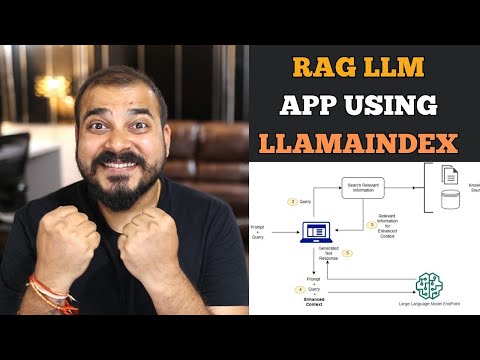

Let's build an advanced Retrieval-Augmented Generation (RAG) system with LangChain! You'll learn how to "teach" a Large Language Model (Llama 3) to read a complex PDF document and answer questions about it. We'll break the process into simple steps: split the document into small chunks, convert the chunks into vector embeddings, and store them in a vector database (Qdrant) for fast retrieval. We'll build our RAG using only open models (Llama 3, FlagEmbedding & MS Marco reranker).
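To make the split, embed, and retrieve steps described above concrete, here is a minimal sketch that uses only the Python standard library. It is not the code from the video: the word-count "embedding" is a toy stand-in for a real embedding model such as FlagEmbedding, the in-memory list stands in for a vector database such as Qdrant, and the function names (`split_text`, `embed`, `cosine`, `top_k`) are hypothetical.

```python
import math
import re
from collections import Counter


def split_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character windows that overlap by `overlap` characters."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
        start += step
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a sparse word-count vector, not a neural model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are most similar to the query vector."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    docs = [
        "Revenue grew to 10 billion dollars",
        "The company hired new engineers",
        "Weather was sunny",
    ]
    print(top_k("revenue growth in billion dollars", docs, k=1))
```

The overlap between neighbouring chunks is a common design choice: a sentence cut at a chunk boundary still appears whole in at least one chunk, as long as it is shorter than the overlap.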

00:00 - Intro

00:43 - Our RAG Architecture

05:11 - Google Colab Setup

06:36 - Document Parsing with LlamaParse

09:07 - Text Splitting, Vector Embeddings & Vector DB (Qdrant)

13:26 - Reranking with FlashRank

14:45 - Q&A Chain with LangChain, Llama 3 and Groq API

16:32 - Chat with the PDF

21:30 - Conclusion
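The reranking and Q&A chapters listed above follow a two-stage pattern: a fast vector search returns candidate chunks, a reranker rescores each candidate against the question, and the best chunks are placed into the prompt for the LLM. The sketch below illustrates that pattern under loud assumptions. `rerank_score` is a toy heuristic standing in for a real reranker model (the video uses FlashRank with an MS Marco model), `build_prompt` is a hypothetical helper, and no LLM is called.

```python
import re


def tokens(text: str) -> list[str]:
    """Lowercase word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rerank_score(query: str, passage: str) -> float:
    """Toy stand-in (assumption) for a cross-encoder reranker.

    Unlike vector search, it looks at the query and the passage together:
    half the score is query-term coverage, half is matching word pairs.
    """
    q, p = tokens(query), tokens(passage)
    if not q or not p:
        return 0.0
    p_terms = set(p)
    coverage = sum(1 for t in q if t in p_terms) / len(q)
    p_bigrams = set(zip(p, p[1:]))
    q_bigrams = list(zip(q, q[1:]))
    phrase = (
        sum(1 for b in q_bigrams if b in p_bigrams) / len(q_bigrams)
        if q_bigrams
        else 0.0
    )
    return 0.5 * coverage + 0.5 * phrase


def rerank(query: str, candidates: list[str], top_n: int = 2) -> list[str]:
    """Keep the top_n candidates by rerank score, best first."""
    ranked = sorted(candidates, key=lambda c: rerank_score(query, c), reverse=True)
    return ranked[:top_n]


def build_prompt(question: str, contexts: list[str]) -> str:
    """Assemble a grounded Q&A prompt from the reranked chunks."""
    context_block = "\n\n".join(f"[{i}] {c}" for i, c in enumerate(contexts, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    candidates = [
        "Income was reported as net in the quarter",
        "Net income for the quarter was 5 million",
        "Employees enjoy the office",
    ]
    best = rerank("net income", candidates, top_n=2)
    print(build_prompt("What was the net income?", best))
```

Reranking only a small candidate set keeps the slower, more accurate scoring step cheap, which is why it usually sits after the vector search rather than replacing it.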

Join this channel to get access to the perks and support my work:

#artificialintelligence #langchain #chatbot #llama #chatgpt #llm
