Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer

Learn how to implement RAG (Retrieval Augmented Generation) from scratch, straight from a LangChain software engineer. This Python course teaches you how to use RAG to combine your own custom data with the power of Large Language Models (LLMs).

✏️ Course created by Lance Martin, PhD.

⭐️ Course Contents ⭐️

⌨️ (0:00:00) Overview

⌨️ (0:05:53) Indexing

⌨️ (0:10:40) Retrieval

⌨️ (0:15:52) Generation

⌨️ (0:22:14) Query Translation (Multi-Query)

⌨️ (0:28:20) Query Translation (RAG Fusion)

⌨️ (0:33:57) Query Translation (Decomposition)

⌨️ (0:40:31) Query Translation (Step Back)

⌨️ (0:47:24) Query Translation (HyDE)

⌨️ (0:52:07) Routing

⌨️ (0:59:08) Query Construction

⌨️ (1:05:05) Indexing (Multi Representation)

⌨️ (1:11:39) Indexing (RAPTOR)

⌨️ (1:19:19) Indexing (ColBERT)

⌨️ (1:26:32) CRAG

⌨️ (1:44:09) Adaptive RAG

⌨️ (2:12:02) The future of RAG

🎉 Thanks to our Champion and Sponsor supporters:

👾 davthecoder

👾 jedi-or-sith

👾 南宮千影

👾 Agustín Kussrow

👾 Nattira Maneerat

👾 Heather Wcislo

👾 Serhiy Kalinets

👾 Justin Hual

👾 Otis Morgan

👾 Oscar Rahnama

--

Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer

Local Retrieval Augmented Generation (RAG) from Scratch (step by step tutorial)

RAG From Scratch: Part 1 (Overview)

What is Retrieval-Augmented Generation (RAG)?

Learn RAG From Scratch – Spring AI Tutorial

RAG Explained

RAG from Scratch without any Frameworks

Building a RAG application from scratch using Python, LangChain, and the OpenAI API

Python RAG Tutorial (with Local LLMs): AI For Your PDFs

RAG + Langchain Python Project: Easy AI/Chat For Your Docs

Building a RAG application using open-source models (Asking questions from a PDF using Llama2)

Vector Search RAG Tutorial – Combine Your Data with LLMs with Advanced Search

Build your own RAG (retrieval augmented generation) AI Chatbot using Python | Simple walkthrough

4-Langchain Series-Getting Started With RAG Pipeline Using Langchain Chromadb And FAISS

'I want Llama3 to perform 10x with my private knowledge' - Local Agentic RAG w/ llama3

Build a Large Language Model AI Chatbot using Retrieval Augmented Generation

ADVANCED Python AI Agent Tutorial - Using RAG

Building Corrective RAG from scratch with open-source, local LLMs

Building Production-Ready RAG Applications: Jerry Liu

When Do You Use Fine-Tuning Vs. Retrieval Augmented Generation (RAG)? (Guest: Harpreet Sahota)

Easy 100% Local RAG Tutorial (Ollama) + Full Code

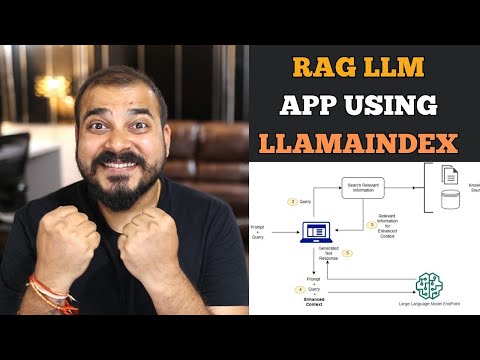

End to end RAG LLM App Using Llamaindex and OpenAI- Indexing and Querying Multiple pdf's

Building RAG based model using Langchain | rag langchain tutorial | rag langchain huggingface

RAG From Scratch: Part 3 (Retrieval)
