filmov

tv

Vectorized UDF: Scalable Analysis with Python and PySpark - Li Jin

Показать описание

Li Jin, a software engineer at Two Sigma shares a new type of Py Spark UDF: Vectorized UDF.

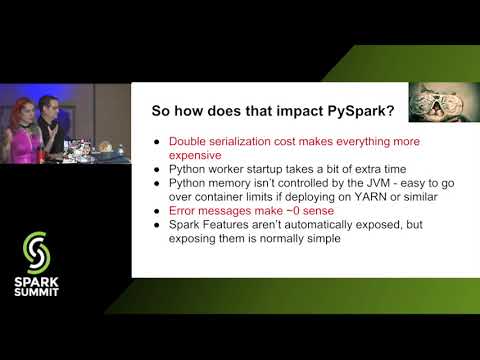

Over the past few years, Python has become the default language for data scientists. Packages such as pandas, numpy, statsmodel, and scikit-learn have gained great adoption and become the mainstream toolkits. At the same time, Apache Spark has become the de facto standard in processing big data. Spark ships with a Python interface, aka PySpark, however, because Spark’s runtime is implemented on top of JVM, using PySpark with native Python library sometimes results in poor performance and usability.

Vectorized UDF is built on top of Apache Arrow and bring you the best of both worlds – the ability to define easy to use, high performance UDFs and scale up your analysis with Spark.

About: Databricks provides a unified data analytics platform, powered by Apache Spark™, that accelerates innovation by unifying data science, engineering and business.

Connect with us:

Over the past few years, Python has become the default language for data scientists. Packages such as pandas, numpy, statsmodel, and scikit-learn have gained great adoption and become the mainstream toolkits. At the same time, Apache Spark has become the de facto standard in processing big data. Spark ships with a Python interface, aka PySpark, however, because Spark’s runtime is implemented on top of JVM, using PySpark with native Python library sometimes results in poor performance and usability.

Vectorized UDF is built on top of Apache Arrow and bring you the best of both worlds – the ability to define easy to use, high performance UDFs and scale up your analysis with Spark.

About: Databricks provides a unified data analytics platform, powered by Apache Spark™, that accelerates innovation by unifying data science, engineering and business.

Connect with us:

Vectorized UDF: Scalable Analysis with Python and PySpark - Li Jin

Vectorized Pandas UDF in Spark | Apache Spark UDF | Part - 3 | LearntoSpark

4.5 Spark vectorized UDF | Pandas UDF | Spark Tutorial

Eng & Kwon - Scaling data workloads using the best of both worlds: pandas and Spark

🎯PySpark with Pandas UDFs 🎯Tips📕🐍 #python

Leveraging Apache Spark for Scalable Data Prep and Inference in Deep Learning

PySpark Examples - User Defined Function (UDF) - Spark SQL

Making PySpark Amazing—From Faster UDFs to Graphing! (Holden Karau and Bryan Cutler)

Scaling Genetic Data Analysis with Apache Spark - Jonathan Bloom and Timothy Poterba

PySpark UDFs - performance considerations by Andrzej Lewcun

PandasUDFs: One Weird Trick to Scaled Ensembles

Pandas UDF and Python Type Hint in Apache Spark 3.0

Location based crime data search and analysis with Spark UDF

User Defined Aggregation in Apache Spark: A Love Story

Enabling Vectorized Engine in Apache Spark

Is PySpark UDF is Slow? Why ?

How to create UDF using PySpark in English |Hands-On|Spark Tutorial for Beginners| DM | DataMaking

Creating UDF and use with Spark SQL

Improving Python and Spark Performance and Interoperability with Apache Arrow

Felix Cheung - Scalable Data Science in Python and R on Apache Spark

Spark UDF | User Defined Functions in Apache Spark | How to create and use UDF in Spark

spark udf dataframe

Accelerating Data Processing in Spark SQL with Pandas UDFs

Vectorized Query Execution in Apache Spark at Facebook Chen Yang Facebook

Комментарии

0:29:11

0:29:11

0:07:34

0:07:34

0:09:41

0:09:41

0:36:09

0:36:09

0:00:38

0:00:38

0:25:53

0:25:53

0:06:23

0:06:23

0:30:50

0:30:50

0:29:29

0:29:29

0:41:38

0:41:38

0:37:32

0:37:32

0:22:22

0:22:22

0:10:15

0:10:15

0:20:51

0:20:51

0:21:59

0:21:59

0:05:54

0:05:54

0:09:16

0:09:16

0:10:00

0:10:00

0:28:14

0:28:14

0:42:03

0:42:03

0:09:01

0:09:01

0:03:43

0:03:43

0:27:26

0:27:26

0:32:14

0:32:14