
CPU Pinning in Proxmox: Configuration and Performance testing


CPU pinning locks a VM's virtual CPUs to specific host CPU threads. Although Proxmox does not officially support it, CPU pinning can be configured with standard Linux tools. I test the performance impact CPU pinning can have and discuss when it makes sense to use it.
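The description does not name the Linux tools used. One common approach is `taskset -a -cp <cpu-list> <pid>`, which sets the CPU affinity of every thread of a running process. The Python code here is a minimal sketch of the same idea using `os.sched_setaffinity`. It assumes the Proxmox host stores the QEMU PID of VM `<vmid>` in `/var/run/qemu-server/<vmid>.pid`. The helper names `parse_cpu_list`, `qemu_pid` and `pin_all_threads` are hypothetical. The sketch restricts all of the VM's threads to a set of host CPUs. It does not map each vCPU one-to-one onto a single core.

```python
import os
from pathlib import Path


def parse_cpu_list(spec: str) -> set[int]:
    """Parse a Linux-style CPU list such as "0-3,8,10-11" into a set of CPU indices."""
    cpus: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start, end = (int(x) for x in part.split("-", 1))
            if end < start:
                raise ValueError(f"invalid range: {part!r}")
            cpus.update(range(start, end + 1))
        else:
            cpus.add(int(part))
    return cpus


def qemu_pid(vmid: int) -> int:
    """Read the main QEMU PID of a running VM.

    ASSUMPTION: Proxmox writes it to /var/run/qemu-server/<vmid>.pid.
    """
    return int(Path(f"/var/run/qemu-server/{vmid}.pid").read_text().strip())


def pin_all_threads(pid: int, cpus: set[int]) -> list[int]:
    """Set the CPU affinity of every thread of `pid`, similar to `taskset -a -p`.

    QEMU runs each vCPU in its own thread, so pinning only the main PID
    would leave the vCPU threads free to run anywhere. Linux accepts a
    thread ID in sched_setaffinity, so each entry in /proc/<pid>/task is
    pinned individually. Returns the thread IDs that were pinned.
    """
    pinned: list[int] = []
    for task in Path(f"/proc/{pid}/task").iterdir():
        tid = int(task.name)
        try:
            os.sched_setaffinity(tid, cpus)
            pinned.append(tid)
        except ProcessLookupError:
            # The thread exited between listing and pinning; skip it.
            continue
    return pinned
```

Run it as root on the Proxmox host while the VM is running, for example `pin_all_threads(qemu_pid(100), parse_cpu_list("4-7"))` for a hypothetical VM 100. The affinity does not survive a VM restart, because QEMU starts as a new process with a new PID, so the pinning has to be reapplied each time the VM starts.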

00:00 Intro

00:22 What is CPU pinning?

01:39 Setting up CPU pinning in Proxmox

09:39 Benchmark results

CPU Pinning in Proxmox: Configuration and Performance testing

Proxmox Sizing CPU and Memory

10 tips to get the most out of your Proxmox server

How does Proxmox use P and E cores?

Proxmox 8.0 - PCIe Passthrough Tutorial

Remote Gaming! (and Video Encoding using Proxmox and GPU Passthrough)

This Changes Everything: Passthrough iGPU To Your VM with Proxmox

Unraid: CPU PINNING, VM Settings für Gaming/Arbeit, Passthrough und GPUs! #007

02 - Virtualization and Proxmox Installation

What are NUMA Nodes?

how to clean thermal paste off of a cpu socket #shorts

Double GPU Passthrough in Proxmox 8!? Play Baldur's Gate 3 and Minecraft On The Same Machine?

what is VCPU? CPU - Core - Threads #compute #vcpu #bigdata #sparkcluster #databrickscluster #cluster

Kiosk mode Bruteforce Evasion with Flipper Zero

How to setup HDD Spin down in Proxmox VE

My Proxmox Home Server ... (GPU Passthrough, IOMMU Groups and more)

Proxmox VE 7.3: USB device sem desligar vm e cpu affinity.

Manage your Media Collection with Jellyfin! Install on Proxmox with Hardware Transcode

This isn't a normal mini PC... and I love it.

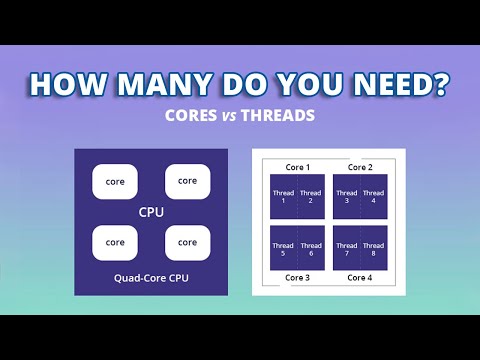

Does More Core Means Better Performance? | CPU Cores & Threads Explained

Proxmox 7.3 Testing and Q&A

iGPU Transcoding In Proxmox with Jellyfin Media Center!

The ULTIMATE Budget Workstation.

Stream 5 - Proxmox Install and Config in the Lab
