[Classic] Word2Vec: Distributed Representations of Words and Phrases and their Compositionality


#ai #research #word2vec

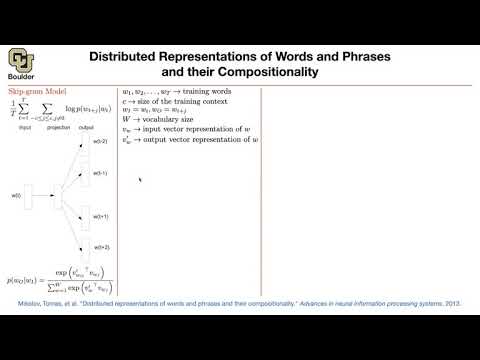

Word vectors have been one of the most influential techniques in modern NLP to date. This paper describes Word2Vec, which is the most popular technique for obtaining word vectors. The paper introduces negative sampling as an approximation to noise contrastive estimation and shows that it allows word vectors to be trained on giant corpora on a single machine in a very short time.
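For reference, here is the negative sampling objective as I recall it from the paper, which replaces each log P(w_O | w_I) term in the Skip-gram objective. The symbols follow the paper's notation: v and v' are the input and output vectors, k is the number of negative samples, and P_n(w) is the noise distribution.

```latex
\log \sigma\!\left({v'_{w_O}}^{\top} v_{w_I}\right)
  + \sum_{i=1}^{k} \mathbb{E}_{w_i \sim P_n(w)}
    \left[ \log \sigma\!\left(-{v'_{w_i}}^{\top} v_{w_I}\right) \right]
```

The first term pulls the observed context word towards the input word, and the sum pushes k words drawn from the noise distribution away from it.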

OUTLINE:

0:00 - Intro & Outline

1:50 - Distributed Word Representations

5:40 - Skip-Gram Model

12:00 - Hierarchical Softmax

14:55 - Negative Sampling

22:30 - Mysterious 3/4 Power

25:50 - Frequent Words Subsampling

28:15 - Empirical Results

29:45 - Conclusion & Comments
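The "3/4 power" in the outline refers to the noise distribution used for negative sampling: the unigram distribution raised to the power 3/4 and renormalized. Below is a minimal sketch of that idea in plain Python. The function names and the toy counts are made up for illustration and are not taken from the paper's code.

```python
import random
from collections import Counter


def noise_distribution(counts, power=0.75):
    """Return {word: probability} with P(w) proportional to count(w) ** power."""
    weights = {w: c ** power for w, c in counts.items()}
    total = sum(weights.values())
    return {w: wt / total for w, wt in weights.items()}


def sample_negatives(dist, k, rng):
    """Draw k negative words (with replacement) from the noise distribution."""
    words = list(dist)
    probs = [dist[w] for w in words]
    return rng.choices(words, weights=probs, k=k)


# Toy word counts (hypothetical, for illustration only).
counts = Counter({"the": 1000, "cat": 10, "zygote": 1})
dist = noise_distribution(counts)
negatives = sample_negatives(dist, k=5, rng=random.Random(0))
```

Compared with the raw unigram distribution, the exponent shifts some probability mass from very frequent words to rare ones, so rare words show up as negatives more often than their counts alone would suggest.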

Abstract:

The recently introduced continuous Skip-gram model is an efficient method for learning high-quality distributed vector representations that capture a large number of precise syntactic and semantic word relationships. In this paper we present several extensions that improve both the quality of the vectors and the training speed. By subsampling of the frequent words we obtain significant speedup and also learn more regular word representations. We also describe a simple alternative to the hierarchical softmax called negative sampling. An inherent limitation of word representations is their indifference to word order and their inability to represent idiomatic phrases. For example, the meanings of "Canada" and "Air" cannot be easily combined to obtain "Air Canada". Motivated by this example, we present a simple method for finding phrases in text, and show that learning good vector representations for millions of phrases is possible.
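Two of the extensions mentioned in the abstract come down to short formulas, sketched here in Python. As I recall from the paper, a word with relative frequency f(w) is discarded with probability 1 - sqrt(t / f(w)), with t around 1e-5, and a bigram is scored as (count(a b) - delta) / (count(a) * count(b)). Clipping the discard probability at zero, the default value of delta, and the function names are my assumptions, not something the paper prescribes.

```python
import math


def discard_probability(freq, t=1e-5):
    """Probability of dropping a word with relative frequency `freq`.

    Implements 1 - sqrt(t / freq); clipping at 0 for words rarer than t
    is an assumption made here so the result is a valid probability.
    """
    return max(0.0, 1.0 - math.sqrt(t / freq))


def phrase_score(count_ab, count_a, count_b, delta=5):
    """Bigram score (count(a b) - delta) / (count(a) * count(b)).

    `delta` discounts very rare pairs; the default of 5 is a
    hypothetical value chosen for illustration.
    """
    return (count_ab - delta) / (count_a * count_b)


# A word making up 1% of the corpus is dropped most of the time,
# while a word at or below the threshold is always kept.
p_frequent = discard_probability(0.01)
p_rare = discard_probability(1e-6)
```

Bigrams whose score exceeds a chosen threshold would be merged into single tokens such as "Air Canada" before training; the threshold itself is a tuning choice.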

Authors: Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg Corrado, Jeffrey Dean

Links:

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

