The Illustrated Word2vec - A Gentle Intro to Word Embeddings in Machine Learning

The concept of word embeddings is central to natural language processing (NLP). It is a method of representing words numerically, as lists of numbers that capture their meaning. Word2vec is an algorithm (a couple of algorithms, actually) for creating word vectors, and it helped popularize this concept. In this video, Jay takes you on a guided tour of The Illustrated Word2vec, an article explaining the method and how it came to be developed.
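To make "lists of numbers that capture their meaning" concrete, here is a minimal sketch in Python. The three-dimensional vectors are invented for illustration and do not come from a trained word2vec model; real embeddings are learned from text and typically have tens to hundreds of dimensions. The sketch shows only how similarity between two word vectors is commonly measured, using cosine similarity.

```python
import math

# Hypothetical toy vectors, made up for this example (not trained embeddings).
toy_embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "banana": [0.1, 0.0, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Vectors pointing in similar directions score closer to 1.
sim_royal = cosine_similarity(toy_embeddings["king"], toy_embeddings["queen"])
sim_fruit = cosine_similarity(toy_embeddings["king"], toy_embeddings["banana"])

print(f"king vs queen:  {sim_royal:.3f}")
print(f"king vs banana: {sim_fruit:.3f}")
```

With these made-up numbers, "king" scores much closer to "queen" than to "banana", which is the kind of relationship a trained embedding is meant to encode.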

By Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg Corrado, and Jeffrey Dean

---

More videos by Jay:

Language Processing with BERT: The 3 Minute Intro (Deep learning for NLP)

Explainable AI Cheat Sheet - Five Key Categories

The Narrated Transformer Language Model

Word Embedding and Word2Vec, Clearly Explained!!!

Word embedding explained | Jay Alammar's 'The Illustrated Word2vec' by Abhilash | NLP...

Intuition & Use-Cases of Embeddings in NLP & beyond

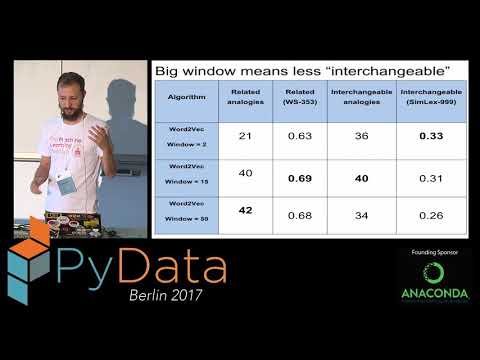

(Re)training word embeddings for a specific domain - Jetze Schuurmans

A Complete Overview of Word Embeddings

Word2Vec, GloVe, FastText- EXPLAINED!

Exploring word2vec vector space - Julia Bazińska

Lev Konstantinovskiy - Next generation of word embeddings in Gensim

[Classic] Word2Vec: Distributed Representations of Words and Phrases and their Compositionality

Cracking the Code: Word Embeddings for NLP & GPT

Francois Scharffe: Word embeddings as a service

Globally Scalable Web Document Classification Using Word2Vec

R & Python - word2vec

Converting words to numbers, Word Embeddings | Deep Learning Tutorial 39 (Tensorflow & Python)

Lev Konstantinovskiy - Text similiarity with the next generation of word embeddings in Gensim

Deep Learning State of the Art (2019) - MIT

10 years of NLP history explained in 50 concepts | From Word2Vec, RNNs to GPT

The scientist who coined retrieval augmented generation

Instructor - Embeddings Model

Word Embeddings || Embedding Layers || Quick Explained

Text Embeddings für Neulinge

NLP Tutorial 18 | word2vec Word Embedding with SpaCy

What is Word2Vec? How does it work? CBOW and Skip-gram
