
Vectoring Words (Word Embeddings) - Computerphile


How do you represent a word in AI? Rob Miles shows how words can be represented as multi-dimensional vectors, with some unexpected results.
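The core idea is that each word becomes a list of numbers, and words used in similar contexts end up pointing in similar directions. Below is a minimal sketch of that idea under explicit assumptions. The 3-dimensional vectors are hypothetical and hand-picked for illustration, not taken from the video or from any trained model; real embeddings such as word2vec are learned from large text corpora and have hundreds of dimensions. Cosine similarity is used as the closeness measure, and the `analogy` helper (a hypothetical name) shows the commonly cited "king - man + woman ≈ queen" style of vector arithmetic.

```python
import math

# Hypothetical toy embeddings, hand-picked for illustration only.
# Dimension 0 loosely encodes "royalty", dimension 1 "male vs female",
# dimension 2 "fruit". Trained embeddings have no such labeled axes.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, -0.8, 0.1],
    "man":   [0.1, 0.8, 0.0],
    "woman": [0.1, -0.8, 0.0],
    "apple": [0.0, 0.0, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, embeddings=EMBEDDINGS):
    """Answer "a is to b as c is to ?" by finding the word whose vector is
    closest to vec(b) - vec(a) + vec(c), excluding the three query words."""
    target = [vb - va + vc
              for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = (w for w in embeddings if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine_similarity(embeddings[w], target))


if __name__ == "__main__":
    # "man is to king as woman is to ?"
    print(analogy("man", "king", "woman"))
```

With these toy vectors, `king - man + woman` lands exactly on `queen`, so the script prints `queen`. Excluding the query words matters because in real embedding spaces the nearest vector to the target is often one of the inputs themselves.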

08:06 - Yes, it's a rubber egg :)

Unicorn AI:

This video was filmed and edited by Sean Riley.



Word Embedding and Word2Vec, Clearly Explained!!!

12.1: What is word2vec? - Programming with Text

What is Word2Vec? A Simple Explanation | Deep Learning Tutorial 41 (Tensorflow, Keras & Python)

Vector databases are so hot right now. WTF are they?

Vector Databases simply explained! (Embeddings & Indexes)

The Illustrated Word2vec - A Gentle Intro to Word Embeddings in Machine Learning

Word Embedding - Natural Language Processing| Deep Learning

Word embedding | natural language processing (NLP)

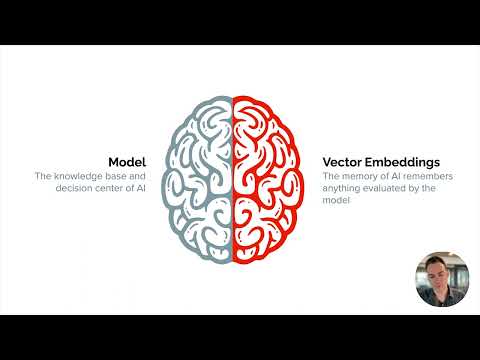

What are AI vector embeddings?

Text Vectorization NLP | Vectorization using Python | Bag Of Words | Machine Learning

AI Demystified: Word Embeddings

Pre Trained Word Embeddings | Word2Vect, GloVe

How AI 'Understands' Images (CLIP) - Computerphile

Embeddings from Scratch!

Natural Language Processing|TF-IDF Intuition| Text Prerocessing

What Are Word Embeddings? | Aadil Ali

LLM embeddings explained by Jerry Liu from LlamaIndex

Intuition & Use-Cases of Embeddings in NLP & beyond

L04 : Text and Embeddings: Introduction to NLP, Word Embeddings, Word2Vec

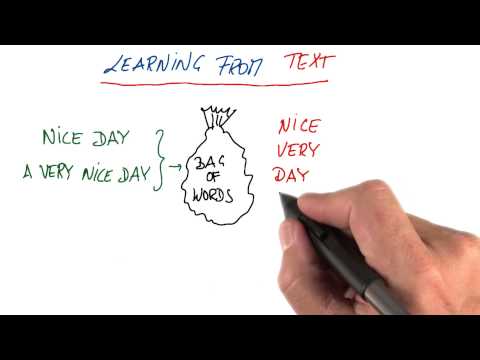

Bag of Words - Intro to Machine Learning

5 Ways To Generate Vector Embeddings

Word Embeddings: Introduction

[Classic] Word2Vec: Distributed Representations of Words and Phrases and their Compositionality
