filmov.tv

Controlling Blender with my voice using LLM

Experimenting with Google's new Gemini 2.0 Flash Experimental to control Blender with my voice!

Tools used:

Controlling Blender with my voice using LLM

Controlling Blender with your voice using LLM, here's how

Control Blender with your Voice (demo)

Controlling Blender with my voice using Google Gemini LLM

How to Control Blender with Your Voice: Talking Character Tutorial

Speech recognition and then visualize the 3D text in Blender Eevee in realtime

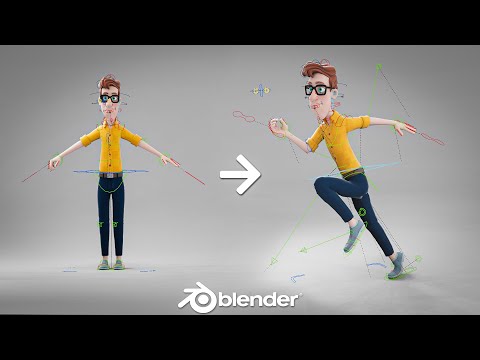

Character animation for impatient people - Blender Tutorial

Which one looks better with fps? (Aimkid - Modify) Lel

How to Animate 3D Characters in 1 Minute

FAST TIPS & TUTORIALS Wow! Real Human Voices for your Youtube Iclone Blender Any Videos. AMAZON ...

LIVE ACTION Avatar Bending Scrolls

LipSync Animation | Arcane | Blender 3D

Blender Tutorial: How to Speed Up Video in Blender Video Editor

Playing With Time

FREE-AUTOMATIC Facial MoCap Shapekeys (Blender)

How to generate Lip Sync for Mouth Shape Keys with Audio Voice - Blender 3.6 Tutorial

Third Person Camera - Unity in 15 seconds

Trying to make Supercar commercial /ad on my own / this is the result

3D Printed Controllable Prosthetic Hand via EMG

Creating in Flow | How to use Google’s new AI Filmmaking Tool

Adding Depth to Doof

Learn Rigid Body Physics in Blender | All Settings Explained With Examples | Blender Eevee & Cyc...

Home automation using raspberry pi (turning the blender with a dc pulse)

How to rig cheeks in Blender using lattices | Intermediate level Tutorial

Комментарии

0:15:33

0:15:33

0:07:02

0:07:02

0:00:41

0:00:41

0:19:08

0:19:08

0:19:53

0:19:53

0:00:24

0:00:24

0:12:49

0:12:49

0:00:19

0:00:19

0:01:01

0:01:01

0:00:54

0:00:54

0:00:21

0:00:21

0:00:21

0:00:21

0:00:50

0:00:50

0:01:34

0:01:34

0:02:40

0:02:40

0:15:50

0:15:50

0:00:23

0:00:23

0:00:13

0:00:13

0:00:46

0:00:46

0:02:53

0:02:53

0:01:02

0:01:02

0:15:38

0:15:38

0:00:27

0:00:27

0:09:23

0:09:23