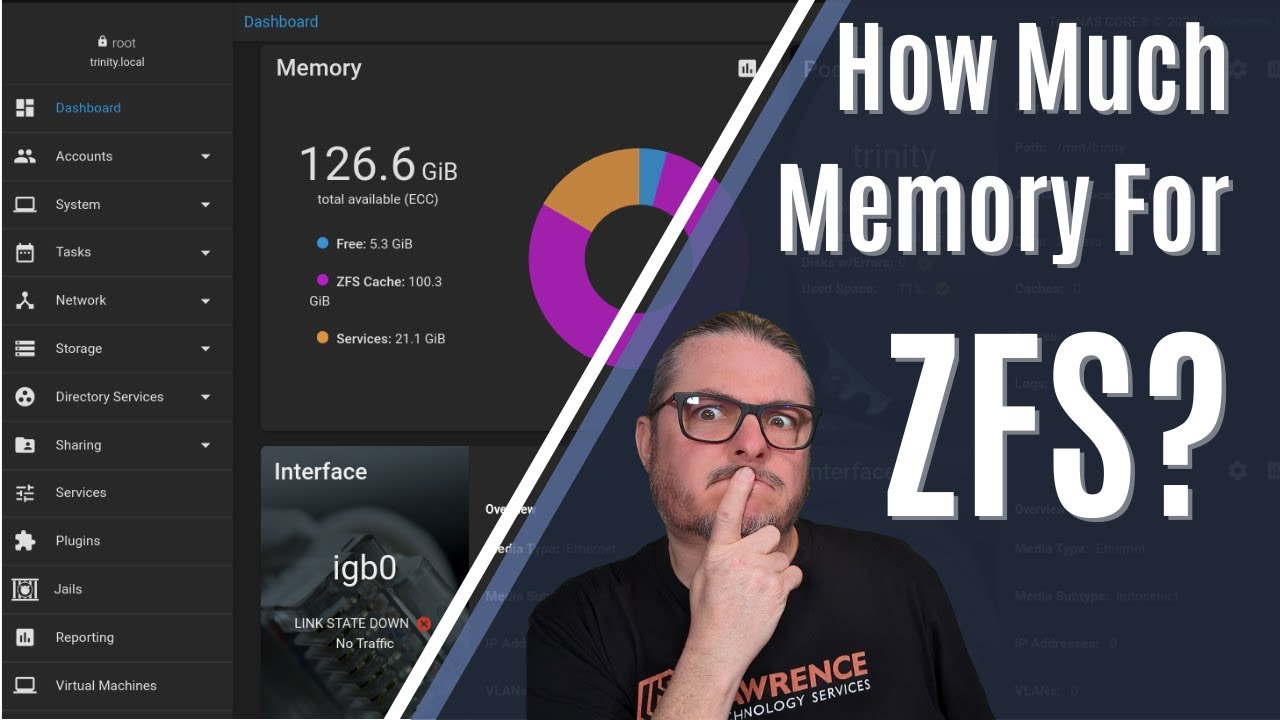

How Much Memory Does ZFS Need and Does It Have To Be ECC?

ZFS is a COW

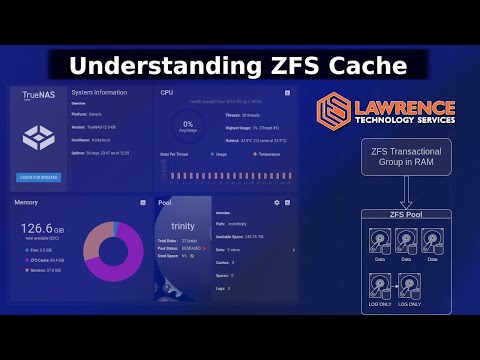

Explaining ZFS LOG and L2ARC Cache: Do You Need One and How Do They Work?

Set ZFS Arc Size on TrueNAS Scale

Connecting With Us

---------------------------------------------------

Lawrence Systems Shirts and Swag

---------------------------------------------------

AFFILIATES & REFERRAL LINKS

---------------------------------------------------

Amazon Affiliate Store

UniFi Affiliate Link

All Of Our Affiliates that help us out and can get you discounts!

Gear we use on Kit

Use OfferCode LTSERVICES to get 10% off your order at

Digital Ocean Offer Code

HostiFi UniFi Cloud Hosting Service

Protect your privacy with a VPN from Private Internet Access

Patreon

⏱️ Time Stamps ⏱️

00:00 ZFS Memory Requirement

01:32 Minimum Memory ZFS System

03:04 TrueNAS Scale Linux ZFS Memory Usage

04:03 ZFS Memory For Performance

#TrueNAS #ZFS

Do You Need ECC Memory For ZFS? #truenas #storage #Shorts

Explaining ZFS LOG and L2ARC Cache: Do You Need One and How Do They Work?

What Is ZFS?: A Brief Primer

What is L2ARC for ZFS and why you should use it?

Do you need ECC RAM with ZFS?

ZFS for Newbies

ZFS Caching: How Big Is the ARC? by George Wilson

#BUSTED Mythbusting with ZFS: 'Single Drive IOPs Limitation per RAIDZ vdev'

Getting the Most Performance out of TrueNAS and ZFS

Scaling ZFS for NVMe - Allan Jude - EuroBSDcon 2022

Make Your Home Server Go FAST with SSD Caching

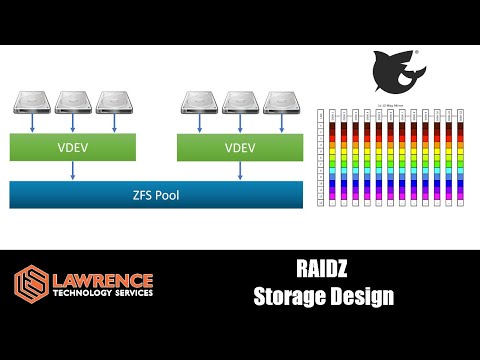

TrueNAS ZFS VDEV Pool Design Explained: RAIDZ RAIDZ2 RAIDZ3 Capacity, Integrity, and Performance.

How *I* Reduced Proxmox RAM Usage with NFS Instead of ZFS

INSANE PetaByte Homelab! (TrueNAS Scale ZFS + 10Gb Networking + 40Gb SMB Fail)

ZFS Basics - Pools and VDEVs - Testing, Configuration, and Expansion

DirectIO for ZFS by Brian Atkinson

Deciding between RAIDZ-1 and RAIDZ-2 (Configuring ZFS)

I had VDEV Layouts all WRONG! ...and you probably do too!

ZFS Essentials: Reformat (ZFS) and or Upgrade Cache Pools in Unraid

Linux Talk #2: Ubuntu 19.10 ZFS vs EXT4 | Memory Usage Benchmark and Comparison | 2019

TrueNAS: How To Expand A ZFS Pool

Tuesday Tech Tip - ZFS Read & Write Caching

ZFS 101: Leveraging Datasets and Zvols for Better Data Management
