I had VDEV Layouts all WRONG! ...and you probably do too!

Look, it's hard to know which VDEV layout is best for #TrueNAS CORE and SCALE. Rich has built numerous different disk layouts and never really saw much of a performance difference between them. So, he's endeavoring to find out. In this video, we show you the results of our testing on which data VDEV layout is the best and whether read/write caches (L2ARC & SLOG caches) actually make a difference. Spoiler alert: we had to go get answers from the experts! Thank you again @TrueNAS, @iXsystemsInc, and Chris Peredun for helping us answer the tough questions and setting us straight!

**GET SOCIAL AND MORE WITH US HERE!**

Get help with your Homelab, ask questions, and chat with us!

Subscribe and follow us on all the socials, would ya?

Find all things 2GT at our website!

More of a podcast kinda person? Check out our Podcast here:

Support us through the YouTube Membership program! Becoming a member gets you priority comments, special emojis, and helps us make videos!

**TIMESTAMPS!**

0:00 Introduction

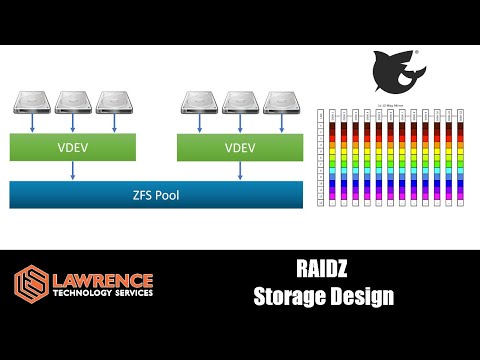

0:40 OpenZFS and VDEV types (Data VDEVs, L2ARC VDEVs, and Log VDEVs, OH MY!)

2:21 The hardware we used to test

3:09 The VDEV layout combinations we tested

3:30 A word about the testing results

3:53 The results of our Data VDEV tests

5:22 Something's not right here. Time to get some help

5:42 Interview with Chris Peredun at iXsystems

6:00 Why are my performance results so similar regardless of VDEV layout?

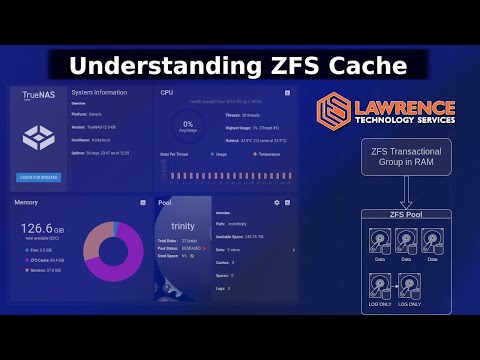

7:30 Where do caching VDEVs make sense to deploy in OpenZFS?

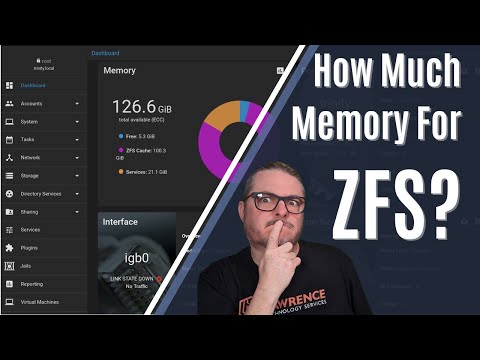

10:04 How much RAM should you put into your TrueNAS server?

11:37 What are the best VDEV layouts for simple home file sharing? (SMB, NFS, and mixed SMB/NFS)

13:27 What's the best VDEV layout for high random read/writes?

14:09 What's the best VDEV layout for iSCSI and Virtualization?

16:41 Conclusions, final thoughts, and what you should do moving forward!

17:10 Closing! Thanks for watching!
