filmov

tv

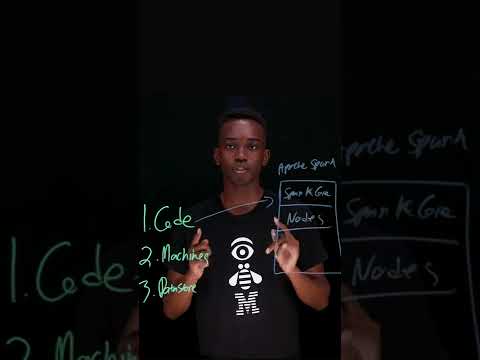

PySpark: Python API for Spark

Summary:

00:33 What is Spark?

03:00 What is PySpark?

03:45 Example Word Count

04:35 Demonstration of interactive shell on AWS EC2

06:37 Spark web interface

11:20 API documentation

11:27 Python doctest: create tests from interactive samples

12:39 Getting help: help(sc)

13:18 PySpark Implementation details

14:15 PySpark is less than 2K lines, including comments

17:18 Pickled Objects, RDD[Array[Byte]]

17:44 Batching Pickle to reduce overhead

18:00 Consolidating operations into single pass when possible

19:27 PySpark roadmap: adding sorting support, file formats such as CSV, PyPy JIT
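The 17:18 and 17:44 entries describe Python objects being stored as pickled bytes and pickled in batches to reduce overhead. The following is a minimal sketch of that idea using only the standard library. It is not PySpark's actual serializer: the helper names `batched`, `serialize_batched`, and `deserialize_batched`, the sample records, and the batch size are all assumptions made for illustration.

```python
import pickle
from itertools import islice


def batched(items, batch_size):
    """Yield lists of up to batch_size items from any iterable."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def serialize_batched(items, batch_size=3):
    """Pickle each batch as one byte string instead of one per item."""
    return [pickle.dumps(batch) for batch in batched(items, batch_size)]


def deserialize_batched(blobs):
    """Unpickle each byte string and flatten the batches back into items."""
    for blob in blobs:
        yield from pickle.loads(blob)


# Hypothetical sample data standing in for the elements of a dataset.
records = [("spark", 1), ("python", 2), ("rdd", 3), ("pickle", 4)]

# One pickle call per record versus one pickle call per batch.
per_item = [pickle.dumps(record) for record in records]
per_batch = serialize_batched(records, batch_size=3)

# Round trip: the batched form restores the original records in order.
restored = list(deserialize_batched(per_batch))
```

With four records and a batch size of 3, the batched form produces two byte strings rather than four, so the fixed cost of each pickle call and its framing is paid per batch rather than per record.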
