AWS Tutorials – Building ETL Pipeline using AWS Glue and Step Functions

In AWS, ETL pipelines can be built using AWS Glue jobs and Glue crawlers. Glue jobs are responsible for transforming the data, while crawlers are responsible for cataloging it in the AWS Glue Data Catalog. AWS Step Functions is one way to orchestrate these jobs and crawlers into a pipeline. In this tutorial, learn how to use Step Functions to build an ETL pipeline in AWS.
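As a rough sketch of how Step Functions can tie these pieces together, the Python function below builds an Amazon States Language definition that runs a Glue job and then a crawler, polling until the crawler finishes. The job-then-crawler order, the example job and crawler names, and the 30-second polling interval are assumptions for illustration, not details taken from the tutorial.

```python
import json


def build_etl_state_machine(job_name: str, crawler_name: str, poll_seconds: int = 30) -> dict:
    """Return an Amazon States Language definition: run a Glue job, then a crawler.

    Sketch only: the job-then-crawler ordering is an assumption.
    """
    return {
        "Comment": "Run a Glue ETL job, then crawl its output into the Data Catalog",
        "StartAt": "RunGlueJob",
        "States": {
            # Optimized Glue integration; ".sync" makes Step Functions wait for the job run to end.
            "RunGlueJob": {
                "Type": "Task",
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": job_name},
                "Next": "StartCrawler",
            },
            # Crawlers are started through the AWS SDK integration, which returns immediately.
            "StartCrawler": {
                "Type": "Task",
                "Resource": "arn:aws:states:::aws-sdk:glue:startCrawler",
                "Parameters": {"Name": crawler_name},
                "Next": "WaitForCrawler",
            },
            # Pause between status checks.
            "WaitForCrawler": {
                "Type": "Wait",
                "Seconds": poll_seconds,
                "Next": "GetCrawlerState",
            },
            # GetCrawler returns {"Crawler": {..., "State": "READY" | "RUNNING" | "STOPPING"}}.
            "GetCrawlerState": {
                "Type": "Task",
                "Resource": "arn:aws:states:::aws-sdk:glue:getCrawler",
                "Parameters": {"Name": crawler_name},
                "Next": "IsCrawlerFinished",
            },
            # The crawler returns to READY once its run has completed; otherwise keep polling.
            "IsCrawlerFinished": {
                "Type": "Choice",
                "Choices": [
                    {"Variable": "$.Crawler.State", "StringEquals": "READY", "Next": "Done"}
                ],
                "Default": "WaitForCrawler",
            },
            "Done": {"Type": "Succeed"},
        },
    }


if __name__ == "__main__":
    # Hypothetical job and crawler names, used only for illustration.
    definition = build_etl_state_machine("raw-to-parquet-job", "processed-data-crawler")
    print(json.dumps(definition, indent=2))
```

To deploy it, the JSON could be passed to the boto3 Step Functions client, e.g. `create_state_machine(name=..., definition=json.dumps(definition), roleArn=...)`, with an execution role that is allowed to call the Glue APIs. A fuller version would also inspect `Crawler.LastCrawl.Status` so that a failed crawl does not end in the `Succeed` state.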

Related videos:

Building ETL Pipelines on AWS

Back to Basics: Building an Event Driven Serverless ETL Pipeline on AWS

AWS Tutorials – Building Event Based AWS Glue ETL Pipeline

How to build an ETL pipeline with Python | Data pipeline | Export from SQL Server to PostgreSQL

What is ETL Pipeline? | ETL Pipeline Tutorial | How to Build ETL Pipeline | Simplilearn

AWS Tutorials - Methods of Building AWS Glue ETL Pipeline

AWS Hands-On: ETL with Glue and Athena

How to build and automate a python ETL pipeline with airflow on AWS EC2 | Data Engineering Project

AWS Tutorials - Build Enterprise Scale Python ETL Jobs using AWS Glue on Ray

ETL | Incremental Data Load from Amazon S3 Bucket to Amazon Redshift Using AWS Glue | Datawarehouse

AWS Tutorials - Data Quality Check in AWS Glue ETL Pipeline

AWS Tutorials – ETL Pipeline with Multiple Files Ingestion in S3

AWS Glue Tutorial | Getting Started with AWS Glue ETL | AWS Tutorial for Beginners | Edureka

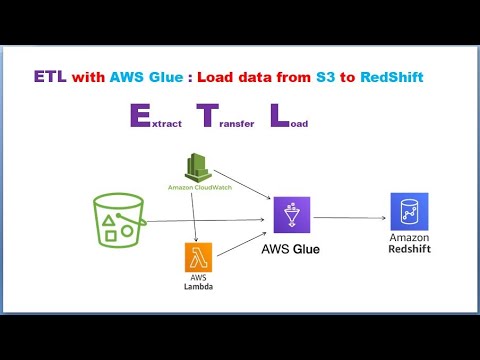

ETL | AWS Glue | AWS S3 | Load Data from AWS S3 to Amazon RedShift

AWS Glue Tutorial for Beginners [FULL COURSE in 45 mins]

AWS Tutorials - Joining Datasets in AWS Glue ETL Job

How to build and automate your Python ETL pipeline with Airflow | Data pipeline | Python

AWS Tutorials - Using Glue Job ETL from REST API Source to Amazon S3 Bucket Destination

AWS Data Engineer Project | AWS Glue | ETL in AWS

How to create and run a Glue ETL Job | Transform S3 Data using AWS Glue ETL | AWS Glue ETL Pipeline

What Is DBT and Why Is It So Popular - Intro To Data Infrastructure Part 3

What is Data Pipeline | How to design Data Pipeline ? - ETL vs Data pipeline (2024)

Building ETL Pipelines Using Cloud Dataflow in GCP
