filmov

tv

AWS Tutorials - Methods of Building AWS Glue ETL Pipeline


AWS Glue pipelines are responsible for ingesting data into the data platform or data lake and for managing the data transformation lifecycle, moving data from the raw state to the cleansed state to the curated state. There are many ways to build such pipelines. In this video, we discuss several of these methods and compare them in terms of reusability, observability, and development effort.
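To make the raw, cleansed, and curated lifecycle concrete, here is a minimal Python sketch of one common way to chain Glue jobs: a Glue workflow whose triggers start each stage after the previous stage succeeds. It is not necessarily one of the methods compared in the video. The workflow and job names (`sales-pipeline`, `ingest-to-raw`, `raw-to-cleansed`, `cleansed-to-curated`) and the helper `build_chain_triggers` are hypothetical. The function only builds dictionaries shaped as keyword arguments for the boto3 Glue client's `create_trigger` call, so it runs without AWS access.

```python
"""Build AWS Glue trigger definitions that chain jobs raw -> cleansed -> curated.

Workflow and job names are hypothetical placeholders. Each returned dict is
shaped as keyword arguments for boto3's Glue client ``create_trigger`` call.
"""
from typing import Any, Dict, List


def build_chain_triggers(workflow: str, jobs: List[str]) -> List[Dict[str, Any]]:
    """Return create_trigger kwargs: an on-demand start trigger for the first
    job, then one conditional trigger per later job that fires when the
    previous job has SUCCEEDED."""
    if not jobs:
        raise ValueError("at least one job is required")

    triggers: List[Dict[str, Any]] = [
        {
            "Name": f"{workflow}-start",
            "WorkflowName": workflow,
            "Type": "ON_DEMAND",  # fired when a workflow run is started
            "Actions": [{"JobName": jobs[0]}],
        }
    ]
    # Pair each job with its successor: (a, b), (b, c), ...
    for prev_job, next_job in zip(jobs, jobs[1:]):
        triggers.append(
            {
                "Name": f"{workflow}-after-{prev_job}",
                "WorkflowName": workflow,
                "Type": "CONDITIONAL",
                "StartOnCreation": True,  # activate the trigger immediately
                "Predicate": {
                    "Logical": "AND",
                    "Conditions": [
                        {
                            "LogicalOperator": "EQUALS",
                            "JobName": prev_job,
                            "State": "SUCCEEDED",
                        }
                    ],
                },
                "Actions": [{"JobName": next_job}],
            }
        )
    return triggers


if __name__ == "__main__":
    # Hypothetical job names for the ingestion and transformation stages.
    stages = ["ingest-to-raw", "raw-to-cleansed", "cleansed-to-curated"]
    for spec in build_chain_triggers("sales-pipeline", stages):
        print(spec["Name"], spec["Type"], spec["Actions"][0]["JobName"])
```

Assuming the jobs already exist and AWS credentials are configured, the specs could be applied with `glue = boto3.client("glue")`, `glue.create_workflow(Name="sales-pipeline")`, one `glue.create_trigger(**spec)` per spec, and then `glue.start_workflow_run(Name="sales-pipeline")`. Keeping the stage order as plain data lets the same chaining logic be reused across pipelines.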

AWS Tutorials - Methods of Building AWS Glue ETL Pipeline

AWS In 5 Minutes | What Is AWS? | AWS Tutorial For Beginners | AWS Training | Simplilearn

Top 50+ AWS Services Explained in 10 Minutes

Create a REST API with API Gateway and Lambda | AWS Cloud Computing Tutorials for Beginners

AWS In 10 Minutes | AWS Tutorial For Beginners | AWS Cloud Computing For Beginners | Simplilearn

Basics of Amazon CloudWatch and CloudWatch Metrics | AWS Tutorials for Beginners

What is AWS In Telugu

Instant AI Agents on AWS (The Easy Way)

Unraveling Multi-tenancy Issues in Aurora MySQL using AI/ML | Let's Talk About Data

The 24 MOST POPULAR AWS Services You Need to Know in 2025

🔥 AWS Cloud Engineer Salary | Salary Of Cloud Engineer In India #Shorts #simplilearn

UPDATED - Create Your First AWS Lambda Function | AWS Tutorial for Beginners

AWS Amplify (Gen 1) in Plain English | Getting Started Tutorial for Beginners

AWS S3 Tutorial For Beginners

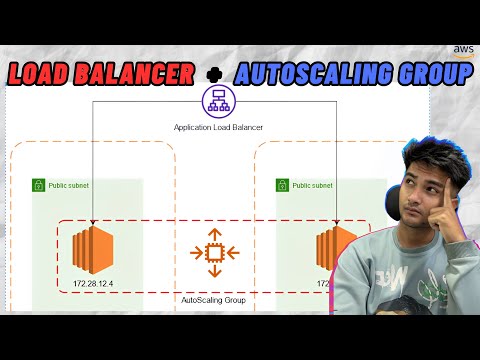

AWS Tutorial to create Application Load Balancer and Auto Scaling Group

What is Amazon Web Services? AWS Explained | Tutorial & Resources

AWS API Gateway tutorial ( Latest)

How to use the STAR Method in Job Interviews 🌟 #careeradvice

AWS Vs. Azure Vs. Google Cloud

How AWS Batch Works

3G Open Root Stick Welding by @100amper

AWS SQS Overview For Beginners

How to make AWS free account ✅ #viral #trending #shorts #aws #clouds #shortsvideo

How to create REST API in AWS Using API Gateway and Lambda | Hands On Tutorial

Комментарии

0:43:57

0:43:57

0:05:30

0:05:30

0:11:46

0:11:46

0:04:33

0:04:33

0:09:12

0:09:12

0:11:00

0:11:00

0:03:30

0:03:30

0:07:02

0:07:02

0:58:43

0:58:43

0:13:48

0:13:48

0:00:39

0:00:39

0:15:56

0:15:56

0:16:54

0:16:54

0:27:18

0:27:18

0:12:43

0:12:43

0:07:29

0:07:29

0:31:00

0:31:00

0:01:00

0:01:00

0:00:05

0:00:05

0:03:36

0:03:36

0:00:21

0:00:21

0:28:49

0:28:49

0:00:16

0:00:16

0:03:33

0:03:33