AWS Tutorials – Building Event Based AWS Glue ETL Pipeline

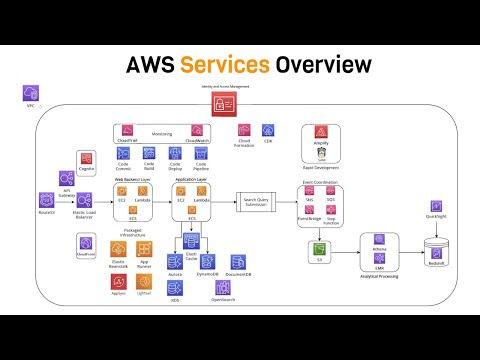

AWS Glue pipelines ingest data into a data platform or data lake and manage the data transformation lifecycle from the raw to the cleansed to the curated state. There are many ways to build such pipelines. In this video, you learn how to build an event-based ETL pipeline.
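As a rough illustration of the event-based idea, the sketch below reacts to an Amazon EventBridge "Glue Job State Change" event and starts the next stage when the previous one succeeds. It is not taken from the video: the job names (`raw-to-cleansed`, `cleansed-to-curated`), the `NEXT_JOB` mapping, and the `handle` function are hypothetical, and the Glue client is passed in so the logic can be tried without AWS credentials. In a real deployment the client would presumably be `boto3.client("glue")`, whose `start_job_run(JobName=...)` call returns a `JobRunId`.

```python
from typing import Any, Optional

# Hypothetical mapping: which Glue job to start after a given job succeeds.
# These names are assumptions for illustration only.
NEXT_JOB = {
    "raw-to-cleansed": "cleansed-to-curated",
}


def next_job_for(event: dict) -> Optional[str]:
    """Return the follow-up job for a Glue job-state-change event, or None."""
    if event.get("source") != "aws.glue":
        return None
    if event.get("detail-type") != "Glue Job State Change":
        return None
    detail = event.get("detail", {})
    # Only a successful run should advance the data to the next stage.
    if detail.get("state") != "SUCCEEDED":
        return None
    return NEXT_JOB.get(detail.get("jobName"))


def handle(event: dict, glue_client: Any) -> Optional[str]:
    """Start the next Glue job, if any, and return its run id."""
    job = next_job_for(event)
    if job is None:
        return None
    # glue_client is assumed to behave like boto3.client("glue").
    response = glue_client.start_job_run(JobName=job)
    return response["JobRunId"]
```

Keeping the routing decision in `next_job_for` separate from the AWS call makes the stage transitions easy to check in isolation; failed runs and jobs with no successor are simply ignored here.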

Back to Basics: Building an Event Driven Serverless ETL Pipeline on AWS

Event Driven Architectures vs Workflows (with AWS Services!)

AWS Project: Architect and Build an End-to-End AWS Web Application from Scratch, Step by Step

Event Driven Architecture | AWS S3 . SNS . SQS . Lambda

AWS re:Invent 2020: Building event-driven applications with Amazon EventBridge

AMAZON EVENTBRIDGE tutorial with AWS CDK - Building an Event-Driven Application

AWS re:Invent 2022 - Building next-gen applications with event-driven architectures (API311-R)

AWS Certified Security Specialty Exam Practice Questions - ANALYSIS P6 (SCS-C02)

AWS re:Invent 2019: [NEW LAUNCH!] Building event-driven architectures w/ Amazon EventBridge (API320)

AMAZON EVENTBRIDGE tutorial - Build your SERVERLESS event-driven app with AWS SAM

AWS Tutorials – ETL Pipeline with Multiple Files Ingestion in S3

AWS Tutorials – Building ETL Pipeline using AWS Glue and Step Functions

AWS EventBridge Tutorial | AWS EventBridge Theory and Demo | CloudWatch Events | AWS Tutorials

AWS re:Invent 2022 - [NEW] EventBridge Pipes simplifies connecting event-driven services (API206)

AWS Event-Driven Architecture Explainer Video | Amazon Web Services

AWS re:Invent 2022 - Building Serverlesspresso: Creating event-driven architectures (SVS312)

AWS Tutorials - Methods of Building AWS Glue ETL Pipeline

Intro to AWS - The Most Important Services To Learn

AWS Step Functions + Lambda Tutorial - Step by Step Guide in the Workflow Studio

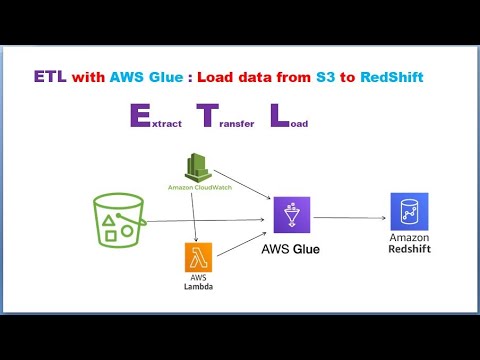

ETL | AWS Glue | AWS S3 | Load Data from AWS S3 to Amazon RedShift

AWS re:Invent 2022 - Building observable applications with OpenTelemetry (BOA310)

Serverless Web Application on AWS [S3, Lambda, SQS, DynamoDB and API Gateway]

Building an Observability Solution with Amazon OpenSearch Service | AWS Events
