
Neural networks [6.2] : Autoencoder - loss function
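The page's topic is the reconstruction loss an autoencoder minimizes. The sketch below illustrates two common choices, under stated assumptions. The first is binary cross-entropy for inputs in [0, 1] with a sigmoid output layer. The second is half the sum of squared errors for real-valued inputs with a linear output layer. These pairings and all function names (`cross_entropy_loss`, `squared_error_loss`, `output_grad`, `numeric_grad`) are hypothetical illustrations, not taken from the video. In both cases the gradient of the loss with respect to the output pre-activations reduces to x̂ − x. The sketch checks this against finite differences.

```python
import math
from typing import Callable, List

Vector = List[float]


def sigmoid(a: float) -> float:
    """Logistic sigmoid, assumed here as the decoder output activation for inputs in [0, 1]."""
    return 1.0 / (1.0 + math.exp(-a))


def cross_entropy_loss(x: Vector, x_hat: Vector) -> float:
    """Binary cross-entropy summed over input units; assumes 0 < x_hat[k] < 1."""
    return -sum(xk * math.log(xh) + (1.0 - xk) * math.log(1.0 - xh)
                for xk, xh in zip(x, x_hat))


def squared_error_loss(x: Vector, x_hat: Vector) -> float:
    """Half the sum of squared differences, for real-valued inputs."""
    return 0.5 * sum((xh - xk) ** 2 for xk, xh in zip(x, x_hat))


def output_grad(x: Vector, x_hat: Vector) -> Vector:
    """Gradient w.r.t. the output pre-activations: x_hat - x.

    Holds for sigmoid output + cross-entropy and for linear output + squared error.
    """
    return [xh - xk for xk, xh in zip(x, x_hat)]


def numeric_grad(f: Callable[[Vector], float], a: Vector, eps: float = 1e-6) -> Vector:
    """Central finite-difference gradient of a scalar function f at point a."""
    grads = []
    for k in range(len(a)):
        plus, minus = list(a), list(a)
        plus[k] += eps
        minus[k] -= eps
        grads.append((f(plus) - f(minus)) / (2.0 * eps))
    return grads


if __name__ == "__main__":
    a = [0.3, -1.2, 2.0]  # hypothetical output-layer pre-activations

    # Binary inputs: sigmoid output, cross-entropy loss.
    x_bin = [1.0, 0.0, 1.0]
    x_hat = [sigmoid(ak) for ak in a]
    print("cross-entropy:", cross_entropy_loss(x_bin, x_hat))
    print("analytic:", output_grad(x_bin, x_hat))
    print("numeric: ", numeric_grad(
        lambda v: cross_entropy_loss(x_bin, [sigmoid(vk) for vk in v]), a))

    # Real-valued inputs: linear output (x_hat = a), squared-error loss.
    x_real = [0.5, -0.2, 1.5]
    print("squared error:", squared_error_loss(x_real, a))
    print("analytic:", output_grad(x_real, a))
    print("numeric: ", numeric_grad(lambda v: squared_error_loss(x_real, v), a))
```

Both pairings yield the same output-layer gradient, x̂ − x. As a result, backpropagation through the decoder can start from one expression whichever of the two losses is used.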


Neural networks [6.2] : Autoencoder - loss function

Neural networks [6.1] : Autoencoder - definition

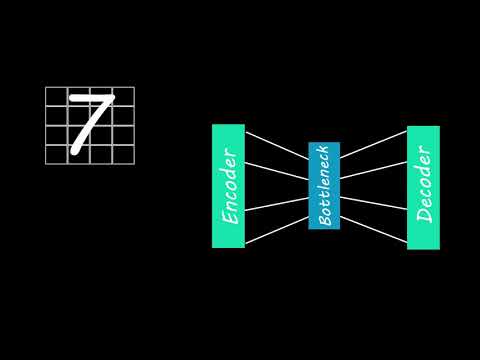

Autoencoders - EXPLAINED

Simple Explanation of AutoEncoders

Neural networks [6.5] : Autoencoder - undercomplete vs. overcomplete hidden layer

Sequence To Sequence Learning With Neural Networks| Encoder And Decoder In-depth Intuition

Autoencoders in MATLAB | Neural Network | @MATLABHelper

What are AutoEncoders in deep learning? - explained

Neural networks [6.7] : Autoencoder - contractive autoencoder

Autoencoders explained — The unsung heroes of the AI world!

MIT 6.S191 (2023): Deep Generative Modeling

Variational Autoencoders

What is an Autoencoder? | Two Minute Papers #86

L16.5 Other Types of Autoencoders

Build an Autoencoder in 5 Min - Fresh Machine Learning #5

Deep Learning Decall Fall 2017 Day 6: Autoencoders and Representation Learning

Autoencoders - Ep. 10 (Deep Learning SIMPLIFIED)

Autoencoders Tutorial | Autoencoders In Deep Learning | Tensorflow Training | Edureka

Neural Networks and Clustering (Autoencoders)

Autoencoders || From Scratch ||Developers Hutt

Neural Network In 5 Minutes | What Is A Neural Network? | How Neural Networks Work | Simplilearn

MIT 6.S191: Deep Generative Modeling

Autoencoders in Keras and TensorFlow for Data Compression and Reconstruction - Neural Networks

Word2Vec vs Autoencoder | NLP | Machine Learning

Комментарии

![Neural networks [6.2]](https://i.ytimg.com/vi/xTU79Zs4XKY/hqdefault.jpg) 0:11:52

0:11:52

![Neural networks [6.1]](https://i.ytimg.com/vi/FzS3tMl4Nsc/hqdefault.jpg) 0:06:15

0:06:15

0:10:53

0:10:53

0:10:31

0:10:31

![Neural networks [6.5]](https://i.ytimg.com/vi/5rLgoM2Pkso/hqdefault.jpg) 0:05:36

0:05:36

0:13:22

0:13:22

0:09:11

0:09:11

0:07:57

0:07:57

![Neural networks [6.7]](https://i.ytimg.com/vi/79sYlJ8Cvlc/hqdefault.jpg) 0:12:08

0:12:08

0:04:51

0:04:51

0:59:52

0:59:52

0:15:05

0:15:05

0:03:50

0:03:50

0:05:34

0:05:34

0:05:38

0:05:38

1:20:51

1:20:51

0:03:52

0:03:52

0:23:58

0:23:58

0:19:06

0:19:06

0:06:21

0:06:21

0:05:45

0:05:45

0:56:19

0:56:19

0:17:54

0:17:54

0:06:43

0:06:43