filmov

tv

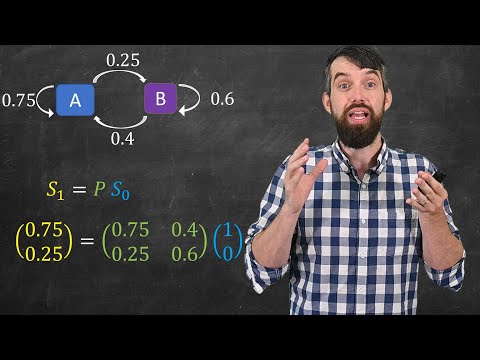

Markov Chains : Data Science Basics

The basics of Markov Chains, one of my ALL TIME FAVORITE objects in data science.

Introducing Markov Chains (0:04:46)

Markov Chains : Data Science Basics (0:10:24)

Markov Chains Clearly Explained! Part - 1 (0:09:24)

Markov Chain Monte Carlo (MCMC) : Data Science Concepts (0:12:11)

What is Markov Chain - Machine Learning & Data Science Terminologies (0:07:36)

Intro to Markov Chains & Transition Diagrams (0:11:25)

The Best Markov Chain Explanation (0:10:11)

Hidden Markov Model : Data Science Concepts (0:13:52)

Avik Das: Dynamics Programming for Machine Learning- Hidden Markov Models | PyData LA 2019 (0:39:25)

Niloy Biswas - Estimating Convergence of Markov Chains with L-Lag Couplings (0:23:33)

GerryChain.jl: detecting gerrymandering with Markov chains | P J Rule, B Suwal, M Sun | JuliaCon2021 (0:03:02)

Markov Chains: n-step Transition Matrix | Part - 3 (0:08:34)

Origin of Markov chains | Journey into information theory | Computer Science | Khan Academy (0:07:15)

Markov Chains & Transition Matrices (0:06:54)

Statistical Methods Applied Maths Data Science: Simulate Discrete-time Markov Chain | packtpub.com (0:06:58)

10.1 Markov chains (0:12:09)

Stateful Structure Streaming and Markov Chains Join Forces to Monitor the Biggest Storage of Physics (0:28:44)

Intro to Markov Chains and Bayesian Inference | Mackenzie Simper (0:48:26)

Markov Chain Stationary Distribution : Data Science Concepts (0:17:33)

Markov Chain Example - How to use Markov Chains in Natural Language Generation (0:06:20)

Markov Chains: Generating Sherlock Holmes Stories | Part - 4 (0:13:28)

Hidden Markov Chains (1:24:52)

Estimating the Mixing Time of Ergodic Markov Chains (0:11:13)

Introduction To Markov Chains | Markov Chains in Python | Edureka (0:26:44)