
Intro to Markov Chains & Transition Diagrams


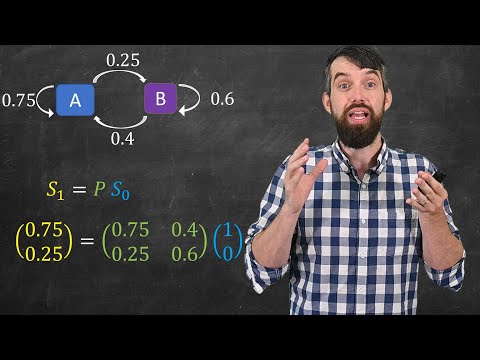

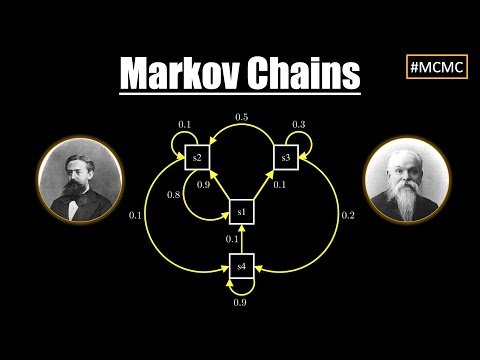

Markov chains, or Markov processes, are an extremely powerful tool from probability and statistics. They model a system that moves between states in repeated steps, where we try to predict the future state of the system. A Markov process is one where the probability of the next state depends ONLY on the present state and ignores the past entirely. This might seem like a big restriction, but what we gain is a lot of power in our computations. We will see how to draw a transition diagram that describes the probabilities of moving between different states, and then do an example where we use a tree diagram to compute the probabilities two stages into the future. We finish with an example looking at bull and bear weeks in the stock market.
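The tree-diagram calculation described above can be sketched in a few lines. This is a minimal sketch, assuming a two-state bull/bear market with hypothetical weekly transition probabilities (they are not the numbers used in the video), and the names `TRANSITIONS` and `two_step_probability` are illustrative only. Because of the Markov property, each branch of the tree is just a product of one-step probabilities, and the two-week probability is the sum over all branches.

```python
# Hypothetical one-week transition probabilities (illustrative, not from the video).
# TRANSITIONS[a][b] is the probability of moving from state a to state b in one week.
TRANSITIONS = {
    "bull": {"bull": 0.9, "bear": 0.1},
    "bear": {"bull": 0.5, "bear": 0.5},
}


def two_step_probability(start, end, transitions=TRANSITIONS):
    """Probability of being in `end` two weeks after `start`.

    Walks every branch of a two-level tree diagram: start -> mid -> end.
    By the Markov property, a branch's probability is the product of its
    one-step probabilities, and the branches are summed.
    """
    total = 0.0
    for mid, p_first in transitions[start].items():
        total += p_first * transitions[mid][end]
    return total


# Bull now, bear in two weeks: 0.9 * 0.1 + 0.1 * 0.5
print(round(two_step_probability("bull", "bear"), 4))  # → 0.14
```

Summing branches by hand works well for two steps, but the number of paths grows quickly with more steps, which is the motivation for the transition-matrix approach.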

Coming Soon: The follow-up video covers using a transition matrix to easily compute probabilities multiple steps into the future.
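As a preview of the transition-matrix idea, here is a minimal sketch that computes n-step probabilities by raising the matrix to the n-th power. It assumes a row convention (row i holds the probabilities of leaving state i, so each row sums to 1) and reuses hypothetical bull/bear numbers; the follow-up video may use a different convention or different values. The helper names `mat_mul` and `n_step` are illustrative.

```python
# States ordered [bull, bear]. Row i gives the probabilities of moving from
# state i to each state next week; each row sums to 1.
# These probabilities are hypothetical, chosen only for illustration.
P = [
    [0.9, 0.1],
    [0.5, 0.5],
]


def mat_mul(a, b):
    """Multiply two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def n_step(p, n):
    """Return P^n: entry [i][j] is the probability of going from i to j in n steps."""
    size = len(p)
    # Start from the identity matrix (zero steps) and multiply by P n times.
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, p)
    return result


two_weeks = n_step(P, 2)
print([[round(x, 4) for x in row] for row in two_weeks])  # → [[0.86, 0.14], [0.7, 0.3]]
```

Each entry of P^2 is exactly the branch sum from a two-level tree diagram, so the matrix power reproduces the tree calculation for any number of steps.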

0:00 Markov Example

2:04 Definition

3:02 Non-Markov Example

4:06 Transition Diagram

5:27 Stock Market Example

COURSE PLAYLISTS:

OTHER PLAYLISTS:

► Learning Math Series:

► Cool Math Series:

BECOME A MEMBER:

Special thanks to Imaginary Fan members Frank Dearr & Cameron Lowes for supporting this video.

MATH BOOKS & MERCH I LOVE:

SOCIALS:

