
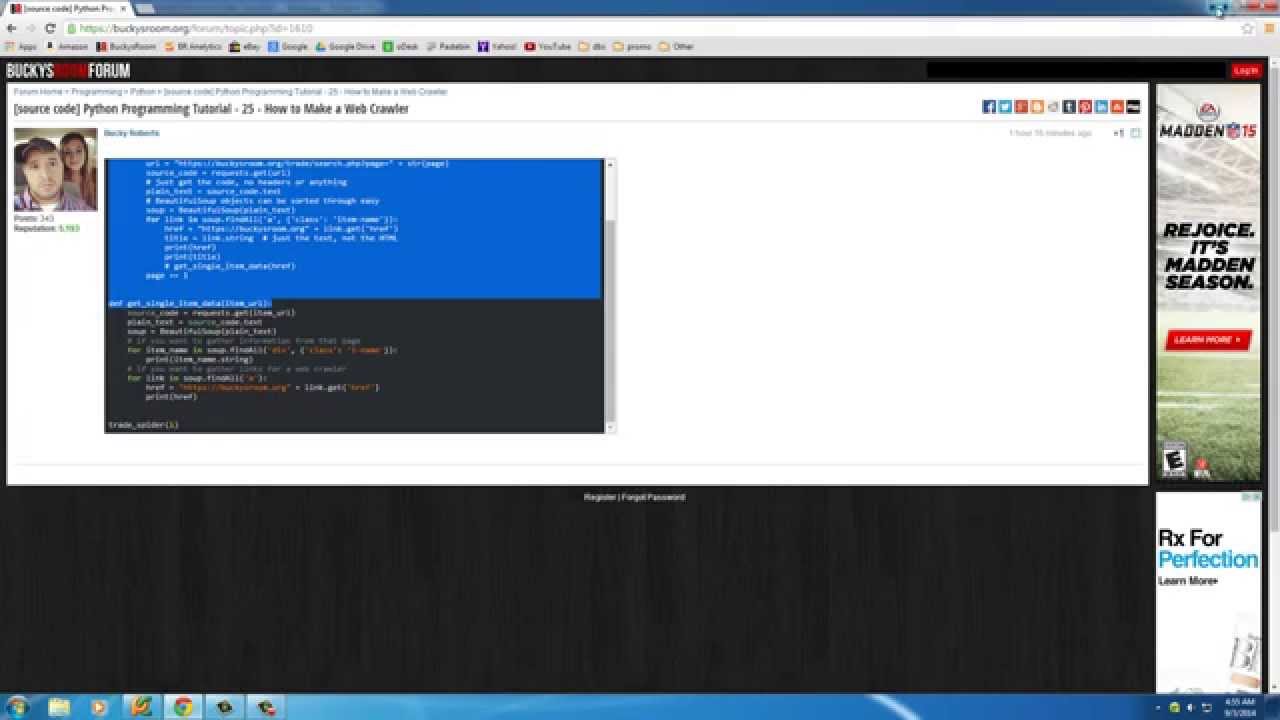

Python Programming Tutorial - 27 - How to Build a Web Crawler (3/3)


Python Tutorial - 27. Multiprocessing Introduction (0:08:17)

Python Programming Tutorial - 27 - How to Build a Web Crawler (3/3) (0:11:21)

Python Programming Tutorial - 27: String Functions (Part-2) (0:05:30)

Python Tutorial for Beginners 27 - Python Encapsulation (0:11:36)

Try / Except | Python | Tutorial 27 (0:08:43)

Python Tutorial 27 - Packages in Python (0:25:34)

#27 Python Tutorial for Beginners | Array values from User in Python | Search in Array (0:10:02)

P_27 Coding Exercise for Beginners in Python | Exercise 7 | Python Tutorials for Beginners (0:06:57)

Live with Reuven Lerner: How to Sort Anything with Python (0:46:27)

Learn Python Programming Tutorial Online Training by Durga Sir On 27-01-2018 (1:06:48)

Python Full Course for free 🐍 (2024) (12:00:00)

Python Functions || Python Tutorial || Learn Python Programming (0:09:28)

Remove Element - Leetcode 27 - Python (0:09:05)

Python 3 Tutorial for Beginners #27 - Writing Files (0:06:32)

Transfer Learning | Deep Learning Tutorial 27 (Tensorflow, Keras & Python) (0:25:55)

Learn Python - Full Course for Beginners [Tutorial] (4:26:52)

Python for Beginners – Full Course [Programming Tutorial] (4:40:00)

Python Programming Tutorial - 8 - for (0:07:09)

Python Programming Tutorial - 17 - Flexible Number of Arguments (0:03:38)

Python for Beginners - Learn Python in 1 Hour (1:00:06)

Iterators, Iterables, and Itertools in Python || Python Tutorial || Learn Python Programming (0:09:09)

Selenium with Python Tutorial 27-Working with Cookies (0:16:54)

The complete guide to Python (11:08:59)

Pygame (Python Game Development) Tutorial - 27 - Centering Text (0:06:24)