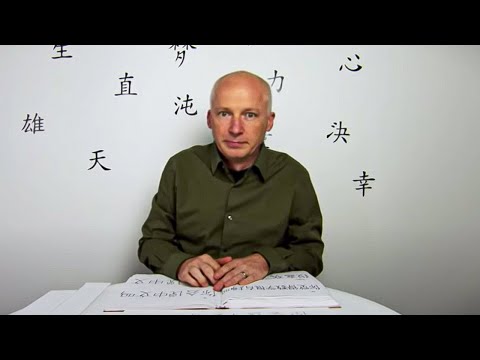

What Does The Chinese Room Argument Actually Prove? w/ Dr. Jim Madden

The Chinese Room argument is often taken to refute the theory that human minds are computer-like. Dr. Jim briefly explains the argument while outlining what he takes its limitations to be.

Please like, comment, share, and subscribe.

The Chinese Room - 60-Second Adventures in Thought (3/6)

The Chinese Room Experiment | The Hunt for AI | BBC Studios

Why does the Chinese Room still haunt AI?

John Searle's Chinese Room Thought Experiment

The famous Chinese Room thought experiment - John Searle (1980)

John Searle, The Chinese Room and Strong AI

Artificial Intelligence & Personhood: Crash Course Philosophy #23

Does AI truly understand? The Chinese room argument

Joscha Bach on The Chinese Room Experiment

Thinking Machines & the Chinese Room Argument

The Chinese Room Thought Experiment

What is the Chinese Room Argument?

Consciousness, Computers and the Chinese Room

Chinese Room Replies

The Chinese Room Argument: Can Machine Fool Us?

Functionalism and John Searle's Chinese Room Argument - Philosophy of Mind III

CONSCIOUSNESS IN THE CHINESE ROOM

Chat GPT and the Chinese Room

The Chinese Room Argument: Can AI Understand Meaning?

The Great Debate: Large Language Models and the Chinese Room Experiment

The Chinese Room

The Chinese Room: A Thought Experiment in AI!!!

The Turing test: Can a computer pass for a human? - Alex Gendler
