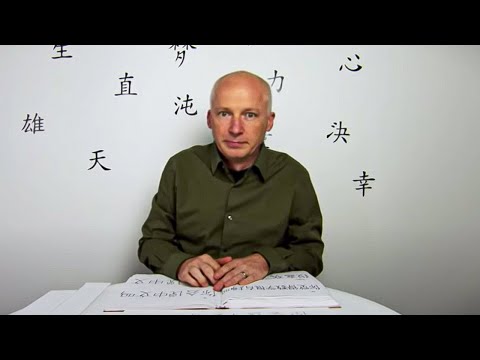

Chinese Room Replies

This is the second part of a two-part series on John Searle's famous Chinese Room argument against "Strong AI."

The Chinese Room - 60-Second Adventures in Thought (3/6)

The Chinese Room Experiment | The Hunt for AI | BBC Studios

The famous Chinese Room thought experiment - John Searle (1980)

What Does The Chinese Room Argument Actually Prove? w/ Dr. Jim Madden

John Searle's Chinese Room Thought Experiment

The Chinese Room Thought Experiment

Why does the Chinese Room still haunt AI?

Does AI truly understand? The Chinese room argument

John Searle, The Chinese Room and Strong AI

Thinking Machines & the Chinese Room Argument

Can computers think? The Chinese Room Argument

AI and Society: 09c. The Chinese Room argument

Problems with the Chinese Room Argument

Thinking Machines: Searle's Chinese Room Argument (1984)

Can AI Understand ? Computer vs Human Intelligence #chinese #room #experiment #ai

Chinese Room Experiment argues against artificial intelligence #springonshorts

Searle's Chinese Room Counter Argument to Turing/Strong AI

Passing the Turing Test: The Chinese Room | Minds and Machines | Dr. Josh Redstone

What is the Chinese Room Argument?

Functionalism and John Searle's Chinese Room Argument - Philosophy of Mind III

The Chinese Room Argument: Can Machine Fool Us?

Joscha Bach on The Chinese Room Experiment

Artificial Intelligence & Personhood: Crash Course Philosophy #23
