
Key Value Cache in Large Language Models Explained


In this video, we explain why the key-value (KV) cache matters for the inference performance of transformer architectures. We start with the fundamental concepts behind attention, the core mechanism of transformer models. Attention lets a model weigh the importance of different input tokens, so it can focus on the relevant information while processing a sequence.
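To make the attention idea concrete, here is a minimal pure-Python sketch of scaled dot-product attention for a single query. It is an illustration, not the code from the video: the names `softmax` and `attention` are our own, and real models work on batched tensors with learned projections and multiple heads.

```python
import math


def softmax(scores):
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    query: list of d floats; keys: n vectors of length d;
    values: n vectors, all of the same length.
    Returns the softmax-weighted average of the value vectors.
    """
    d = len(query)
    # One similarity score per input token, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim_v = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]


# The query is closer to the first key, so the output leans toward the first value.
print(attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

The attention weights sum to 1, so the output is always a weighted average of the value vectors.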

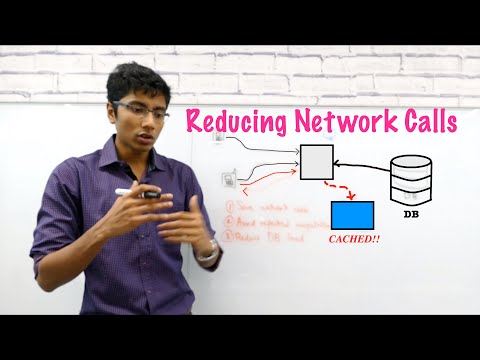

Next, we delve into the KV cache mechanism and its significance in transformer architectures. During autoregressive generation, the KV cache stores the key and value vectors already computed for earlier tokens and reuses them at every subsequent decoding step, so each step only has to compute the key and value for the newest token. This removes redundant computation, at the cost of extra memory that grows with the sequence length.
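The caching step can be sketched as follows, assuming a single attention head, toy projection matrices stored as nested lists, and hypothetical names (`KVCache`, `decode_step`, `matvec`, `attend`) that come from neither the video nor any library. Each call projects only the new token and appends its key and value to the cache; earlier tokens are never re-projected.

```python
import math


def matvec(W, x):
    # Multiply a matrix (a list of rows) by a vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def attend(q, keys, values):
    # Scaled dot-product attention of one query over all cached positions.
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(wi * v[j] for wi, v in zip(w, values)) / z for j in range(len(values[0]))]


class KVCache:
    """Hypothetical cache holding one key and one value vector per processed token."""

    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, k, v):
        self.keys.append(k)
        self.values.append(v)


def decode_step(x, cache, Wq, Wk, Wv):
    """Process one new token embedding x, reusing cached keys and values."""
    # Only the newest token is projected; past keys/values come from the cache.
    q, k, v = matvec(Wq, x), matvec(Wk, x), matvec(Wv, x)
    cache.append(k, v)
    return attend(q, cache.keys, cache.values)


I = [[1.0, 0.0], [0.0, 1.0]]  # identity projections, for illustration only
cache = KVCache()
for x in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0]):
    out = decode_step(x, cache, I, I, I)
print(len(cache.keys))  # 3: one cached key per processed token
```

The cache grows by one key and one value per token, which is where the extra memory cost comes from.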

We dissect code snippets to compare implementations with and without a KV cache, highlighting the computational differences and the efficiency gains that caching brings. By analyzing the code, we see how the KV cache streamlines computation and leads to faster inference.
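The difference can be illustrated by counting key/value projections. This is a hypothetical sketch, not the video's code: for simplicity the input embeddings `xs` are fixed in advance rather than produced by sampling, and each token's key-plus-value projection counts as one unit. Without a cache, step t re-projects all t tokens seen so far, giving n(n+1)/2 projections over n steps; with a cache, each token is projected once, giving n.

```python
import math


def matvec(W, x):
    # Multiply a matrix (a list of rows) by a vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def attend(q, keys, values):
    # Scaled dot-product attention of one query over the given positions.
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(wi * v[j] for wi, v in zip(w, values)) / z for j in range(len(values[0]))]


def generate_without_cache(xs, Wq, Wk, Wv):
    """At step t, recompute keys and values for all t tokens seen so far."""
    outputs, kv_projections = [], 0
    for t in range(1, len(xs) + 1):
        prefix = xs[:t]
        keys = [matvec(Wk, x) for x in prefix]
        values = [matvec(Wv, x) for x in prefix]
        kv_projections += t
        outputs.append(attend(matvec(Wq, xs[t - 1]), keys, values))
    return outputs, kv_projections


def generate_with_cache(xs, Wq, Wk, Wv):
    """Compute each token's key and value once and append them to the cache."""
    keys, values, outputs, kv_projections = [], [], [], 0
    for x in xs:
        keys.append(matvec(Wk, x))
        values.append(matvec(Wv, x))
        kv_projections += 1
        outputs.append(attend(matvec(Wq, x), keys, values))
    return outputs, kv_projections


W = [[0.5, -0.2], [0.1, 0.9]]  # toy weights shared by Q, K and V
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
out_a, n_without = generate_without_cache(xs, W, W, W)
out_b, n_with = generate_with_cache(xs, W, W, W)
print(out_a == out_b)      # True
print(n_without, n_with)   # 10 4
```

Both versions produce the same outputs; the cache only removes the repeated projection work, and the gap widens quadratically as the sequence grows.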


