LLM Jargons Explained: Part 4 - KV Cache

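In this video, I explore the mechanics of the KV cache (short for key-value cache) and why it matters in modern LLM systems. I discuss how it speeds up inference by storing the key and value vectors of previously processed tokens, so they are not recomputed at every decoding step. I also cover common implementation strategies and the challenges the cache presents.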
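To make the idea concrete, here is a minimal sketch, not the implementation from the video. It assumes a single attention head in a single layer, no batching, and plain Python lists instead of tensors. The names `KVCache`, `decode_with_cache` and `decode_without_cache` are hypothetical. Both decoders compute the same causal attention outputs. The cached version projects keys and values once per token, while the uncached version re-projects the entire prefix at every step.

```python
import math
import random

# Minimal sketch (assumption): one head, one layer, pure-Python lists.
# All names here are hypothetical and chosen for illustration only.


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [dot(row, x) for row in W]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, keys, values):
    """Scaled dot-product attention of one query over the given keys/values."""
    scale = math.sqrt(len(q))
    weights = softmax([dot(q, k) / scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


class KVCache:
    """Hypothetical cache holding one key and one value vector per past token."""

    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, k, v):
        self.keys.append(k)
        self.values.append(v)


def decode_with_cache(xs, Wq, Wk, Wv):
    """Process tokens one at a time, projecting K/V only for the newest token."""
    cache = KVCache()
    outputs, kv_projections = [], 0
    for x in xs:
        cache.append(matvec(Wk, x), matvec(Wv, x))  # new token's K/V only
        kv_projections += 1
        q = matvec(Wq, x)  # only the newest token needs a query
        outputs.append(attend(q, cache.keys, cache.values))
    return outputs, kv_projections


def decode_without_cache(xs, Wq, Wk, Wv):
    """Same causal attention, but recompute K/V for the whole prefix each step."""
    outputs, kv_projections = [], 0
    for t in range(len(xs)):
        prefix = xs[: t + 1]
        keys = [matvec(Wk, x) for x in prefix]  # recomputed every step
        values = [matvec(Wv, x) for x in prefix]
        kv_projections += len(prefix)
        q = matvec(Wq, xs[t])
        outputs.append(attend(q, keys, values))
    return outputs, kv_projections


def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
d, n = 4, 5
Wq, Wk, Wv = rand_matrix(d, d), rand_matrix(d, d), rand_matrix(d, d)
xs = rand_matrix(n, d)  # n token embeddings of width d

cached, cached_ops = decode_with_cache(xs, Wq, Wk, Wv)
full, full_ops = decode_without_cache(xs, Wq, Wk, Wv)
print(cached_ops, full_ops)  # 5 15
```

With the cache, the number of key/value projections grows linearly with the number of tokens (n). Without it, the count grows quadratically (n(n+1)/2). The price is memory: the cache keeps one key and one value vector per past token, and a real model stores them for every layer and head.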

LLM Jargons Explained: Part 4 - KV Cache

LLM Jargons Explained

LLM Jargons Explained: Part 5 - PagedAttention Explained

LLM Explained | What is LLM

LLM Jargons Explained: Part 2 - Multi Query & Group Query Attent

LLM Jargons Explained: Part 3 - Sliding Window Attention

LLM Explained | Common LLM Terms You Should Know | KodeKloud

Transformers, explained: Understand the model behind GPT, BERT, and T5

Markov Chains: Generating Sherlock Holmes Stories | Part - 4

How AIs, like ChatGPT, Learn

what it’s like to work at GOOGLE…

Search Engine + GraphRAG + LLM Agents = AI-powered Smart Search

Neural Network In 5 Minutes | What Is A Neural Network? | How Neural Networks Work | Simplilearn

But what is a neural network? | Chapter 1, Deep learning

Using ChatGPT to generate a research dissertation and thesis. It is our research writing assistant.

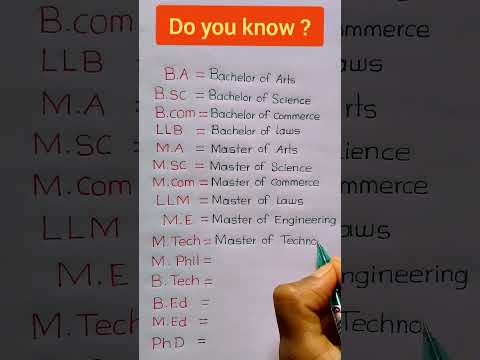

B.A/B.Sc/B.Com/LLB/M.A/M.sc/M.com/LLM/M.E/M.Tech/PhD Full Form

My Jobs Before I was a Project Manager

Mastering AI Jargon - Your Guide to OpenAI & LLM Terms

Risks of Large Language Models (LLM)

Why Large Language Models Hallucinate

How do our brains process speech? - Gareth Gaskell

Programming Language Tier List

Natural Language Processing In 5 Minutes | What Is NLP And How Does It Work? | Simplilearn

AI inbreeding ouroboros #language #linguistics #ai #llm #chatgpt #openai #etymology
