
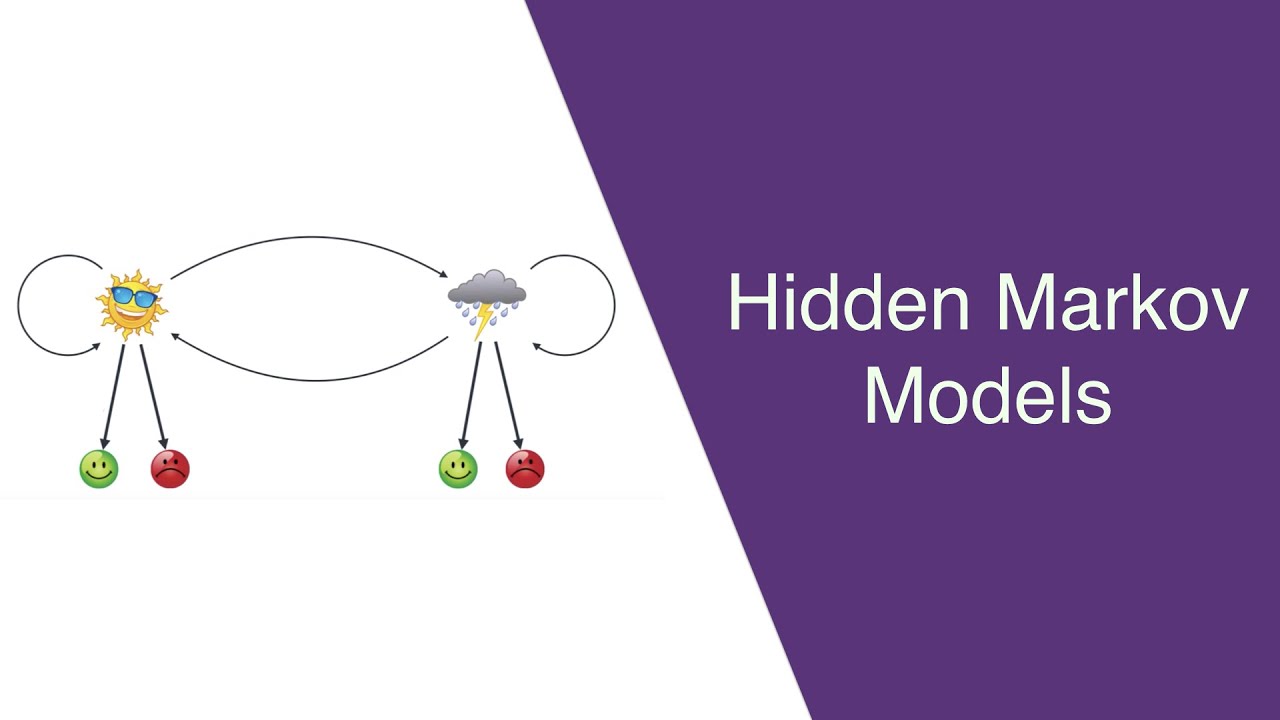

A friendly introduction to Bayes Theorem and Hidden Markov Models


40% discount code: serranoyt

A friendly introduction to Bayes Theorem and Hidden Markov Models, with simple examples. No background knowledge needed, except basic probability.
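This page does not include the simple examples the description mentions. The following is a minimal Python sketch of the two ideas named in the title, offered only as an illustration. It assumes a hypothetical two-state model: hidden weather (sunny or rainy) and an observed mood (happy or grumpy). Every probability and name here (`START`, `TRANSITION`, `EMISSION`, `posterior_weather`, `viterbi`) is an illustrative assumption, not a value or function taken from the video or its notebook. `posterior_weather` applies Bayes' theorem to a single day. `viterbi` finds the most likely hidden weather sequence for a run of observed moods.

```python
# Hypothetical two-state HMM: hidden weather, observed mood.
# All probabilities below are illustrative assumptions.
STATES = ("sunny", "rainy")
START = {"sunny": 2 / 3, "rainy": 1 / 3}  # assumed prior P(weather)
TRANSITION = {  # assumed P(tomorrow's weather | today's weather)
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}
EMISSION = {  # assumed P(mood | weather)
    "sunny": {"happy": 0.8, "grumpy": 0.2},
    "rainy": {"happy": 0.4, "grumpy": 0.6},
}


def posterior_weather(mood: str) -> dict:
    """Bayes' theorem for one day: P(weather | mood) is proportional to P(mood | weather) * P(weather)."""
    joint = {s: EMISSION[s][mood] * START[s] for s in STATES}
    evidence = sum(joint.values())  # P(mood), the normalizing constant
    return {s: p / evidence for s, p in joint.items()}


def viterbi(moods: list) -> tuple:
    """Return the most likely weather sequence for the observed moods and its probability."""
    # best[s] = probability of the best path that ends in state s on the current day
    best = {s: START[s] * EMISSION[s][moods[0]] for s in STATES}
    back = []  # back[t][s] = best previous state for state s on day t + 1
    for mood in moods[1:]:
        pointers, new_best = {}, {}
        for s in STATES:
            prev = max(STATES, key=lambda p: best[p] * TRANSITION[p][s])
            pointers[s] = prev
            new_best[s] = best[prev] * TRANSITION[prev][s] * EMISSION[s][mood]
        back.append(pointers)
        best = new_best
    # Trace the back-pointers from the most likely final state.
    last = max(STATES, key=lambda s: best[s])
    path = [last]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]


print(posterior_weather("happy"))
print(viterbi(["grumpy", "grumpy", "grumpy"]))
```

Under these assumed numbers, seeing a happy mood raises the belief in sunny weather from 2/3 to 0.8, and three grumpy days in a row decode to three rainy days. Viterbi keeps only the best path into each state per day, so its cost grows linearly with the sequence length instead of exponentially.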

Accompanying notebook:


A friendly introduction to Bayes Theorem and Hidden Markov Models

Bayes theorem, the geometry of changing beliefs

A Friendly Introduction to Machine Learning

Naive Bayes classifier: A friendly approach

You Know I'm All About that Bayes: Crash Course Statistics #24

Bayes' Theorem - The Simplest Case

Everything You Ever Wanted to Know About Bayes' Theorem But Were Afraid To Ask.

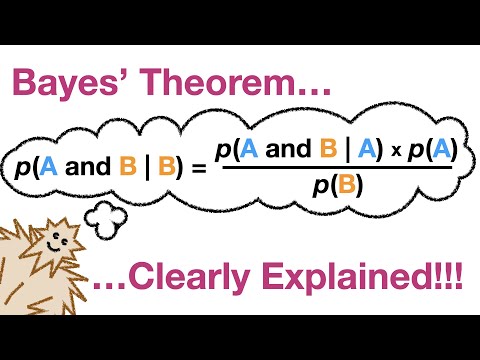

Bayes' Theorem, Clearly Explained!!!!

Hidden Markov Model Clearly Explained! Part - 5

How Bayes Theorem works

Bayes' Theorem of Probability With Tree Diagrams & Venn Diagrams

undergraduate machine learning 5: Introduction to Bayes

Bayesian Statistics: An Introduction

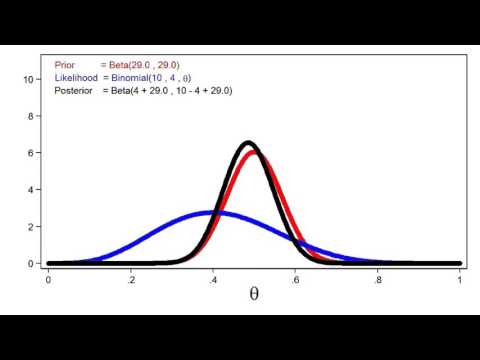

Introduction to Bayesian statistics, part 1: The basic concepts

Introduction to Bayesian data analysis - part 1: What is Bayes?

A Friendly Introduction to Generative Adversarial Networks (GANs)

Bayes Rule Intro

Introduction to Bayesian Statistics - A Beginner's Guide

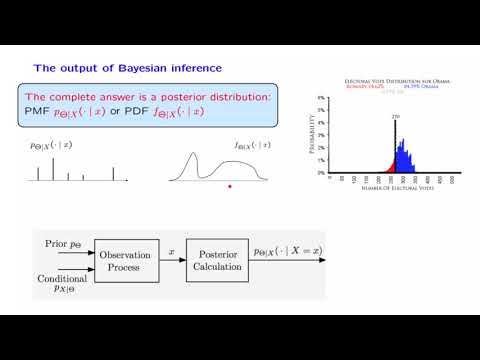

L14.4 The Bayesian Inference Framework

Naive Bayes, Clearly Explained!!!

Bayesian Networks

Introduction to Bayesian data analysis - Part 2: Why use Bayes?

Gentle Bayesian updating | David Manley | EA Student Summit 2020

A Short Introduction to Bayesian Statistics

Комментарии

0:32:46

0:32:46

0:15:11

0:15:11

0:30:49

0:30:49

0:20:29

0:20:29

0:12:05

0:12:05

0:05:31

0:05:31

0:05:48

0:05:48

0:14:00

0:14:00

0:09:32

0:09:32

0:25:09

0:25:09

0:19:14

0:19:14

0:09:30

0:09:30

0:38:19

0:38:19

0:09:12

0:09:12

0:29:30

0:29:30

0:21:01

0:21:01

0:00:25

0:00:25

1:18:47

1:18:47

0:09:48

0:09:48

0:15:12

0:15:12

0:39:57

0:39:57

0:23:00

0:23:00

0:27:05

0:27:05

0:12:34

0:12:34