Bayesian Networks

CS5804 Virginia Tech

Introduction to Artificial Intelligence

1 What is a Bayesian network

What is a Bayesian network?

Bayesian Networks

Introduction to Bayesian Networks | Implement Bayesian Networks In Python | Edureka

17 Probabilistic Graphical Models and Bayesian Networks

Bayesian Network with Examples | Easiest Explanation

1. Bayesian Belief Network | BBN | Solved Numerical Example | Burglar Alarm System by Mahesh Huddar

Explaining Bayesian Networks and Building an AI Project with Python on Customer Purchase or not?

Section 5: Probability, Bayes Nets

The Bayesian Trap

Machine Learning #29 - Bayes'sche Netze

Bayes theorem, the geometry of changing beliefs

Bayesian Network | Introduction and Workshop

Bayesian Networks 2 - Definition | Stanford CS221: AI (Autumn 2021)

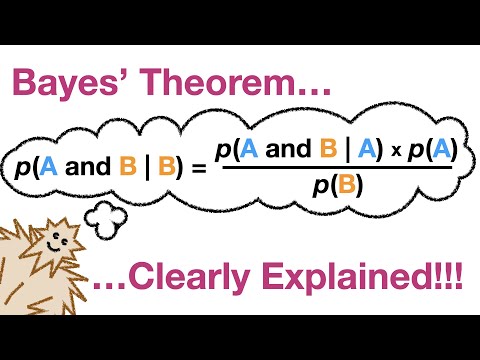

Bayes' Theorem, Clearly Explained!!!!

Bayesian networks for healthcare data: what are they and why they work when ‘big data’ methods fail...

Bayesian networks: what are they and why they work when ‘big data’ methods fail

Bayesian network - Artificial Intelligence - Unit - IV

#45 Bayesian Belief Networks - DAG & CPT With Example |ML|

Simple introduction to Bayesian Networks with the classic ‘Asia’ model

Bayesian Networks: Syntax

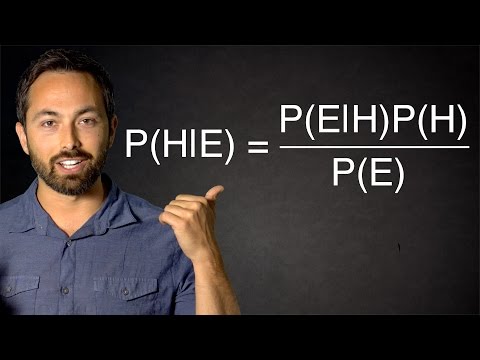

Naive Bayes, Clearly Explained!!!

QGeNIe - A Link Between Qualitative and Quantitative Bayesian Networks [ABNMS2020]

Using Bayesian Networks to Analyse Data

Комментарии

0:02:14

0:02:14

0:03:04

0:03:04

0:39:57

0:39:57

0:29:35

0:29:35

0:30:03

0:30:03

0:09:54

0:09:54

0:11:16

0:11:16

0:10:02

0:10:02

0:11:24

0:11:24

0:10:37

0:10:37

0:07:46

0:07:46

0:15:11

0:15:11

0:24:22

0:24:22

0:28:40

0:28:40

0:14:00

0:14:00

0:41:00

0:41:00

0:45:19

0:45:19

0:24:40

0:24:40

0:14:41

0:14:41

0:05:55

0:05:55

0:21:17

0:21:17

0:15:12

0:15:12

0:16:58

0:16:58

0:03:41

0:03:41