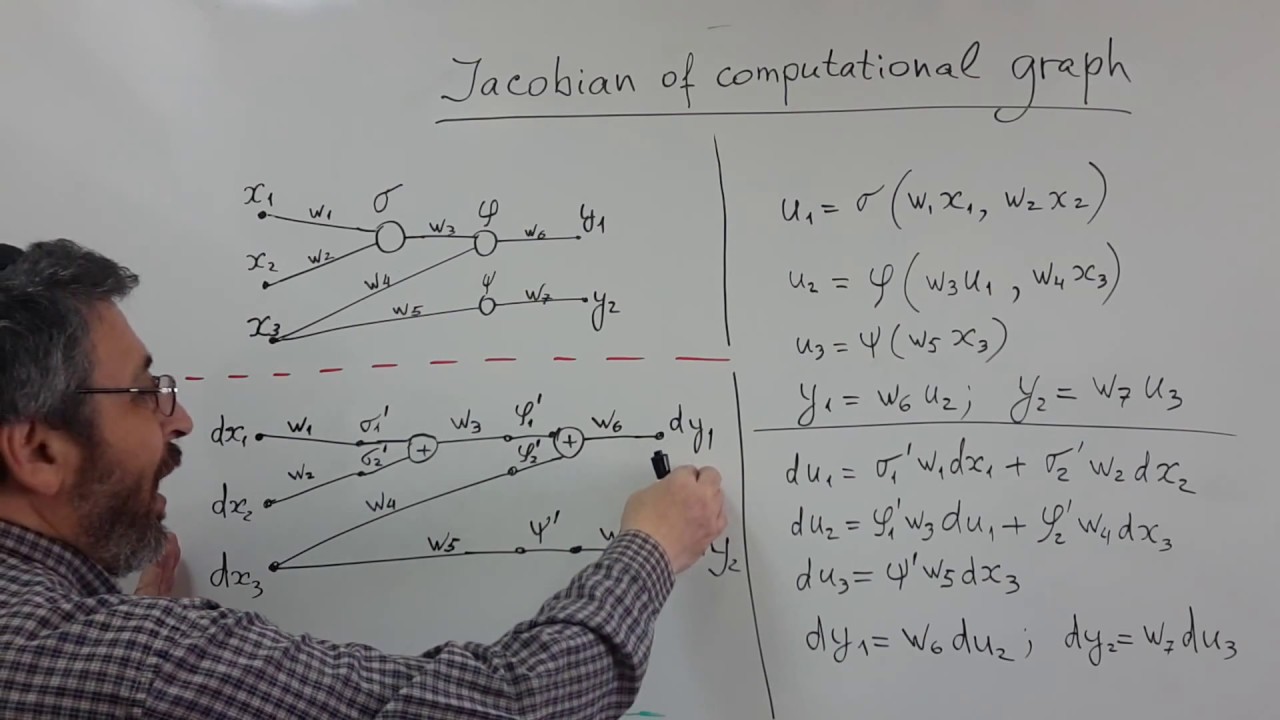

Easy way to compute Jacobian and gradient with forward and back propagation in graph

Before watching, you can refresh the notion of the differential with my other video:

Lecture 2-3: Gradient and Hessian of Multivariate Function

What is Jacobian? | The right way of thinking derivatives and integrals

The Jacobian

Jacobian| jacobian transformation|differential calculus

Computing a Jacobian matrix

Change of Variables and the Jacobian

How to find Jacobian Matrix? | Solved Examples | Robotics 101

Change of Variables & The Jacobian | Multi-variable Integration

parameterization of surface in calculus - 3D Jacobian d(x, y, z) /d(u, v, w) part 8

The Jacobian matrix

How to Compute the Jacobian of a Robot Manipulator: A Numerical Example | Robotic Systems

The Jacobian Matrix

Linear Algebra: Jacobian Determinant

Jacobin theorem(engineering mathematics)

Oxford Calculus: Jacobians Explained

How REAL Men Integrate Functions

Double Integral through a Change of Coordinates (the Jacobian)

Example -- Forward Kinematics and Jacobian Matrix

Jacobian - how it works

5 simple unsolvable equations

Calculus: How to Find the Jacobian of the Transformation. [HD]

Robotics 2 U1 (Kinematics) S3 (Jacobian Matrix) P2 (Finding the Jacobian)

#jacobian

Jacobian of the transformation (2x2) (KristaKingMath)
