Advanced RAG with ColBERT in LangChain and LlamaIndex

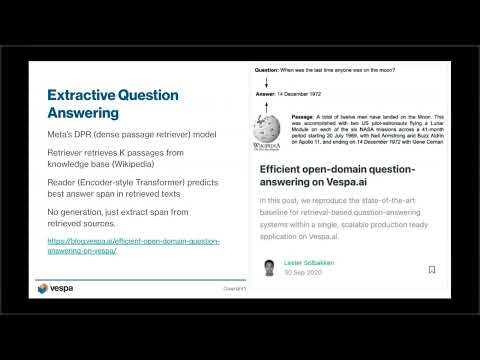

ColBERT is a fast and accurate retrieval model that enables scalable BERT-based search over large text collections in tens of milliseconds. It can serve as an alternative to dense embeddings in retrieval-augmented generation (RAG). In this video, we explore using ColBERTv2 with RAGatouille and compare it with OpenAI embedding models.
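To make the contrast with dense embeddings concrete, here is a minimal sketch of the late-interaction ("MaxSim") scoring idea behind ColBERT, next to a single-vector baseline. It is not the ColBERTv2 or RAGatouille implementation: the 2-d "token embeddings" are made up for illustration, the function names are hypothetical, and mean pooling is assumed as a stand-in for a dense embedding model.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def maxsim_score(query_tokens, doc_tokens):
    """Late interaction: for each query token, take its best cosine match
    among the document tokens, then sum those maxima."""
    q = [normalize(v) for v in query_tokens]
    d = [normalize(v) for v in doc_tokens]
    return sum(max(dot(qv, dv) for dv in d) for qv in q)

def pooled_score(query_tokens, doc_tokens):
    """Single-vector baseline (assumption: mean pooling), then cosine similarity."""
    def mean_pool(tokens):
        n = len(tokens)
        return [sum(col) / n for col in zip(*tokens)]
    return dot(normalize(mean_pool(query_tokens)), normalize(mean_pool(doc_tokens)))

# Toy per-token embeddings, invented for this example.
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # matches both query tokens, plus one unrelated token
doc_b = [[1.0, 1.0]]                            # a single token halfway between the query tokens

for name, doc in [("doc_a", doc_a), ("doc_b", doc_b)]:
    print(name, "maxsim:", round(maxsim_score(query, doc), 3),
          "pooled:", round(pooled_score(query, doc), 3))
```

In this toy setup, MaxSim ranks `doc_a` first because every query token finds an exact match, while the pooled baseline prefers `doc_b` because averaging blurs the token-level matches. A real system would produce the token vectors with a trained encoder and search a compressed index rather than scoring every document exhaustively.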

TIMESTAMPS:

[00:00] Introduction

[00:29] Use ColBERT in LangChain

[08:46] Use ColBERT in LlamaIndex
