Developing and Serving RAG-Based LLM Applications in Production


There are a lot of different moving pieces when it comes to developing and serving LLM applications. This talk will provide a comprehensive guide for developing retrieval augmented generation (RAG) based LLM applications — with a focus on scale (embed, index, serve, etc.), evaluation (component-wise and overall) and production workflows. We’ll also explore more advanced topics such as hybrid routing to close the gap between OSS and closed LLMs.
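As a rough illustration of the embed, index, and retrieve stages mentioned above, here is a minimal sketch in plain Python. It is not the speakers' implementation: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the in-memory list stands in for a vector database, and every function name and sample sentence is hypothetical. The final prompt would normally be sent to an LLM, which is omitted here.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding (assumption): word counts instead of a learned vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks):
    """Index step: store each chunk next to its embedding."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index, query, k=2):
    """Retrieval step: return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query, context_chunks):
    """Augmentation step: put the retrieved context in front of the question."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Made-up sample corpus, for illustration only.
chunks = [
    "Ray Serve deploys models behind an HTTP endpoint.",
    "Ray Data runs batch embedding jobs in parallel.",
    "Cats sleep for most of the day.",
]
index = build_index(chunks)
query = "How do I run embedding jobs in parallel?"
top = retrieve(index, query, k=1)
prompt = build_prompt(query, top)
print(prompt)
```

In a production setup each stage would be swapped for a scalable component (a batch embedding job, a vector store, a served model), but the data flow between the stages stays the same.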

Takeaways:

• Evaluating RAG-based LLM applications is crucial for identifying and productionizing the best configuration.

• Developing your LLM application with scalable workloads involves minimal changes to existing code.

• Mixture of Experts (MoE) routing allows you to close the gap between OSS and closed LLMs.

About Anyscale

---

Anyscale is the AI Application Platform for developing, running, and scaling AI.

If you're interested in a managed Ray service, check out:

About Ray

---

Ray is the most popular open source framework for scaling and productionizing AI workloads. From Generative AI and LLMs to computer vision, Ray powers the world’s most ambitious AI workloads.

#llm #machinelearning #ray #deeplearning #distributedsystems #python #genai

Developing and Serving RAG-Based LLM Applications in Production

What is Retrieval-Augmented Generation (RAG)?

Build a RAG Based LLM App in 20 Minutes! | Full Langflow Tutorial

Building Production-Ready RAG Applications: Jerry Liu

Building a RAG Based LLM App And Deploying It In 20 Minutes

Building RAG-based LLM Applications for Production // Philipp Moritz & Yifei Feng // LLMs III Ta...

AutoLLM: Create RAG Based LLM Web Apps in SECONDS!

Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer

Building RAG apps with Azure Cosmos DB for MongoDB

Building Multimodal AI RAG with LlamaIndex, NVIDIA NIM, and Milvus | LLM App Development

Step-by-Step Guide to Building a RAG LLM App with LLamA2 and LLaMAindex

'I want Llama3 to perform 10x with my private knowledge' - Local Agentic RAG w/ llama3

Build your own RAG (retrieval augmented generation) AI Chatbot using Python | Simple walkthrough

LLM Application Development - Tutorial 3 - RAG

LLM Explained | What is LLM

How Large Language Models Work

RAG Explained

Retrieval Augmented Generation (RAG) | Embedding Model, Vector Database, LangChain, LLM

Lessons Learned on LLM RAG Solutions

Deploy LLM App as API Using Langserve Langchain

How to evaluate an LLM-powered RAG application automatically.

Retrieval Augmented Generation (RAG): Boosting LLM Performance with External Knowledge

RAG + Langchain Python Project: Easy AI/Chat For Your Docs

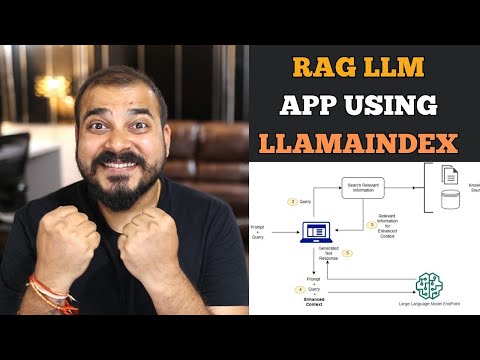

End to end RAG LLM App Using Llamaindex and OpenAI- Indexing and Querying Multiple pdf's
