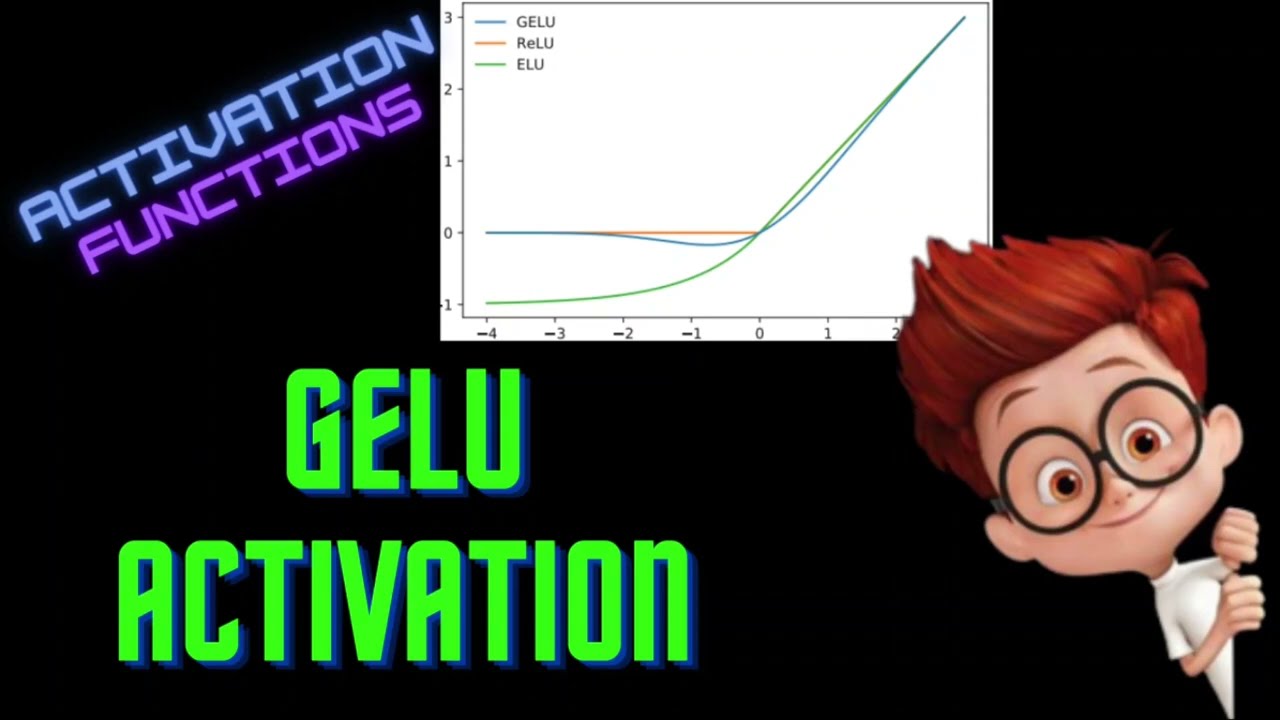

Neural Networks From Scratch - Lec 15 - GeLU Activation Function

Building Neural Networks from scratch in Python.

This is the fifteenth video of the course "Neural Networks From Scratch". It covers the GeLU activation function and its intuition in detail. We look at the derivative of GeLU and discuss the advantages and disadvantages of using it. We then compare the performance of GeLU against the ReLU and ELU activation functions and finish with a Python implementation.
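For reference, the commonly cited definition of GeLU and its derivative are written out below. This page does not state the formulas, so treat this as the standard textbook form rather than a transcript of the video; the symbols $\Phi$ (standard normal CDF) and $\phi$ (standard normal PDF) are notation chosen here.

$$
\mathrm{GeLU}(x) = x\,\Phi(x) = \frac{x}{2}\left[1 + \operatorname{erf}\!\left(\frac{x}{\sqrt{2}}\right)\right]
$$

$$
\frac{d}{dx}\,\mathrm{GeLU}(x) = \Phi(x) + x\,\phi(x), \qquad \phi(x) = \frac{1}{\sqrt{2\pi}}\,e^{-x^{2}/2}
$$

The derivative follows from the product rule, since $\Phi'(x) = \phi(x)$.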

Neural Networks From Scratch Playlist:

Activation Functions Playlist:

GeLU Activation:

Please like and subscribe to the channel for more videos. This will help me in assessing your interests and creating more content. Thank you!

Chapters:

0:00 Introduction

0:14 Motivation

1:18 Intuition & Deriving GeLU

5:35 Definition of GeLU

6:30 Derivative of GeLU

7:04 Performance comparison

7:38 Python Implementation
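The definition, derivative, and implementation chapters above can be illustrated with a minimal sketch. This is not the code from the video: the function names `gelu`, `gelu_tanh`, and `gelu_grad` are hypothetical, and the sketch assumes the standard definition GeLU(x) = x * Phi(x), with Phi the standard normal CDF, together with the widely used tanh approximation. It uses only the Python standard library.

```python
import math


def gelu(x: float) -> float:
    """Exact GeLU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Common tanh approximation of GeLU (assumed form, not taken from the video)."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


def gelu_grad(x: float) -> float:
    """Derivative of exact GeLU: Phi(x) + x * phi(x), with phi the normal PDF."""
    cdf = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return cdf + x * pdf


if __name__ == "__main__":
    # Print the exact value, the approximation, and the gradient at a few points.
    for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
        print(f"x={x:+.1f}  gelu={gelu(x):+.6f}  "
              f"tanh_approx={gelu_tanh(x):+.6f}  grad={gelu_grad(x):+.6f}")
```

Unlike ReLU, the gradient here is smooth and slightly negative for some negative inputs, which is one of the properties usually discussed when comparing the two.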

#geluactivationfunction, #geluactivationfunctioninneuralnetwork, #reluactivationfunction, #activationfunctioninneuralnetwork, #vanishinggradient, #selfgatedactivationfunction, #dropout

I Built a Neural Network from Scratch

Building a neural network FROM SCRATCH (no Tensorflow/Pytorch, just numpy & math)

But what is a neural network? | Chapter 1, Deep learning

How to Create a Neural Network (and Train it to Identify Doodles)

Neural Network from Scratch | Mathematics & Python Code

Neural Networks from Scratch - P.1 Intro and Neuron Code

Understanding AI from Scratch – Neural Networks Course

Neural Networks Explained from Scratch using Python

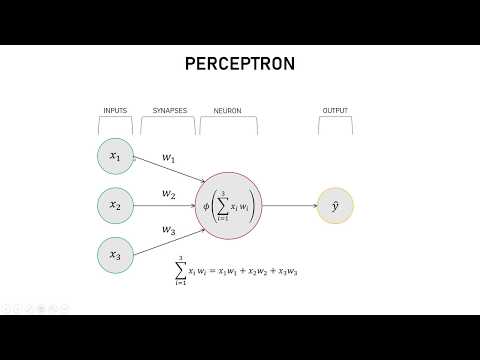

Building a Perceptron From Scratch - Beginner Friendly Neural Network Tutorial | Neural Networks

Create a Simple Neural Network in Python from Scratch

Neural Network From Scratch In Python

Neural Networks from Scratch - P.5 Hidden Layer Activation Functions

The Complete Mathematics of Neural Networks and Deep Learning

How to train simple AIs

Neural Networks from Scratch - P.4 Batches, Layers, and Objects

Neural Network from scratch using Only NUMPY

Convolutional Neural Network from Scratch | Mathematics & Python Code

Transformer Neural Networks Derived from Scratch

Neural Networks from Scratch - P.9 Introducing Optimization and derivatives

Running a NEURAL NETWORK in SCRATCH (Block-Based Coding)

Neural Networks From Scratch in Rust

Neural Networks from Scratch - P.6 Softmax Activation

The spelled-out intro to neural networks and backpropagation: building micrograd

Neural Networks from Scratch announcement
