I Built a Neural Network from Scratch

I'm not an AI expert by any means, and I've probably made some mistakes, so I apologise in advance :)

Also, I only used PyTorch to test the forward pass; everything else is written in pure Python (plus NumPy).
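For readers new to the term, a forward pass pushes an input through the network one layer at a time: each neuron takes a weighted sum of the previous layer's outputs, adds a bias, and applies an activation function. The sketch below shows that idea in plain Python only, assuming fully connected layers with a sigmoid activation. The function names `dense_forward` and `forward`, the weight layout, and the example weights are hypothetical and are not taken from the video.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_forward(inputs, weights, biases):
    """One fully connected layer (hypothetical layout).

    weights[j][i] is the weight from input i to neuron j,
    biases[j] is the bias of neuron j.
    Returns the sigmoid activation of every neuron in the layer.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs plus this neuron's bias.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(z))
    return outputs


def forward(inputs, layers):
    """Feed the input through each (weights, biases) layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_forward(activations, weights, biases)
    return activations


if __name__ == "__main__":
    # Hypothetical 2-input, 2-hidden, 1-output network with made-up weights.
    layers = [
        ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1]),
        ([[1.0, -1.0]], [0.0]),
    ]
    print(forward([1.0, 2.0], layers))
```

With NumPy, the inner loops of `dense_forward` collapse into a single matrix product, `sigmoid(W @ x + b)`. A framework such as PyTorch can then serve as a reference: feed the same weights and inputs to both and check that the outputs match.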

⭐ Other Social Media Links:

Current Subs: 14,219

I Built a Neural Network from Scratch

I Made an AI with just Redstone!

But what is a neural network? | Chapter 1, Deep learning

Building a neural network FROM SCRATCH (no Tensorflow/Pytorch, just numpy & math)

How to Create a Neural Network (and Train it to Identify Doodles)

Neural Network In 5 Minutes | What Is A Neural Network? | How Neural Networks Work | Simplilearn

I BUILT A NEURAL NETWORK IN MINECRAFT | Analog Redstone Network w/ Backprop & Optimizer (NO MODS...

Neural Networks Explained in 5 minutes

Getting realistic about AI’s potential with Nick Frosst from Cohere

Evolving Genetic Neural Network Optimizes Poly Bridge Problems

Neural Networks Explained from Scratch using Python

Build A Neural Net In Under 4 Minutes

Neural Network Architectures & Deep Learning

PyTorch in 100 Seconds

TensorFlow in 100 Seconds

How AIs, like ChatGPT, Learn

Machine Learning Explained in 100 Seconds

The Essential Main Ideas of Neural Networks

The spelled-out intro to neural networks and backpropagation: building micrograd

Create a Simple Neural Network in Python from Scratch

How to Build a Neural Network with TensorFlow and Keras in 10 Minutes

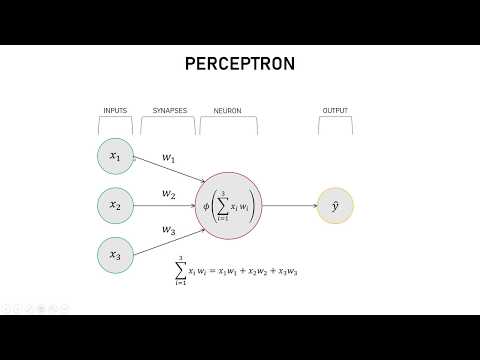

Neural Network Simply Explained | Deep Learning Tutorial 4 (Tensorflow2.0, Keras & Python)

What are Neural Networks || How AIs think

Coding a Neural Network: A Beginner's Guide (part 1)

Комментарии

0:09:15

0:09:15

0:17:23

0:17:23

0:18:40

0:18:40

0:31:28

0:31:28

0:54:51

0:54:51

0:05:45

0:05:45

0:21:47

0:21:47

0:04:32

0:04:32

0:38:37

0:38:37

0:09:59

0:09:59

0:17:38

0:17:38

0:03:52

0:03:52

0:09:09

0:09:09

0:02:43

0:02:43

0:02:39

0:02:39

0:08:55

0:08:55

0:02:35

0:02:35

0:18:54

0:18:54

2:25:52

2:25:52

0:14:15

0:14:15

0:13:46

0:13:46

0:11:01

0:11:01

0:12:14

0:12:14

0:11:35

0:11:35