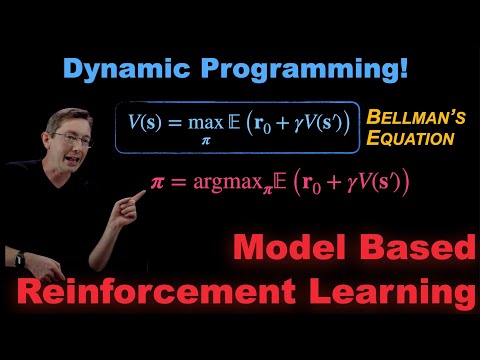

Model Based Reinforcement Learning: Policy Iteration, Value Iteration, and Dynamic Programming

Here we introduce dynamic programming, which is a cornerstone of model-based reinforcement learning. We demonstrate dynamic programming for policy iteration and value iteration, leading to the quality function and Q-learning.
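As a rough illustration of the value iteration mentioned above, here is a minimal sketch in plain Python. The three-state chain MDP, the transition table `P`, and the helper names `q_value` and `value_iteration` are hypothetical and chosen only for this example; they are not taken from the lecture or the book. The sketch assumes the model (transition probabilities and rewards) is fully known, which is the model-based setting.

```python
# Hypothetical toy MDP: P[state][action] = list of (probability, next_state, reward).
# State 2 is terminal (absorbing, zero reward). Reaching it from state 1 pays 1.
P = {
    0: {"left": [(1.0, 0, 0.0)], "right": [(1.0, 1, 0.0)]},
    1: {"left": [(1.0, 0, 0.0)], "right": [(1.0, 2, 1.0)]},
    2: {"left": [(1.0, 2, 0.0)], "right": [(1.0, 2, 0.0)]},
}


def q_value(P, V, s, a, gamma):
    """Quality function Q(s, a): expected reward plus discounted value of the next state."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a])


def value_iteration(P, gamma=0.9, tol=1e-10, max_iters=10_000):
    """Repeat the Bellman optimality update until the values stop changing."""
    V = {s: 0.0 for s in P}
    for _ in range(max_iters):
        delta = 0.0
        for s in P:
            best = max(q_value(P, V, s, a, gamma) for a in P[s])
            delta = max(delta, abs(best - V[s]))
            V[s] = best  # in-place update, so later states see the new value
        if delta < tol:
            break
    # Greedy policy: in each state pick the action with the largest Q(s, a).
    policy = {s: max(P[s], key=lambda a: q_value(P, V, s, a, gamma)) for s in P}
    return V, policy


V, policy = value_iteration(P)
print(V)
print(policy)
```

With a discount factor of 0.9, the value of state 1 converges to 1 and the value of state 0 to 0.9, and the greedy policy moves right in both non-terminal states. Policy iteration would instead alternate between evaluating a fixed policy and improving it greedily; the same `q_value` helper could be reused for the improvement step.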

This is a lecture in a series on reinforcement learning, following the new Chapter 11 from the 2nd edition of our book "Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control" by Brunton and Kutz.

This video was produced at the University of Washington

Model Based Reinforcement Learning: Policy Iteration, Value Iteration, and Dynamic Programming (0:27:10)

Why Choose Model-Based Reinforcement Learning? (0:15:01)

Reinforcement Learning Series: Overview of Methods (0:21:37)

How Are Value-Based, Policy-Based, and Model-Based Methods Different in Reinforcement Learning? (0:02:14)

Types of Reinforcement Learning: A Comprehensive Guide (0:02:05)

CS885 Lecture 9: Model-based RL (1:24:44)

Policies and Value Functions - Good Actions for a Reinforcement Learning Agent (0:06:52)

L6 Model-based RL (Foundations of Deep RL Series) (0:18:14)

Online Refresher Course on AI & Machine Learning Mastery | CFDET IIT (BHU) Varanasi | 22 Nov 202... (2:44:06)

Towards Model-Based Reinforcement Learning on Real Robots | AI & Engineering | Georg Martius (0:26:59)

SINDy-RL: Interpretable and Efficient Model-Based Reinforcement Learning (0:21:28)

Model Based RL Finally Works! (0:28:01)

DeepRL1.6 Model based versus Model free Reinforcement Learning Source (0:10:39)

Model-based reinforcement learning (0:09:51)

Efficient model-based reinforcement learning through optimistic policy search and planning (0:50:07)

MOPO: Model-Based Offline Policy Optimization (0:37:44)

An introduction to Policy Gradient methods - Deep Reinforcement Learning (0:19:50)

Monte Carlo And Off-Policy Methods | Reinforcement Learning Part 3 (0:27:06)

Model-Based Reinforcement Learning with Reinforcement Learning Toolbox (0:06:04)

Learning to Model What Matters // Model-Based Reinforcement Learning (0:12:00)

Q-Learning: Model Free Reinforcement Learning and Temporal Difference Learning (0:35:35)

Model-Based Reinforcement Learning with a Generative Model is Minimax Optimal (0:15:14)

No. 48 @ Model-Based Reinforcement Learning (MB-RL) @ Deep Learning 101 (2:01:29)

RLSS 2023 - Model-based Reinforcement Learning - Andreas Krause (presented by Felix Berkenkamp) (1:21:42)