filmov

tv

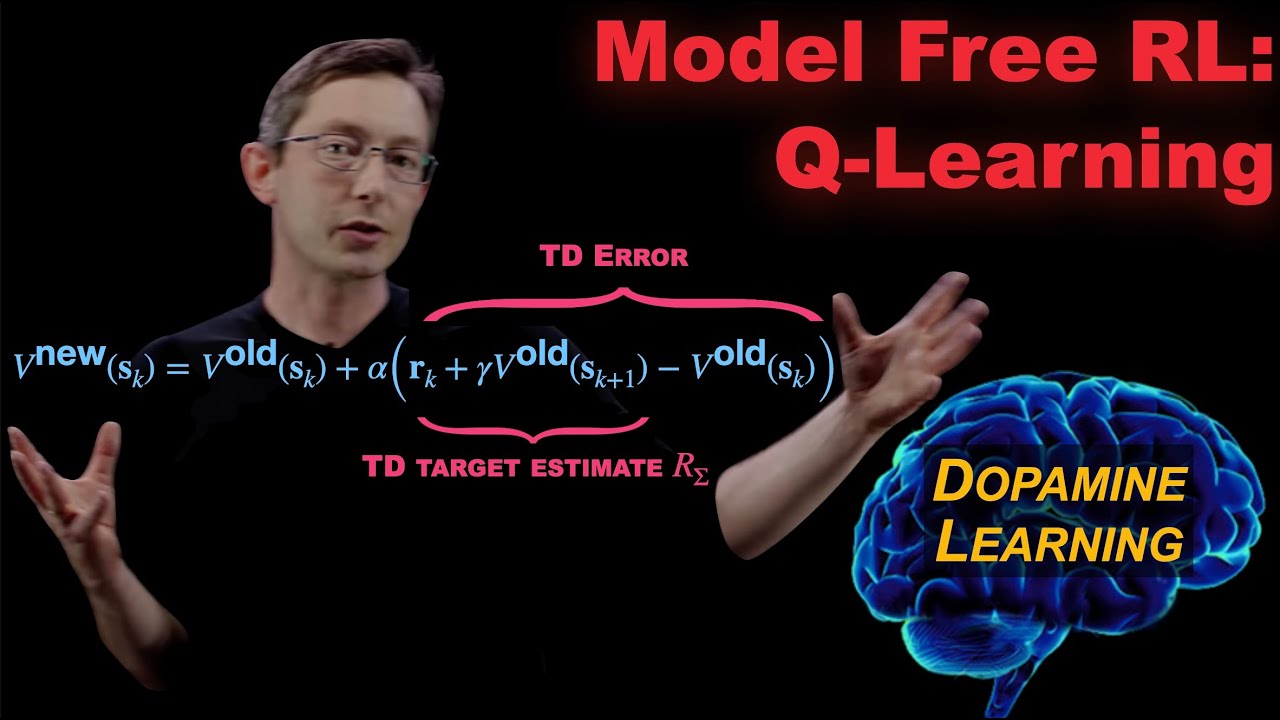

Q-Learning: Model Free Reinforcement Learning and Temporal Difference Learning

Показать описание

Here we describe Q-learning, which is one of the most popular methods in reinforcement learning. Q-learning is a type of temporal difference learning. We discuss other TD algorithms, such as SARSA, and connections to biological learning through dopamine. Q-learning is also one of the most common frameworks for deep reinforcement learning.

This is a lecture in a series on reinforcement learning, following the new Chapter 11 from the 2nd edition of our book "Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control" by Brunton and Kutz

This video was produced at the University of Washington

This is a lecture in a series on reinforcement learning, following the new Chapter 11 from the 2nd edition of our book "Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control" by Brunton and Kutz

This video was produced at the University of Washington

Q-Learning: Model Free Reinforcement Learning and Temporal Difference Learning

Q Learning Explained (tutorial)

Reinforcement Learning Series: Overview of Methods

Reinforcement Learning Tutorial: Q Learning, Model Free Learning and more! (With Python Code!)

Q Learning simply explained | SARSA and Q-Learning Explanation

Q-learning - Explained!

Q-Learning Explained - Reinforcement Learning Tutorial

OpenAI's Q*?: Reinforcement Learning, Model-Based vs. Model-Free Methods, and Q-Learning

Reinforcement Learning Basics

RL Course by David Silver - Lecture 5: Model Free Control

DeepRL1.6 Model based versus Model free Reinforcement Learning Source

Monte Carlo And Off-Policy Methods | Reinforcement Learning Part 3

MIT 6.S091: Introduction to Deep Reinforcement Learning (Deep RL)

AI Learns to Walk (deep reinforcement learning)

Q Learning | Building a Crawling Robot with Reinforcement Learning

Q Learning Intro/Table - Reinforcement Learning p.1

Maze Solver using Q Learning (Reinforcement Learning)

#1. Q Learning Algorithm Solved Example | Reinforcement Learning | Machine Learning by Mahesh Huddar

Reinforcement Learning Course - Full Machine Learning Tutorial

Overview of Deep Reinforcement Learning Methods

Model-Based and Model-Free Algorithms in Reinforcement Learning

Python + PyTorch + Pygame Reinforcement Learning – Train an AI to Play Snake

Q Learning In Reinforcement Learning | Q Learning Example | Machine Learning Tutorial | Simplilearn

Q Learning for Trading

Комментарии

0:35:35

0:35:35

0:09:27

0:09:27

0:21:37

0:21:37

0:33:10

0:33:10

0:09:46

0:09:46

0:11:54

0:11:54

0:06:48

0:06:48

0:08:44

0:08:44

0:02:28

0:02:28

1:36:31

1:36:31

0:10:39

0:10:39

0:27:06

0:27:06

1:07:30

1:07:30

0:08:40

0:08:40

1:05:19

1:05:19

0:24:04

0:24:04

0:04:34

0:04:34

0:11:11

0:11:11

3:55:27

3:55:27

0:24:50

0:24:50

0:01:02

0:01:02

1:38:34

1:38:34

0:24:55

0:24:55

0:10:50

0:10:50