filmov

tv

I Parsed 1 Billion Rows Of Text (It Sucked)

Показать описание

The 1 Billion Row Challenge (1BRC) was a wonderful idea from the Java community that spread way further than I would have expected. Obviously I have to talk about it!

SOURCES

S/O Ph4se0n3 for the awesome edit 🙏

SOURCES

S/O Ph4se0n3 for the awesome edit 🙏

I Parsed 1 Billion Rows Of Text (It Sucked)

How Fast can Python Parse 1 Billion Rows of Data?

Java, How Fast Can You Parse 1 Billion Rows of Weather Data? • Roy van Rijn • GOTO 2024

how fast can python parse 1 billion rows of data

How To Scare C++ Programmer

A Billion Rows per Second: Metaprogramming Python for Big Data

How Much Memory for 1,000,000 Threads in 7 Languages | Go, Rust, C#, Elixir, Java, Node, Python

Ville Tuulos - How to Build a SQL-based Data Warehouse for 100+ Billion Rows in Python

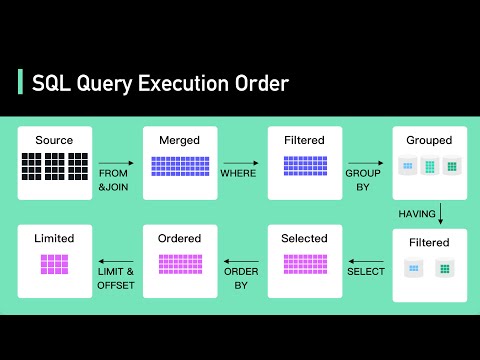

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

Industrial-scale Web Scraping with AI & Proxy Networks

Attention in transformers, step-by-step | DL6

Unlock petabyte-scale datasets in Azure with aggregations in Power BI | Azure Friday

Process HUGE Data Sets in Pandas

How to Create Database Indexes: Databases for Developers: Performance #4

Inserting 10 Million Records in SQL Server with C# and ADO.NET (Efficient way)

How to Make 2500 HTTP Requests in 2 Seconds with Async & Await

5 Secrets for making PostgreSQL run BLAZING FAST. How to improve database performance.

The Parquet Format and Performance Optimization Opportunities Boudewijn Braams (Databricks)

FAST - Billions of rows with Azure Data Explorer (ADX)

Watch Me Build A PDF Invoice Parsing Flow In Minutes

Python for Everybody - Full University Python Course

Solving distributed systems challenges in Rust

Ten SQL Tricks that You Didn’t Think Were Possible (Lukas Eder)

D. Richard Hipp - SQLite [The Databaseology Lectures - CMU Fall 2015]

Комментарии

0:39:23

0:39:23

0:16:31

0:16:31

0:42:16

0:42:16

0:11:31

0:11:31

0:01:16

0:01:16

0:29:06

0:29:06

0:26:21

0:26:21

0:43:37

0:43:37

0:05:57

0:05:57

0:06:17

0:06:17

0:26:10

0:26:10

0:14:48

0:14:48

0:10:04

0:10:04

0:12:47

0:12:47

0:06:45

0:06:45

0:04:27

0:04:27

0:08:12

0:08:12

0:40:46

0:40:46

0:10:32

0:10:32

0:35:43

0:35:43

13:40:10

13:40:10

3:15:52

3:15:52

0:45:10

0:45:10

1:07:08

1:07:08