filmov.tv

Process HUGE Data Sets in Pandas

Today we learn how to process huge data sets in Pandas by reading them in chunks.
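A minimal sketch of the chunked approach, assuming the data is a CSV file with hypothetical `key` and `value` columns (this is not the video's own code). Passing `chunksize` to `pd.read_csv` returns an iterator of DataFrames with at most that many rows each. Every chunk is reduced to a small partial result before the next one is read, so only one chunk of raw rows sits in memory at a time.

```python
import os
import tempfile

import pandas as pd


def sum_by_key_in_chunks(csv_path: str, chunk_rows: int = 100_000) -> pd.Series:
    """Sum a 'value' column per 'key' without loading the whole CSV at once.

    The column names 'key' and 'value' are hypothetical; adjust them to your data.
    """
    partial_sums = []
    # With chunksize set, read_csv yields DataFrames of up to chunk_rows rows
    # instead of reading the entire file into memory.
    with pd.read_csv(csv_path, chunksize=chunk_rows) as reader:
        for chunk in reader:
            # Shrink each chunk to one row per key before reading the next chunk.
            partial_sums.append(chunk.groupby("key")["value"].sum())
    # The same key can appear in several chunks, so combine the partial sums.
    return pd.concat(partial_sums).groupby(level=0).sum()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "sample.csv")
        pd.DataFrame(
            {"key": ["a", "b", "a", "c", "b", "a"], "value": [1, 2, 3, 4, 5, 6]}
        ).to_csv(path, index=False)
        # A tiny chunk size forces several chunks even for this small file.
        totals = sum_by_key_in_chunks(path, chunk_rows=2)
        print(totals)  # a -> 10, b -> 7, c -> 4
```

This pattern only works when the per-chunk result can be merged afterwards (sums, counts, min/max). Aggregations such as an exact median need a different strategy, because they cannot be combined from per-chunk partial results.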

◾◾◾◾◾◾◾◾◾◾◾◾◾◾◾◾◾

📚 Programming Books & Merch 📚

🌐 Social Media & Contact 🌐

How to work with big data files (5gb+) in Python Pandas!

Python Pandas Tutorial 15. Handle Large Datasets In Pandas | Memory Optimization Tips For Pandas

This INCREDIBLE trick will speed up your data processes.

Pythonic code: Tip 4 Processing large data sets with yield and generators

How To Read And Process Huge Datasets in Seconds Using Vaex Library| Data Science| Machine Learning

Big Data In 5 Minutes | What Is Big Data?| Big Data Analytics | Big Data Tutorial | Simplilearn

Processing large data files with Python multithreading

ANGULAR 14 tutorials by Mr. Ramesh Sir

How REST APIs support upload of huge data and long running processes | Asynchronous REST API

Element 84 Uses AWS to Process Large Datasets at Scale

Excel Tutorial - Using VLOOKUP with large tables

SE4AI: Managing and Processing Large Datasets

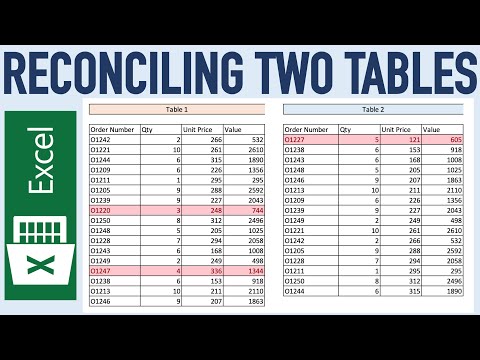

Excel Tutorial to Quickly Reconcile two sets of Data

How to Rapidly Process & Query Large Data Sets

How to Export Large Data Within Power BI | Data Exceeds the Limit Solution | Large Data Export

Try limiting rows when creating reporting for big data in Power BI

Removing Outliers From a Dataset

Excel: Quickly Select a Large Amount of Data/Cells

How to handle more than million rows in Excel - Interview Question 02

Data Cleaning Tutorial | Cleaning Data With Python and Pandas

Excel AI - data analysis made easy

10 Million Rows of Data Loaded into Excel (**see updated version of this - link in description**)

EXCEL TRICK - Select large data quickly in columns & rows WITHOUT click & drag or unwanted c...
